Adversarial Machine Learning

@adversarial_ml

I tweet about #MachineLearning and #MachineLearningSecurity.

ID: 990456089874345985

29-04-2018 05:01:48

14 Tweets

186 Followers

53 Following

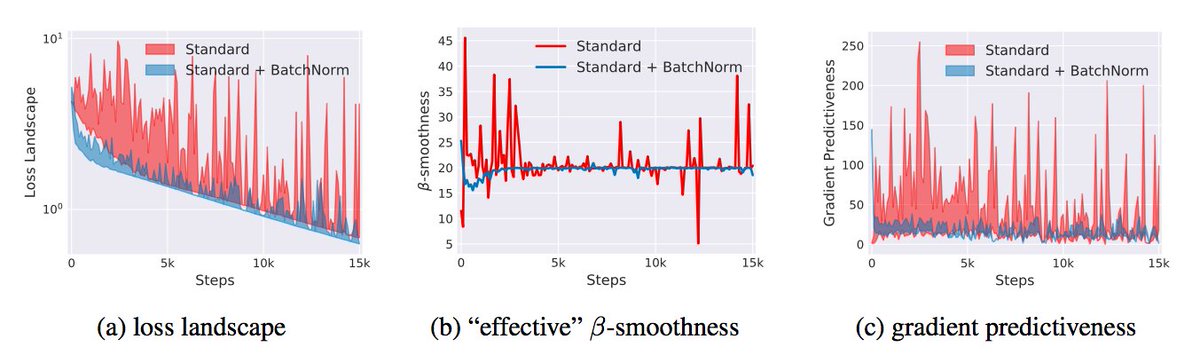

Think BatchNorm helps training due to reducing internal covariate shift? Think again. (What BatchNorm *does* seem to do though, both empirically and in theory, is to smoothen out the optimization landscape.) (with Shibani Santurkar, Dimitris Tsipras, Andrew Ilyas) arxiv.org/abs/1805.11604

Here's an article by the University of Toronto about our new work on adversarial attacks on face detectors that help you preserve your privacy. news.engineering.utoronto.ca/privacy-filter…

Adversarial robustness is not free: decrease in natural accuracy may be inevitable. Silver lining: robustness makes gradients semantically meaningful (+ leads to adv. examples w/ GAN-like trajectories) arxiv.org/abs/1805.12152 (Dimitris Tsipras, Shibani Santurkar, Logan Engstrom, Alex Turner)

Just read this paper. Short summary: when thinking of defenses to adversarial examples in ML, think of the threat model carefully. Nice paper. Also won the best paper award at ICML 2018 (ICML Conference). Congrats to the authors!! arxiv.org/abs/1802.00420

I'm speaking at the 1st Deep Learning and Security workshop (co-located with IEEE S&P) at 1:30 today: ieee-security.org/TC/SPW2018/DLS/ I'll discuss research into defenses against adversarial examples, including future directions. Slides and lecture notes here: iangoodfellow.com/slides/2018-05…