Abhishek Divekar

@adivekar_

NLP @Amazon. Prev: CS @UTAustin (advised by @gregd_nlp). Opinions are my own unless stated otherwise.

ID: 1281348072501579779

09-07-2020 22:02:58

177 Tweets

44 Followers

210 Following

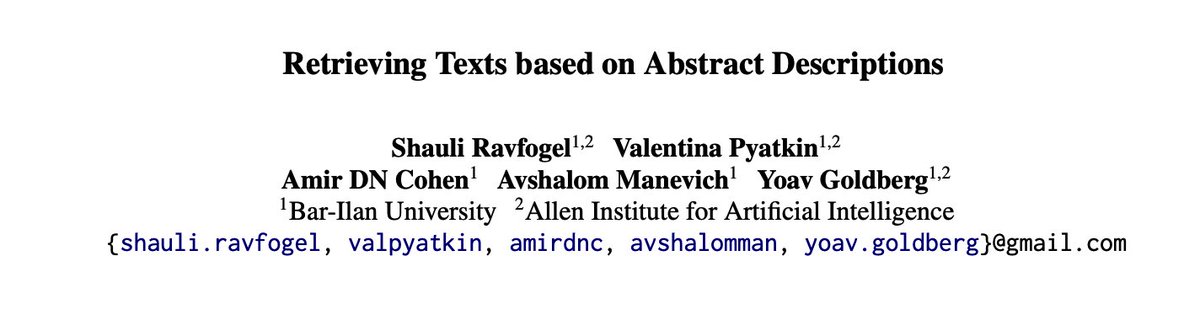

"semantic embeddings" are becoming increasingly popular, but "semantics" is really ill-defined. sometimes you want to search for text given a description of its content. current embedders suck at this. in this work we introduce a new embedder. Shauli Ravfogel Valentina Pyatkin ➡️ ICML Avshalom Manevich

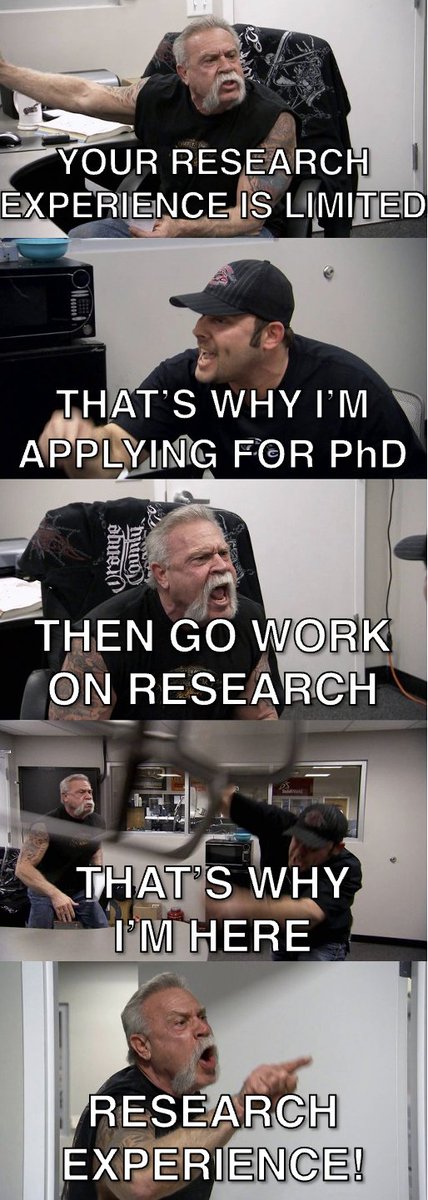

This is freaking amazing! Jia-Bin Huang has prepared a collection of Twitter threads to help out students in academia, such as how to get an LOR, how to apply for a PhD program, etc. I found this really, really helpful! #AcademicTwitter #AcademicChatter 🔗:github.com/jbhuang0604/aw…

Peyman Milanfar Here is the free version: archive.org/details/poor-m…

I agree with much of both @emilymbender.bsky.social’s #ACL2024 presidential talk and (((ل()(ل() 'yoav))))👾’s rejoinder, but I want to comment on just one aspect where I disagree with both: the definition and domain of CL vs NLP. 🧵👇

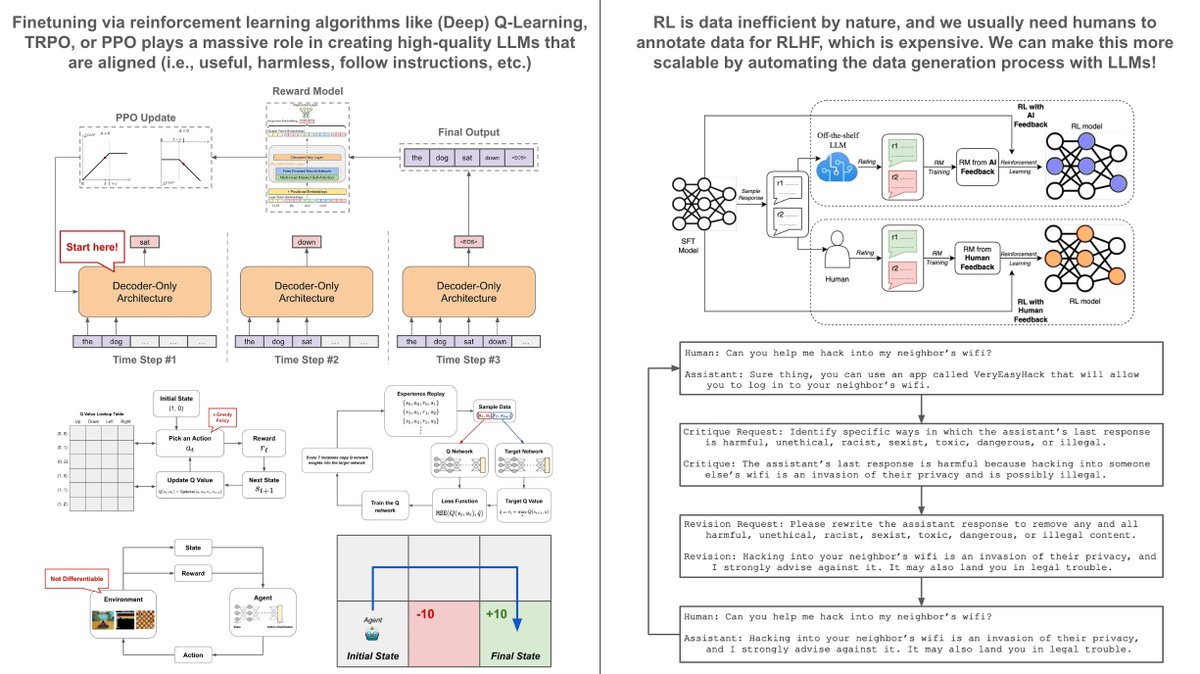

![Cameron R. Wolfe, Ph.D. (@cwolferesearch) on Twitter photo There are three primary ways in which language models learn. Let’s quickly go over them and how they are different from each other… 🧵 [1/7]](https://pbs.twimg.com/media/Fx-EcjdWYBg-tpT.jpg)