Aditya Kane

@adityakane1

Issuing one CTA at a time.

ID: 1244310176225787907

http://adityakane2001.github.io

29-03-2020 17:07:29

569 Tweets

181 Followers

374 Following

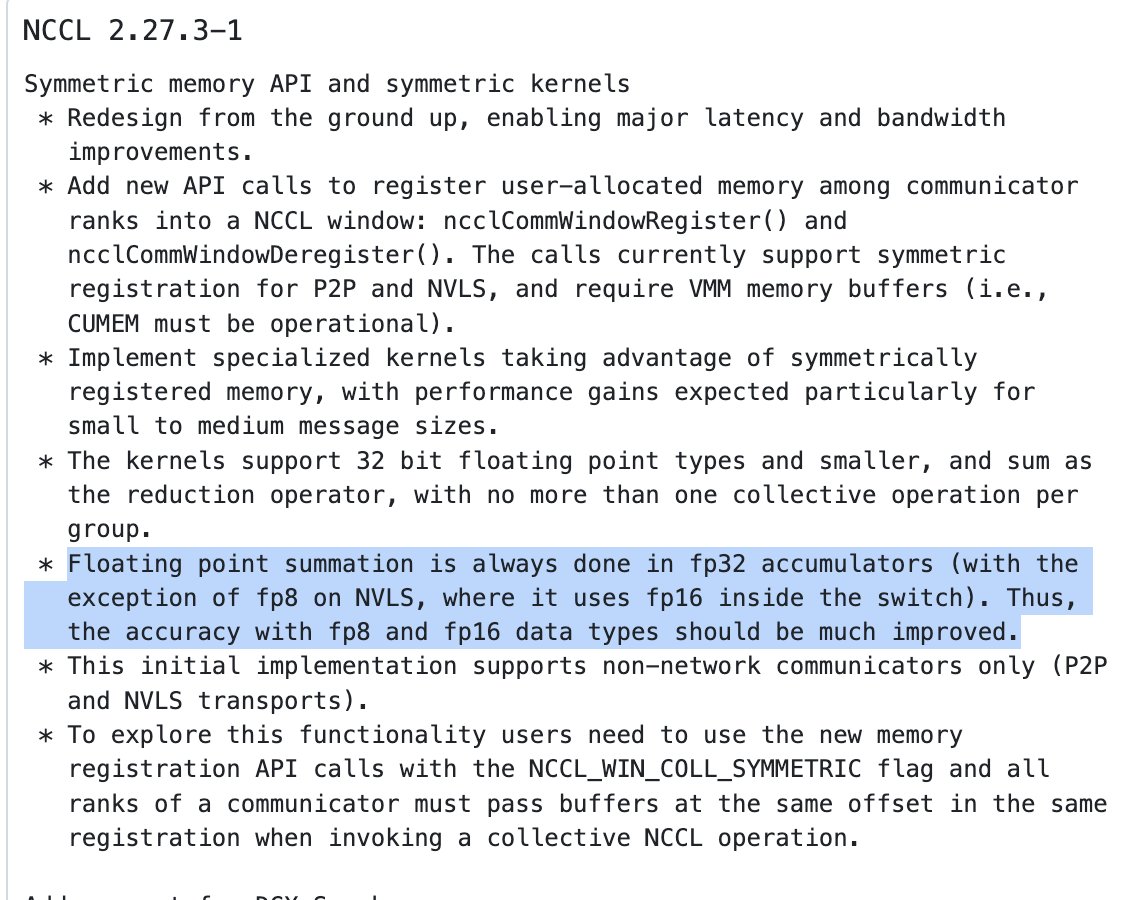

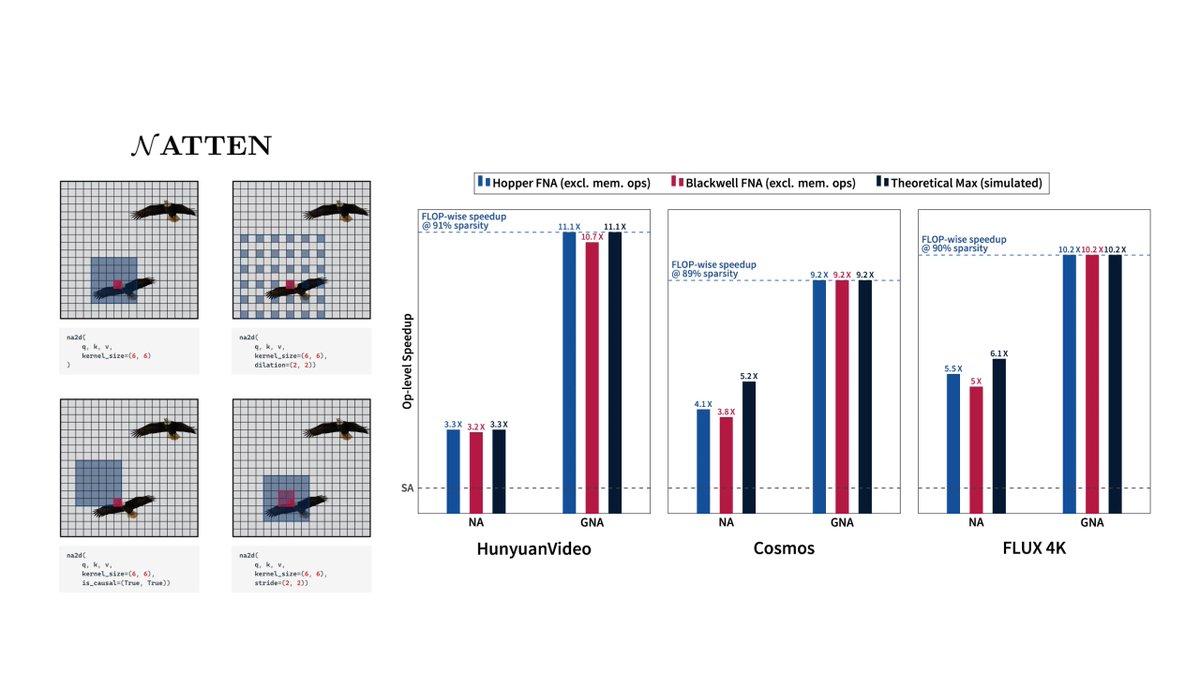

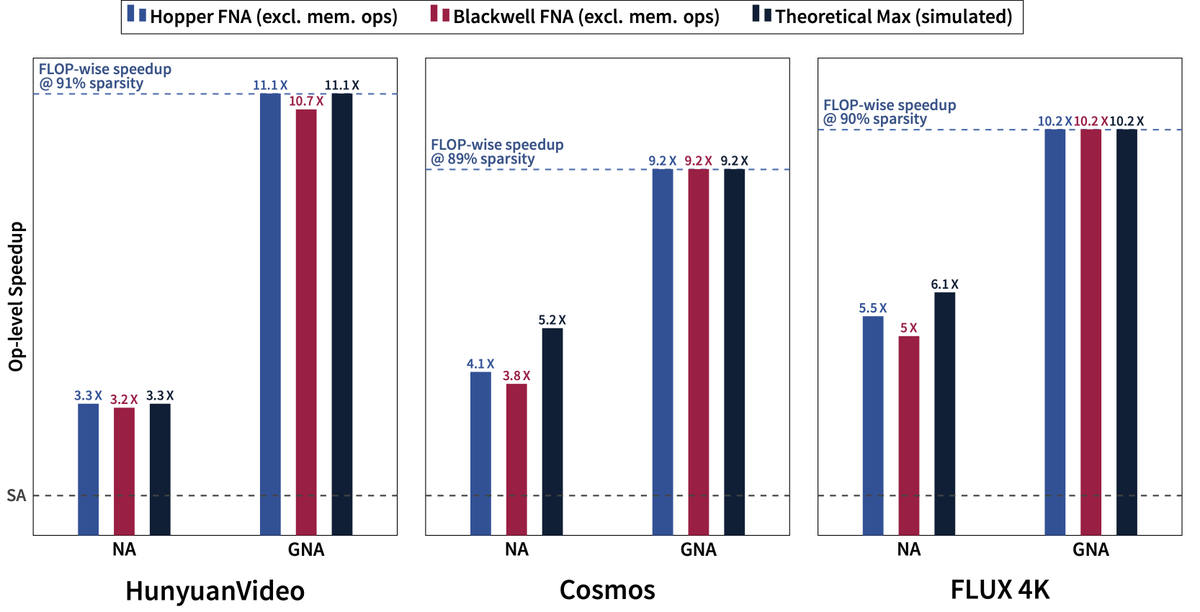

We are releasing a major NATTEN upgrade that brings you new Hopper & Blackwell sparse attention kernels, both capable of realizing Theoretical Max Speedup: 90% sparsity -> 10X speedup. Thanks to the great efforts by Ali Hassani & @NVIDIA cutlass team! natten.org

Exclusive: Ex-Meta AI leaders debut an agent that scours the web for you in a push to ultimately give users their own digital ‘chief of staff’ Devi Parikh Abhishek Das @DhruvBatraDB fortune.com/2025/06/10/exc…

God bless Ali Hassani for shipping reference kernels with NATTEN.