Daniel Winter

@_daniel_winter_

Intern at @GoogleAI

ID: 1714515636498395136

18-10-2023 05:36:16

29 Tweets

102 Followers

32 Following

ObjectDrop is accepted to #ECCV2024! 🥳 In this work from Google AI we tackle photorealistic object removal and insertion. Congrats to the team: Matan Cohen, Shlomi Fruchter, Yael Pritch, Alex Rav-Acha, Yedid Hoshen. Check out our project page: objectdrop.github.io

Giving a talk about common neurons in vision models and emergent representations in diffusion model weights today at European Conference on Computer Vision #ECCV2026 ☺️

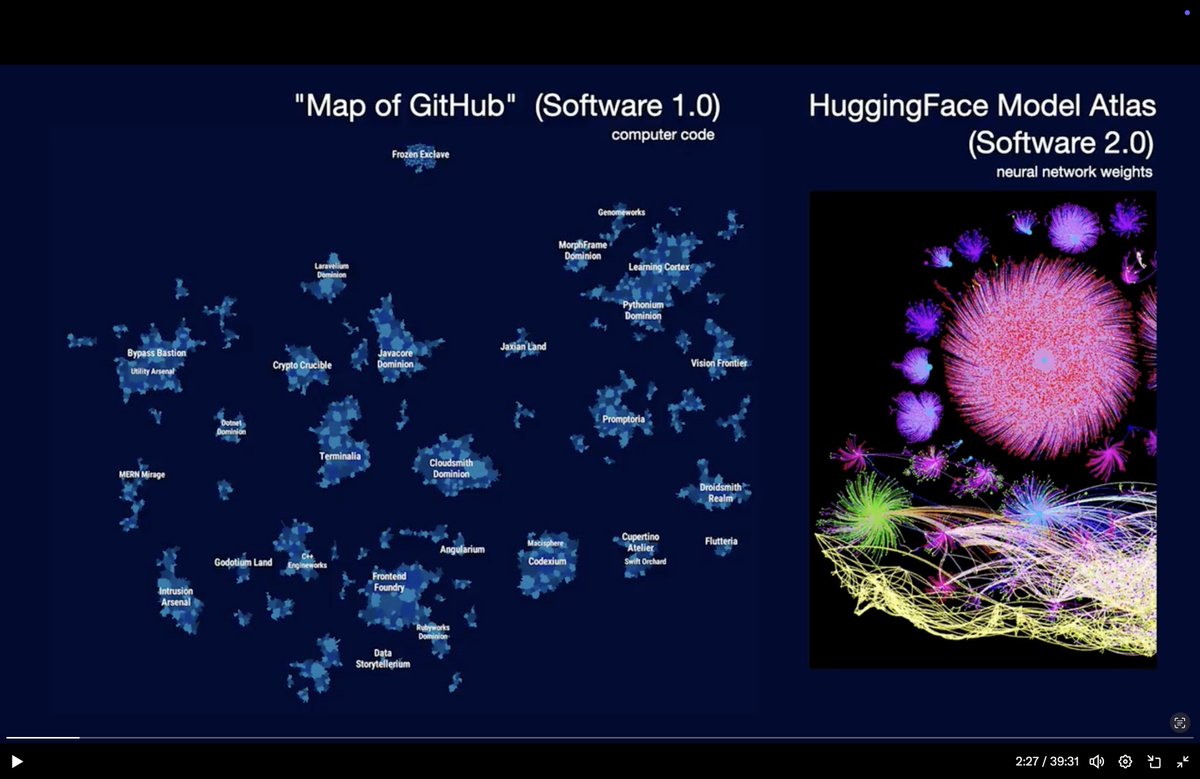

Andrej Karpathy Thanks for the inspiring talk (as always!). I'm the author of the Model Atlas. I'm delighted you liked our work; seeing the figure in your slides felt like an "achievement unlocked" 🙌 Would really appreciate a link to our work in your slides/tweet: arxiv.org/abs/2503.10633