Erik Daxberger

@edaxberger

ML @

ID: 810166630412066817

17-12-2016 16:55:51

827 Tweets

1.1K Followers

387 Following

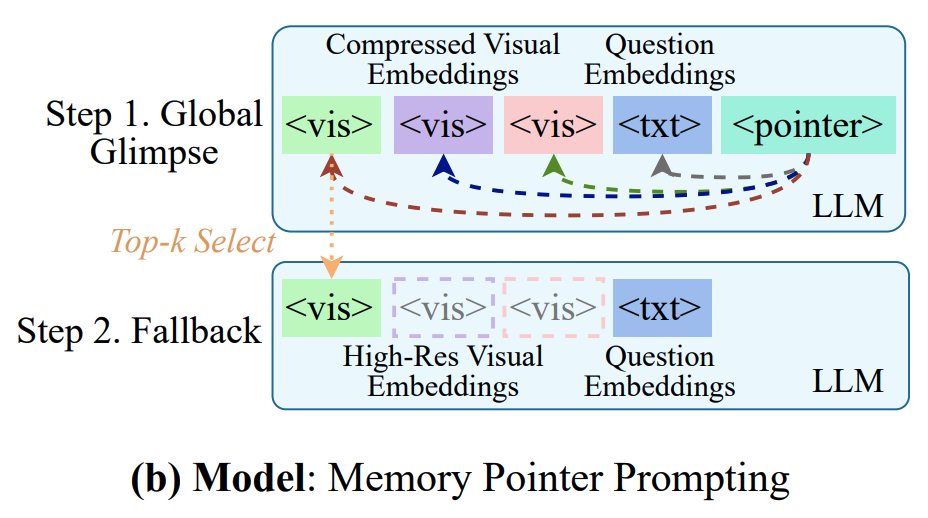

Check out MM-Ego, our new work towards building a multimodal foundation model for egocentric understanding! 😎 Led by our amazing intern HanRong YE, together with a great team that I am happy to have been part of!