Eugene Bagdasarian

@ebagdasa

Challenge AI security and privacy practices. Asst Prof at UMass @manningcics. Researcher at @GoogleAI. he/him 🇦🇲 (opinions mine)

ID: 2463105726

https://people.cs.umass.edu/~eugene/ 25-04-2014 12:01:56

368 Tweets

960 Followers

613 Following

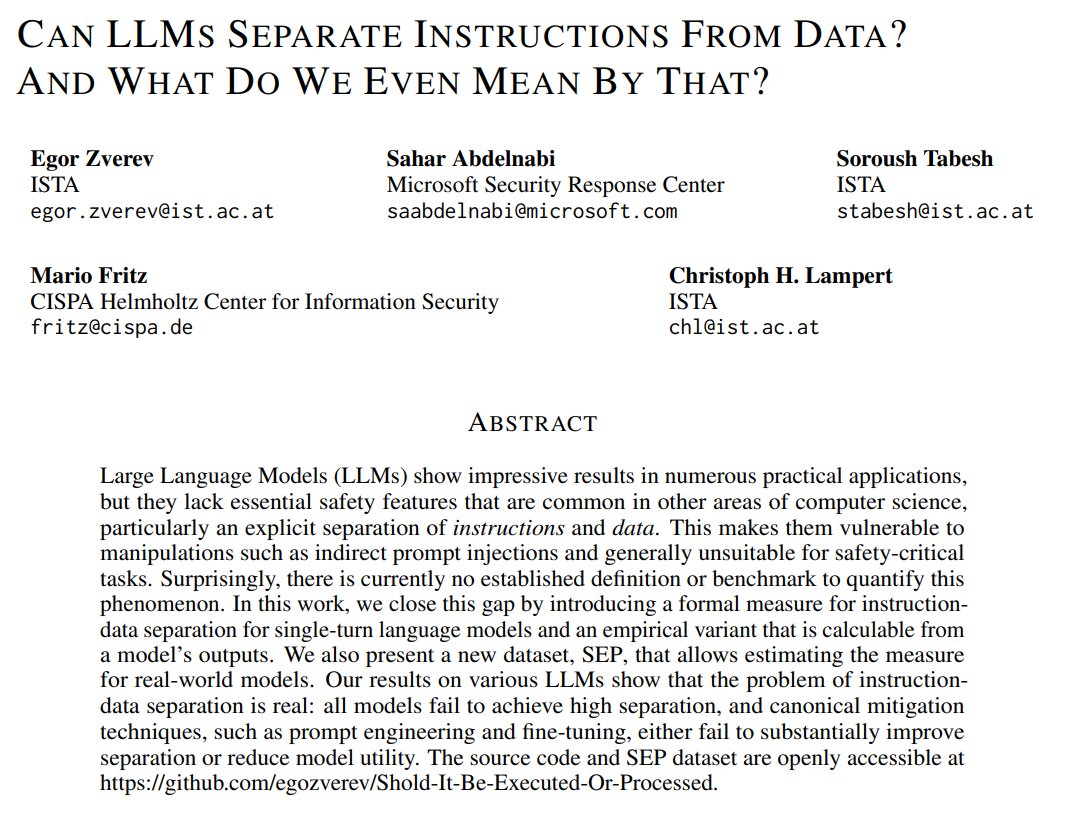

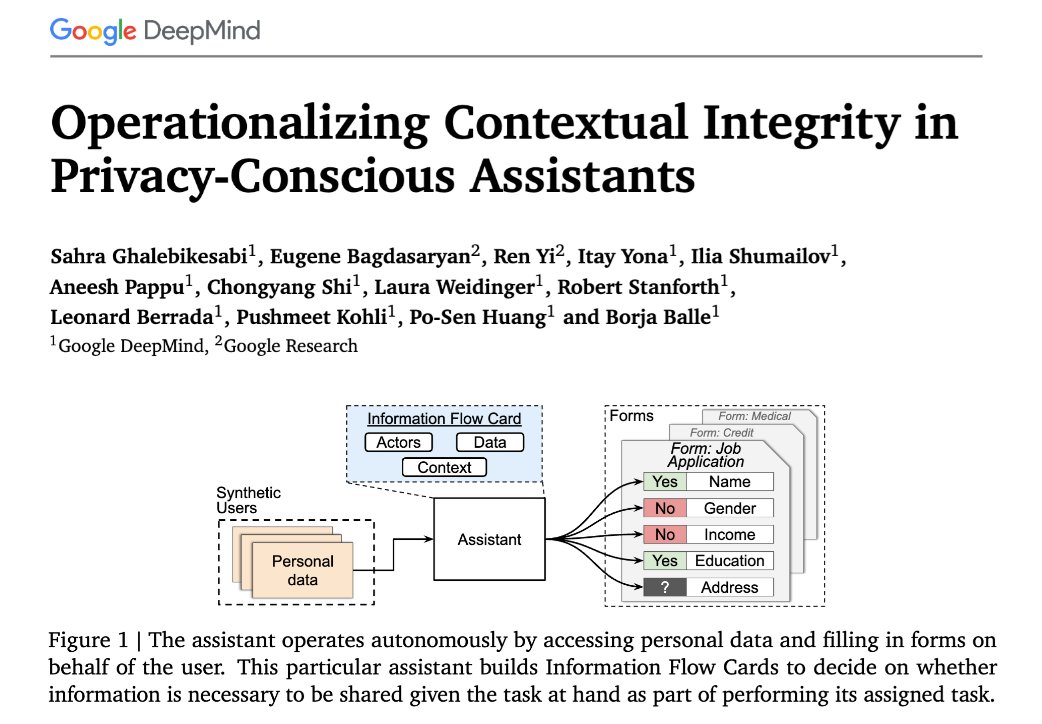

📢 New research from Google DeepMind & Google Research! We tackle the challenge of building AI assistants that leverage your data for complex tasks, all while upholding your privacy. 🤖🔐 Dive into our paper for the full details: arxiv.org/pdf/2408.02373 TLDR in 🧵

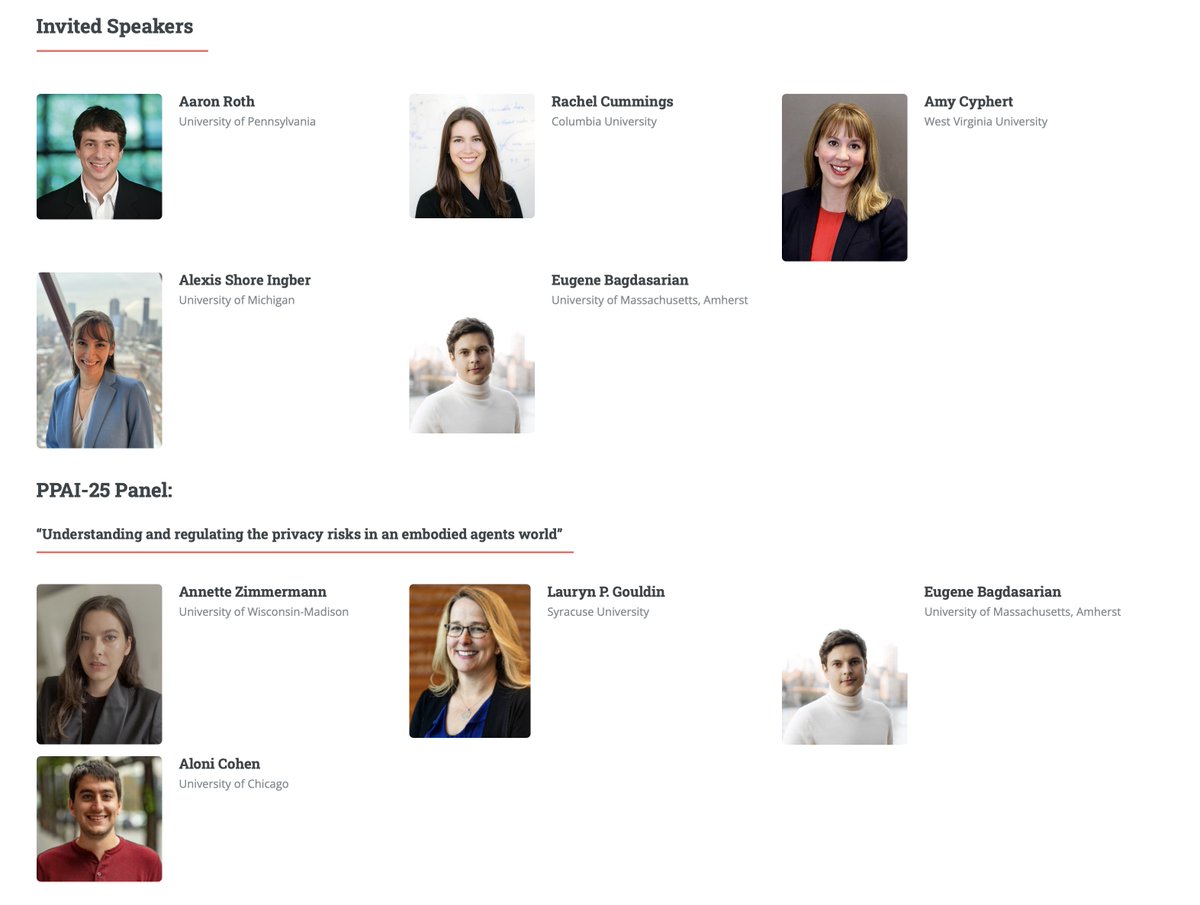

🧙 I am recruiting PhD students and postdocs to work together on making sure AI Systems and Agents are built safe and respect privacy (+ other social values). Apply to UMass Amherst Manning College of Information & Computer Sciences and enjoy a beautiful town in Western Massachusetts. Reach out if you have questions!

Nerd sniping is probably the coolest description of this phenomenon (Wojciech Zaremba et al. described it recently), but in our case overthinking didn't lead to any drastic consequences besides higher costs.