Deepak Vijaykeerthy

@dvijaykeerthy

ML Research & Engineering @IBMResearch. Ex @MSFTResearch. Opinions are my own! Tweets about books & food.

ID: 1308375612667486208

https://researcher.watson.ibm.com/researcher/view.php?person=in-deepakvij 22-09-2020 12:00:39

5.5K Tweets

496 Followers

906 Following

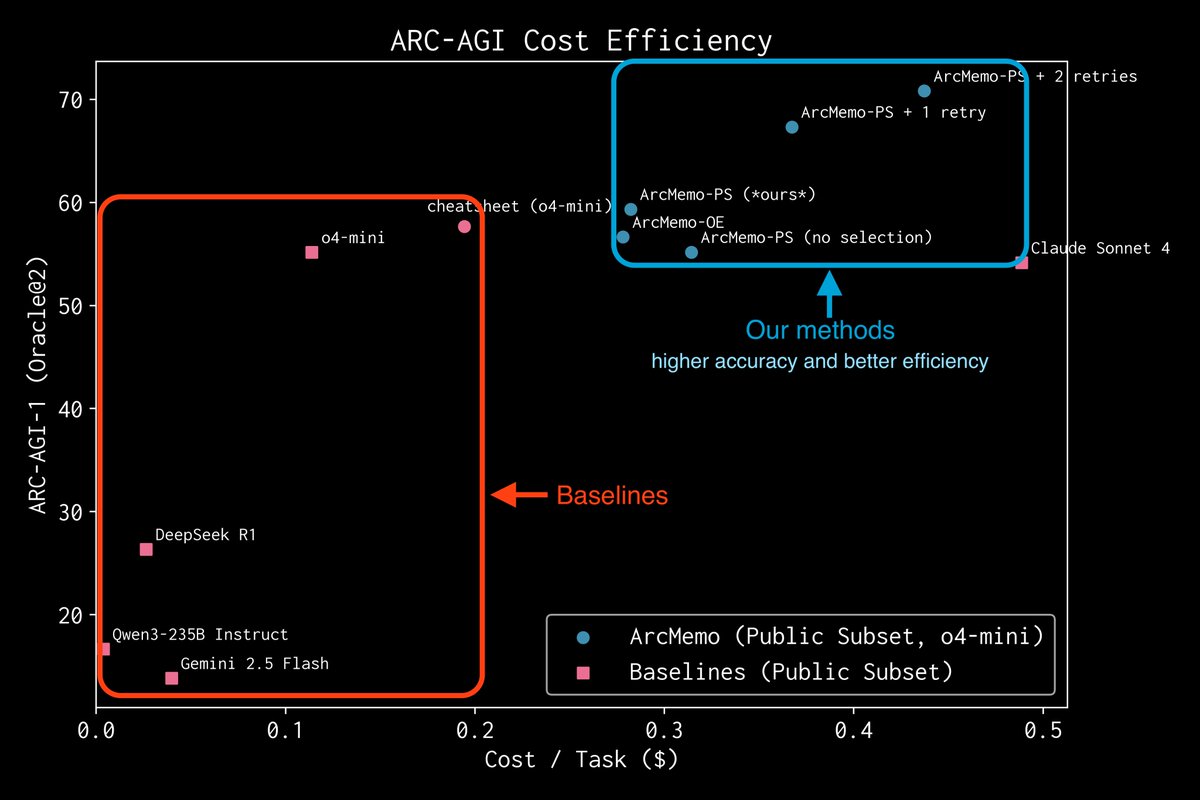

Presenting "A Sober Look at Progress in LM Reasoning" at the Conference on Language Modeling today. #COLM2025 We find that many “reasoning” gains fall within variance and make evaluation reproducible again. Today 11:00 AM - 1:00 PM 📍Room 710 - Poster #31 Lots of new results in the updated draft 👇