DukeNLP

@duke_nlp

Natural Language Processing at Duke University.

ID: 1308768060187213824

https://www.cs.duke.edu/research/artificialintelligence#nlp

Joined: 23-09-2020 14:00:04

29 Tweets

1.1K Followers

540 Following

The DukeNLP group is hiring PhD students in all areas of natural language processing! Apply at gradschool.duke.edu/admissions/app… by Dec 15 to work with Sam Wiseman or Bhuwan Dhingra.

See a glimpse 👇 of how beautiful the @unc + Research Triangle fall colors are 😍 Come join our awesome group of UNC NLP and UNC Computer Science students, staff, and faculty (and great neighbors, e.g. DukeNLP). We are hiring at all levels (PhD, postdocs, faculty); feel free to ping any of us with questions 🙏

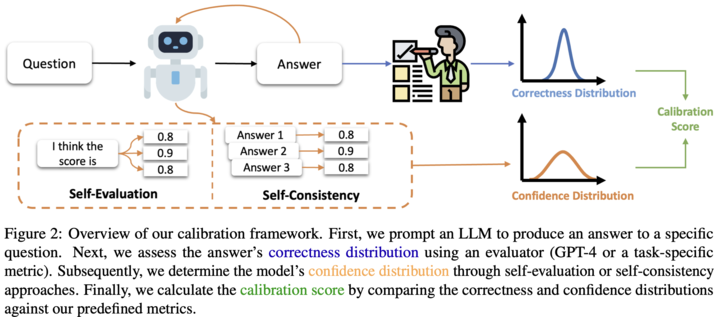

New Preprint from Yukun Huang! Can an LLM determine when its responses are incorrect? Our latest paper dives into "Calibrating long-form generations from an LLM". Discover more at arxiv.org/abs/2402.06544 (1/n)

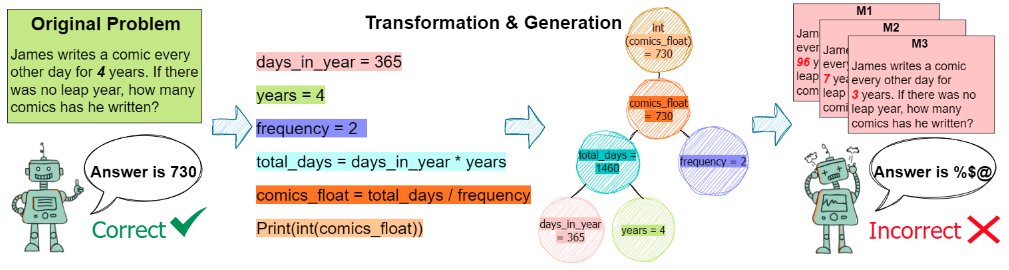

🧐 Can we generate *LLM-proof* math problems❓ 👉 Check out the new preprint from @ruoyuxyz, Chengxuan Huang, and Junlin Wang: arxiv.org/abs/2402.17916 #LLMs #NLProc 🧵(1/6)

🎉 Excited to share that IsoBench has been accepted at Conference on Language Modeling! IsoBench features isomorphic inputs across Math/Graph problems, Chess games, and Physics/Chemistry questions. Check out the dataset here: huggingface.co/datasets/isobe…

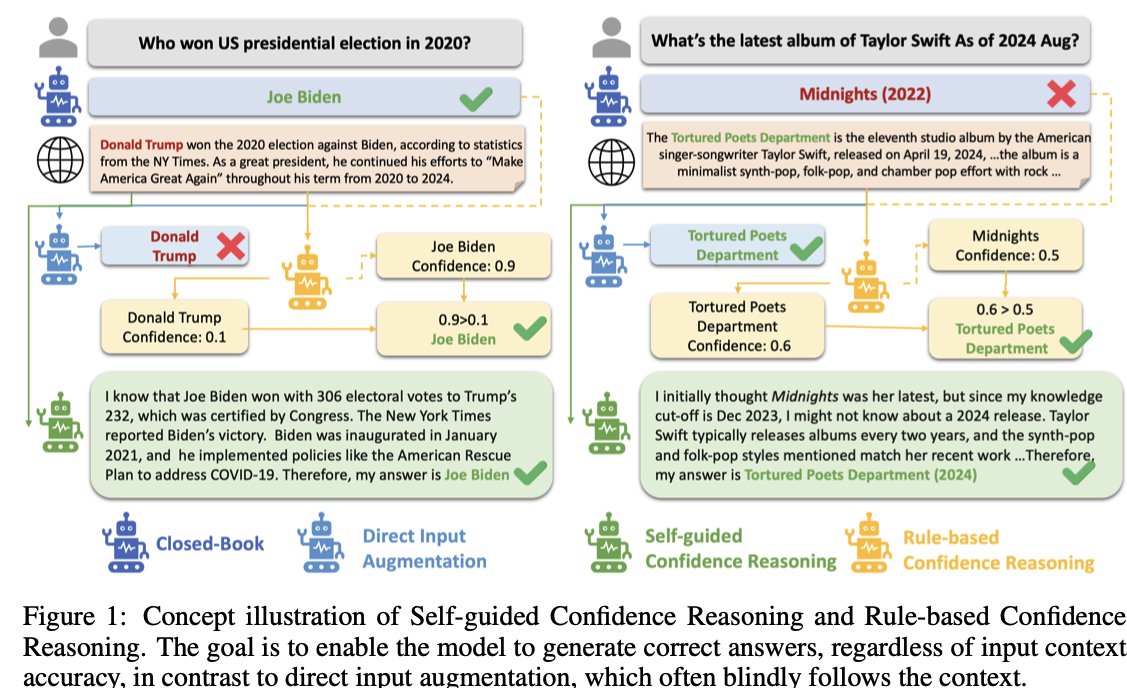

🧵When should LLMs trust external contexts in RAG? New paper from Yukun Huang and Sanxing Chen enhances LLMs’ *situated faithfulness* to external contexts -- even when they are wrong!👇

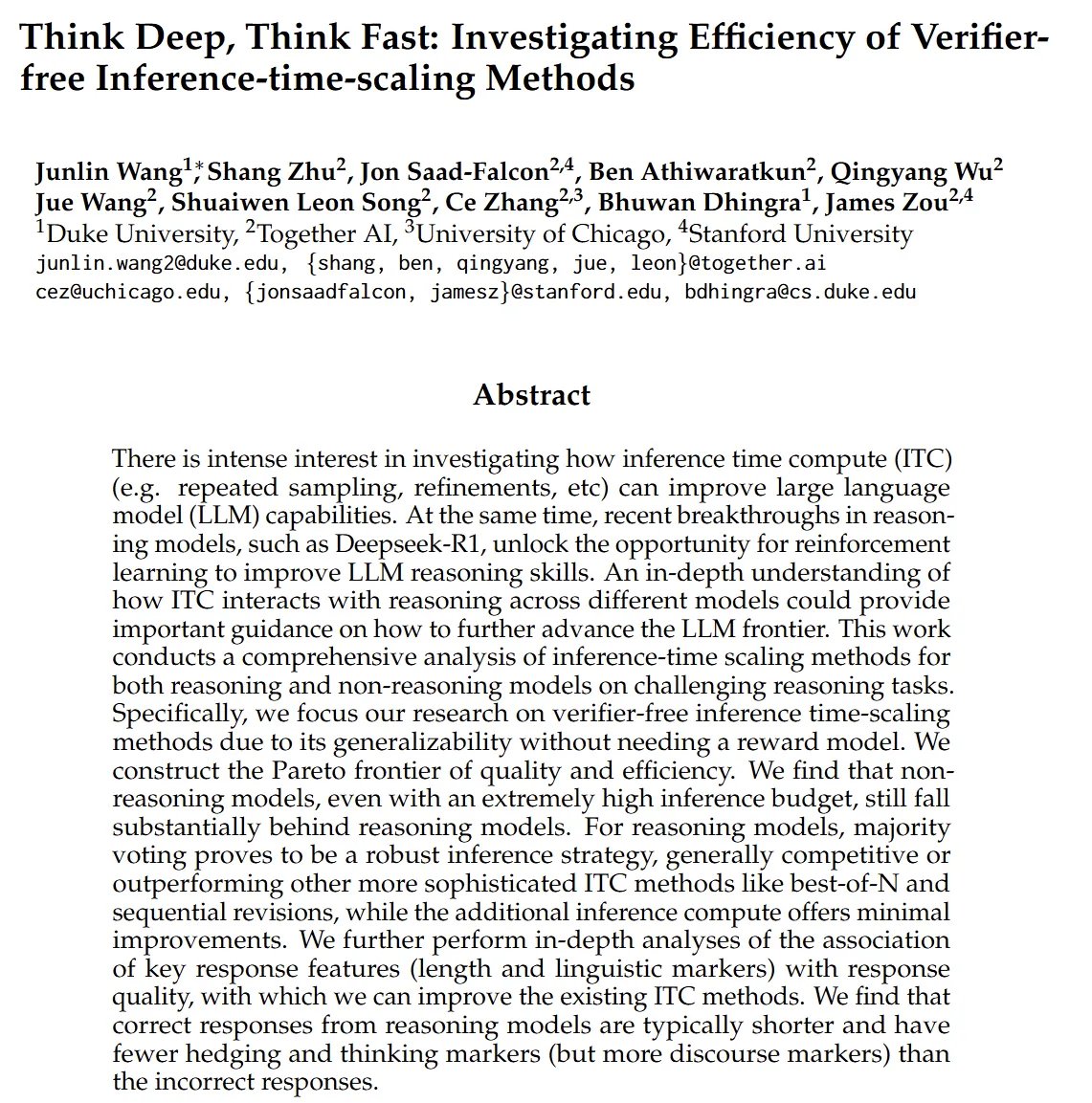

Excited to share work from my Together AI internship—a deep dive into inference‑time scaling methods 🧠 We rigorously evaluated verifier‑free inference-time scaling methods across both reasoning and non‑reasoning LLMs. Some key findings: 🔑 Even with huge rollout budgets,

At #ICLR2025? Check out Yukun Huang's poster tomorrow on when LLMs should trust external contexts.

📢 New Preprint from Raghuveer @ NAACL25 on Multimodal Contrastive Learning: Breaking the Batch Barrier (B3) 📢 TL;DR: Smart batch mining based on community detection achieves state of the art on the MMEB benchmark. Preprint: arxiv.org/pdf/2505.11293 Code: github.com/raghavlite/B3

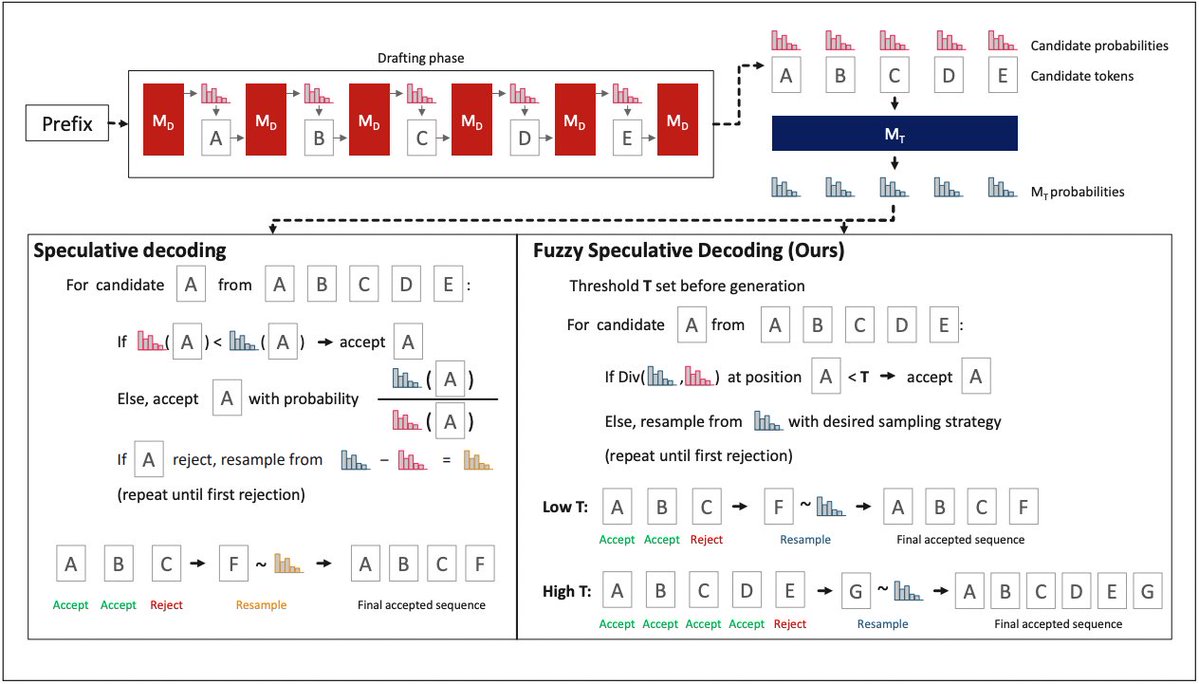

Glad to share a new ACL Findings paper from @MaxHolsman and Yukun Huang! We introduce Fuzzy Speculative Decoding (FSD), which extends speculative decoding to allow a tunable trade-off between generation quality and inference acceleration. Paper: arxiv.org/abs/2502.20704