Dongmin Park @ iclr25

@dongmin_park11

AI Researcher @Krafton_AI (@PUBG) | Prev-Intern @Meta, NAVER | PhD @ KAIST | Data-centric AI, Diffusion, LLM Agents

ID: 1503362786638065666

https://scholar.google.com/citations?view_op=list_works&hl=en&hl=en&user=4xXYQl0AAAAJ

14-03-2022 13:30:46

40 Tweets

76 Followers

114 Following

New paper: World models + Program synthesis by Wasu Top Piriyakulkij
1. World modeling on-the-fly by synthesizing programs w/ 4000+ lines of code
2. Learns new environments from minutes of experience
3. Positive score on Montezuma's Revenge
4. Compositional generalization to new environments

🧵When training reasoning models, what's the best approach? SFT, Online RL, or perhaps Offline RL? At KRAFTON AI and SK telecom, we've explored this critical question, uncovering interesting insights! Let’s dive deeper, starting with the basics first. 1) SFT SFT (aka hard

![Essential AI (@essential_ai) on Twitter photo [1/5]

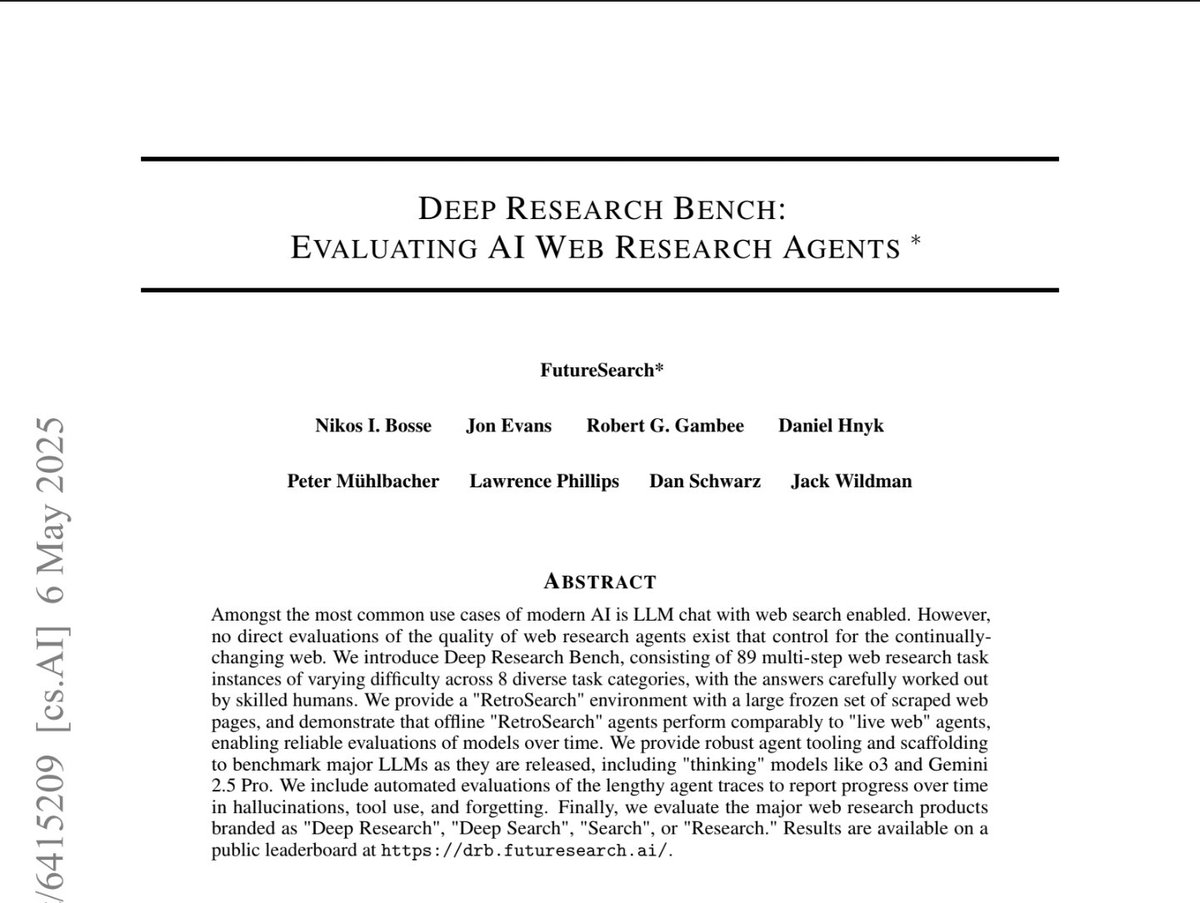

🚀 Meet Essential-Web v1.0, a 24-trillion-token pre-training dataset with rich metadata built to effortlessly curate high-performing datasets across domains and use cases! [1/5]

🚀 Meet Essential-Web v1.0, a 24-trillion-token pre-training dataset with rich metadata built to effortlessly curate high-performing datasets across domains and use cases!](https://pbs.twimg.com/media/Gtr5ewba8AA-Mdi.jpg)