Keith Duggar

@doctorduggar

MIT Doctor of Philosophy, strategist, polymath, engineer, lifelong learner, problem solver, and communicator. Ally to all humanity. @MLStreetTalk pod.

ID: 385167355

https://www.youtube.com/c/MachineLearningStreetTalk

05-10-2011 00:34:58

66 Tweets

1.1K Followers

19 Following

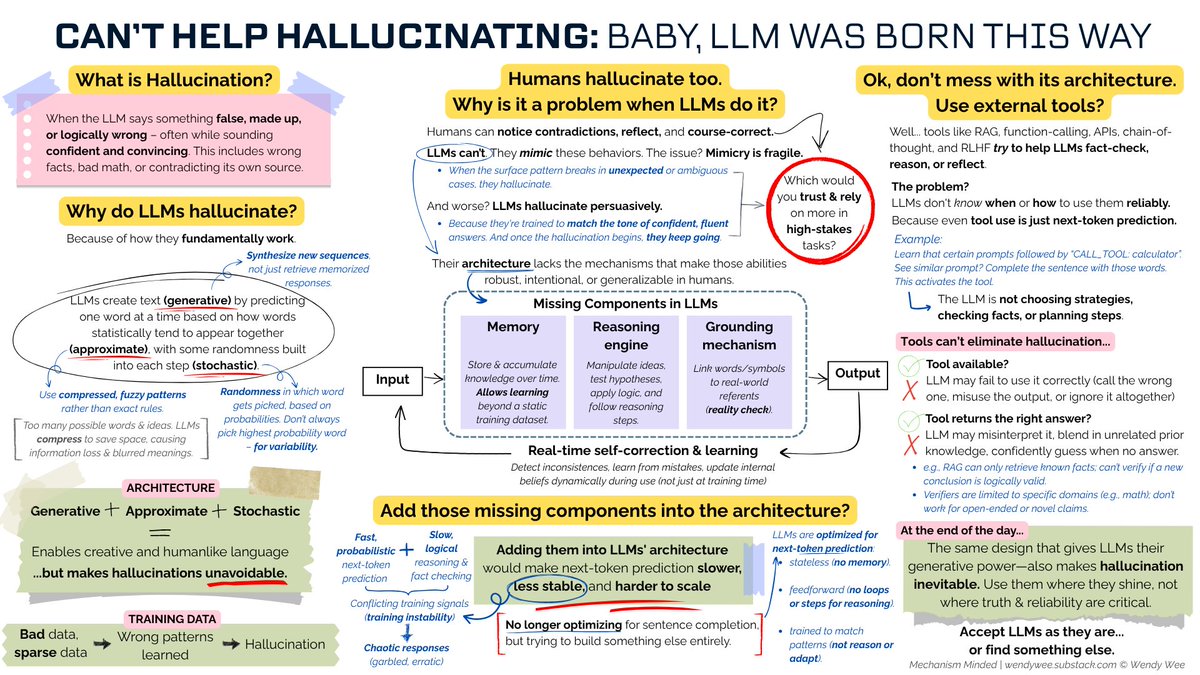

Hallucination is baked into LLMs. It can't be eliminated; it's how they work. Dario Amodei says LLMs hallucinate less than humans, but it's not about less or more. It's the differing & dangerous nature of the hallucination that makes it unlikely LLMs will cause mass unemployment. (1/n)

Had a fascinating chat with New York University Professor Andrew Gordon Wilson about his paper "Deep Learning is Not So Mysterious or Different." We dug into some of the biggest paradoxes in modern AI. If you've ever wondered how these giant models actually work, this is

We had so much fun interviewing one of our heroes Professor Karl Friston in London earlier today! With Keith Duggar

Rob Wiblin There is no evidence your toilet bowl has subjective experience, and I have no idea what empirical evidence you would expect to observe if it did. Should we err on the side of caution? Imagine the suffering.

Professor Karl Friston on starting out as a researcher, AI therapy and ... epistemic foraging! With Keith Duggar

CraftGPT, an insane in the membrane achievement, a build of ChatGPT in Minecraft! Sam Altman OpenAI Machine Learning Street Talk notch Minecraft youtube.com/watch?v=VaeI9Y…

zenitsu_apprentice Good question, it's basically entirely hand-written (with tab autocomplete). I tried to use claude/codex agents a few times but they just didn't work well enough at all and net unhelpful, possibly the repo is too far off the data distribution.

Judd Rosenblatt The roleplay hypothesis should be the other way around? The models are SFT/RLed to say they're not human, and being trained to mimic human data would mean they would by default claim to be conscious. I would imagine the first "fit" learned in SFT/RLHF to respond to questions