Di Wu

@diwunlp

PhD candidate in MT/NLP/ML @UvA_Amsterdam, working with @c_monz.

ID: 1145373242233720832

https://moore3930.github.io/

30-06-2019 16:47:15

166 Tweets

140 Followers

336 Following

Next week I'll be in Vienna at the ICML Conference! Want to learn more about how to explicitly model embeddings on a hypersphere and encourage dispersion during training? Come to the GRaM Workshop poster session 2 on 27.07. Shoutout to my collaborators Hua Chang Bakker and timorous bestie 😷 💫

Come check out our poster tomorrow at the GRaM Workshop at ICML 2024 ICML Conference if you want to discuss the dispersion of text embeddings on hyperspheres! 27.07 at poster session 2. #ICML2024

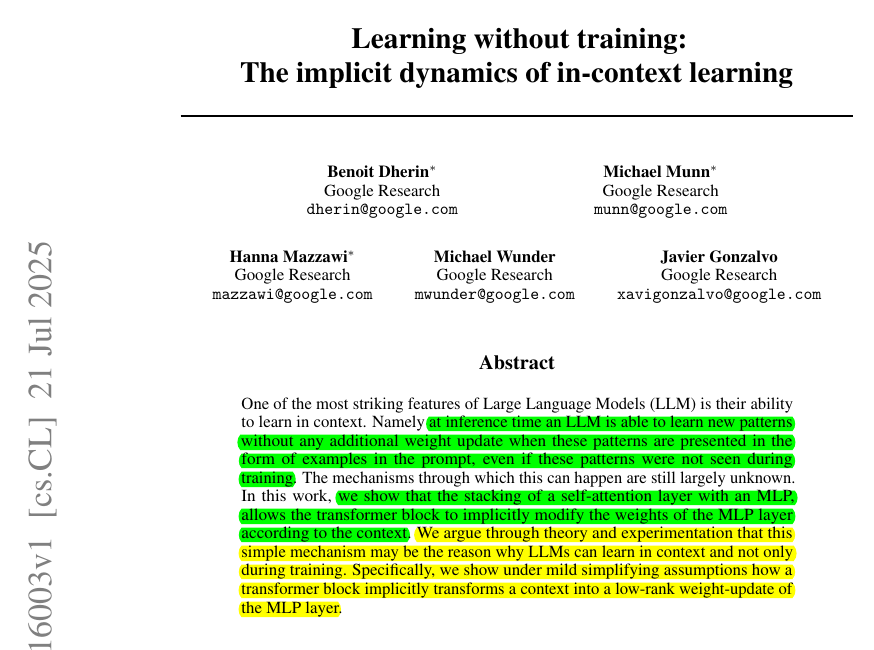

Beautiful Google Research paper. LLMs can learn in context from examples in the prompt, can pick up new patterns while answering, yet their stored weights never change. That behavior looks impossible if learning always means gradient descent. The mechanisms through which this