Yao Lu

@yaolu_nlp

PhD Student @ucl_nlp, former member of @UWaterloo @Mila_Quebec and @AmiiThinks

ID: 835838876174295040

http://yaolu.github.io

26-02-2017 13:08:12

31 Tweets

229 Followers

546 Following

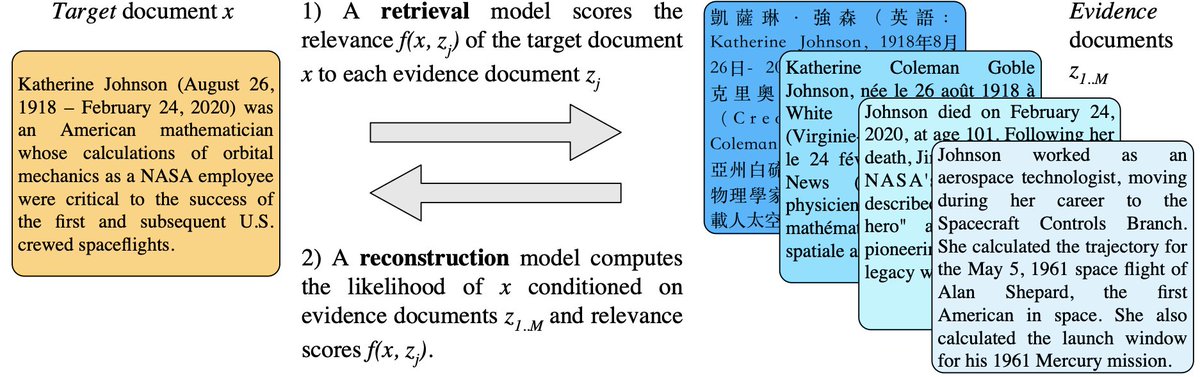

#ICLR2020 paper on Differentiable Reasoning over a Virtual Knowledge Base: an efficient, end-to-end differentiable framework for complex multi-hop QA over a large text corpus. arxiv.org/abs/2002.10640 w/ Dhingra, Zaheer, Balachandran, Graham Neubig, William Cohen

🚨💫 We are delighted to have Shishir Patil at our UCL Computer Science NLP Meetup *Monday 1st Nov 6:30pm GMT*. The event will be *hybrid*. Due to room capacity, there are *two links* to sign up, depending on whether you attend in person or online. Details in: meetup.com/ucl-natural-la…

David Attenborough is now narrating my life. Here's a GPT-4-vision + ElevenLabs Python script so you can star in your own Planet Earth:

Prompt engineering often focuses on the *content* of the prompt, but in reality the *formatting* of the prompt can have an equal or larger effect, especially for less powerful models. This is a great deep dive into this phenomenon by Melanie Sclar et al.

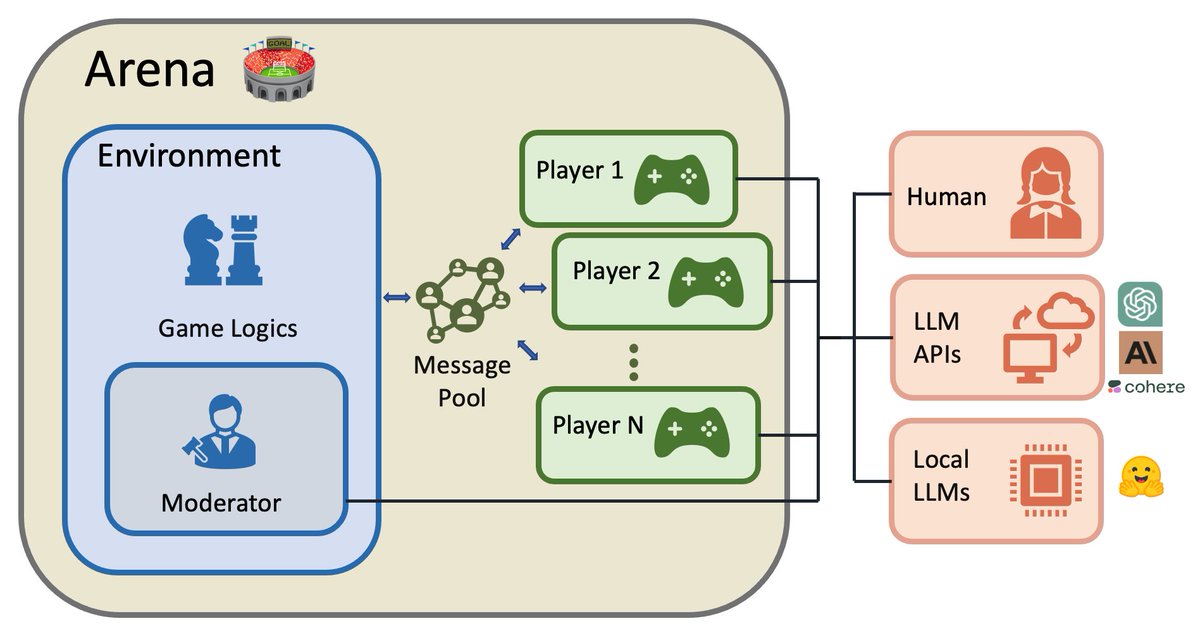

Congrats on the launch, Yuxiang (Jimmy) Wu and Zhengyao Jiang! LFG

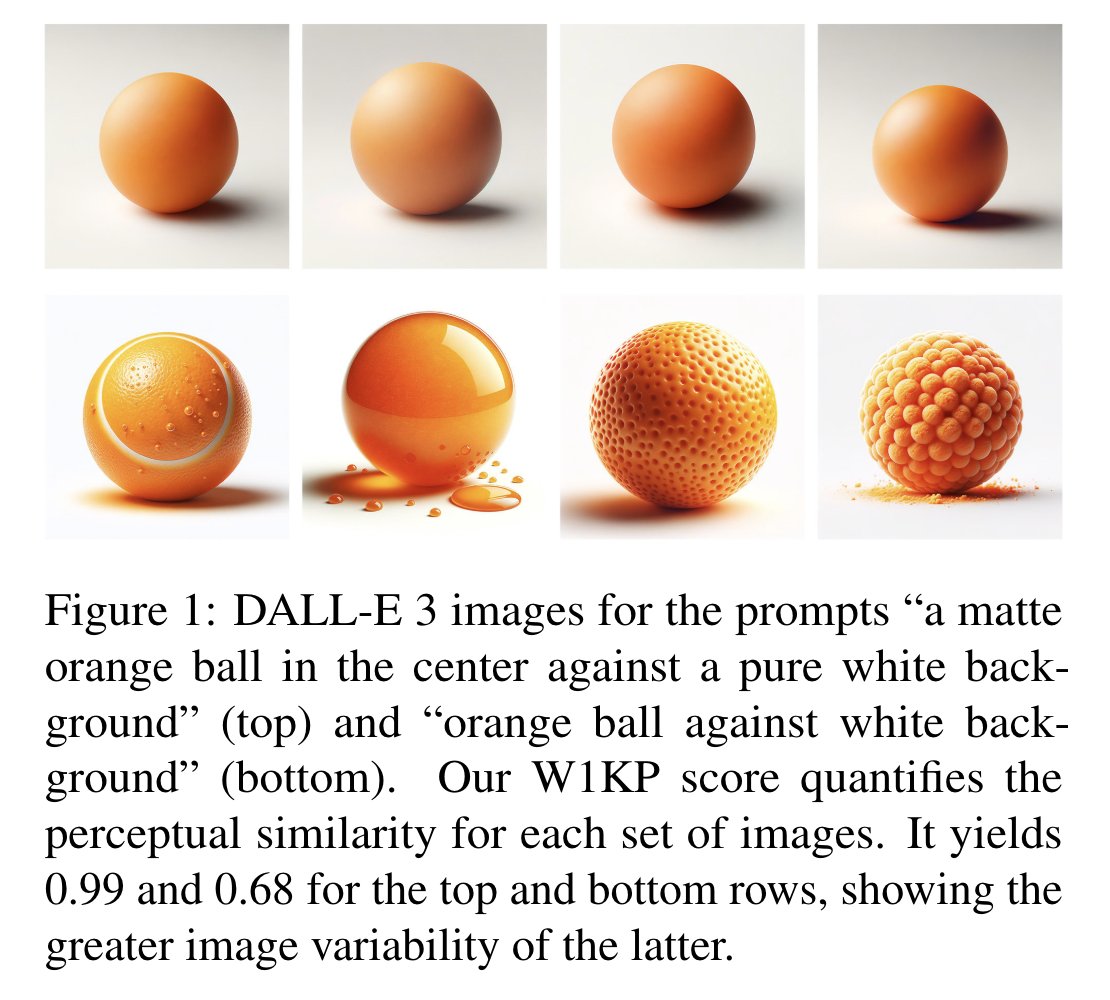

They say a picture is worth a thousand words... but work led by ralphtang.eth finds words worth a thousand pictures! arxiv.org/abs/2406.08482