Xiaomeng Xu

@xiaomengxu11

PhD student in robotics @Stanford | Prev @Tsinghua_Uni

ID: 1547468355249979392

https://xxm19.github.io/

14-07-2022 06:30:04

36 Tweets

562 Followers

215 Following

Enjoying the first day of #RSS2025? Consider coming to our workshop 🤖 Robot Hardware-Aware Intelligence on Wed at Robotics: Science and Systems! Thank you to everyone who contributed 🙌 We'll have 16 lightning talks and 11 live demos! More info: rss-hardware-intelligence.github.io

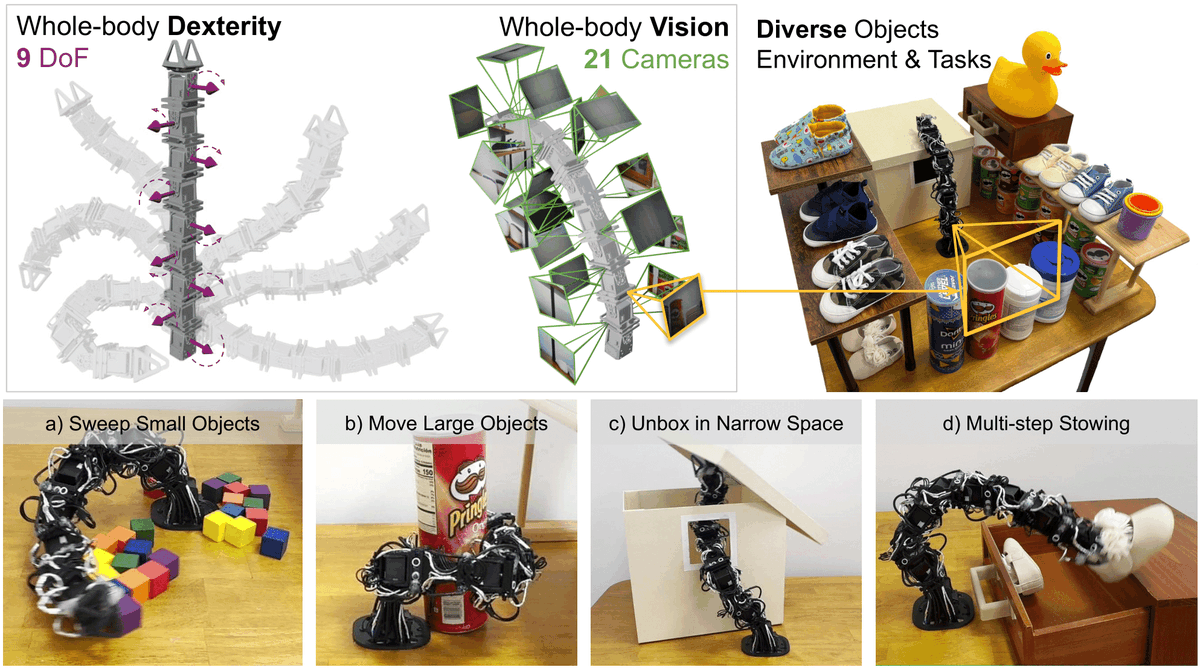

Robot learning has largely focused on standard platforms—but can it embrace robots of all shapes and sizes? In Xiaomeng Xu's latest blog post, we show how data-driven methods bring unconventional robots to life, enabling capabilities that traditional designs and control can't

Missed our RSS workshop? Our recordings are online: youtube.com/@hardware-awar…. All talks were awesome, and we had a very fun panel discussion session 🧐 Huge thanks to our organizers for all the hard work: Huy Ha, Xiaomeng Xu, Zhanyi Sun, Yuxiang Ma, Xiaolong Wang, Mike Tolley

TRI's latest Large Behavior Model (LBM) paper landed on arXiv last night! Check out our project website: toyotaresearchinstitute.github.io/lbm1/ One of our main goals for this paper was to put out a very careful and thorough study on the topic to help people understand the state of the

How can we prevent behavior cloning policies from drifting OOD on long-horizon manipulation tasks? Check out Latent Policy Barrier (LPB), a plug-and-play test-time optimization method that keeps BC policies in-distribution with no extra demos or fine-tuning: project-latentpolicybarrier.github.io