Jinsheng Wang

@wolfwjs

Make it simple. Make it work.

ID: 1061955660156329984

12-11-2018 12:15:34

97 Tweets

60 Followers

742 Following

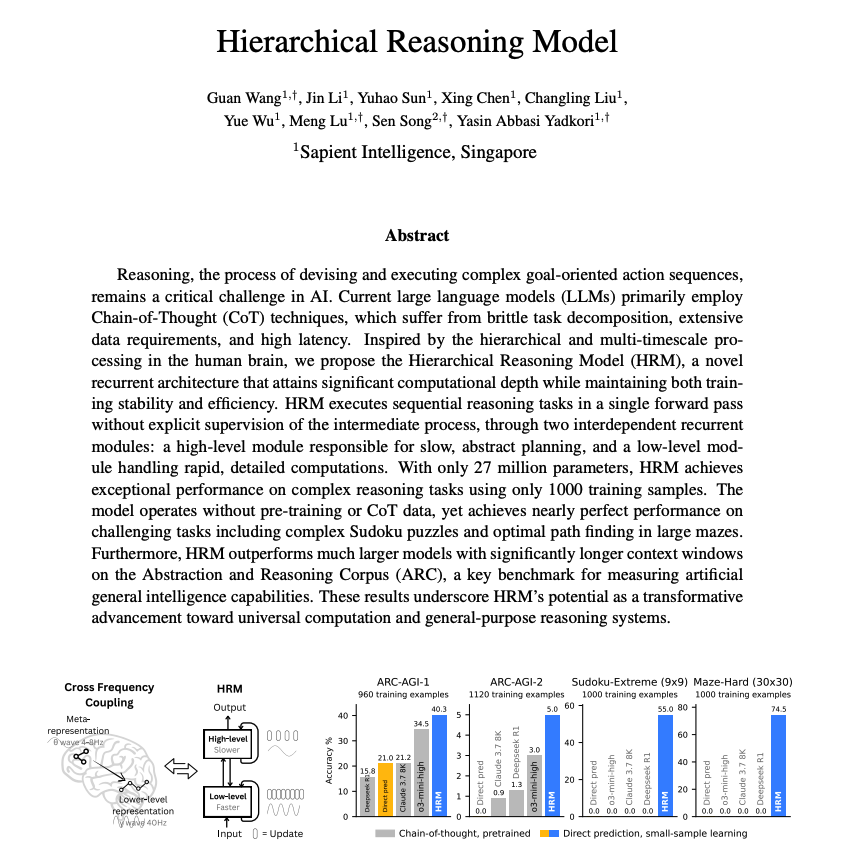

Classic scaling law curves from Generalist: more data, lower validation loss — standard ML story. But if we swap the y-axis to real-world success rate, do the same trends hold? Does 0.0105 vs 0.0115 loss actually mean a more reliable policy?