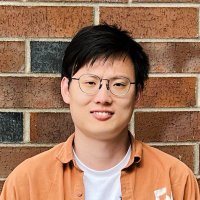

Weiting (Steven) Tan

@weiting_nlp

Ph.D. student at @jhuclsp, Student Researcher @AIatMeta | Prev @AIatMeta @Amazon Alexa AI

ID: 1414244140544573442

https://steventan0110.github.io/ 11-07-2021 15:24:25

65 Tweets

173 Followers

269 Following

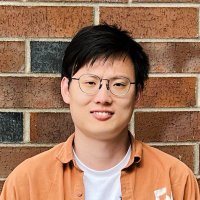

@weiting_nlp

Ph.D. student at @jhuclsp, Student Researcher @AIatMeta | Prev @AIatMeta @Amazon Alexa AI

ID: 1414244140544573442

https://steventan0110.github.io/ 11-07-2021 15:24:25

65 Tweet

173 Followers

269 Following