Theoretical Foundations of Foundation Models

@tf2m_workshop

Workshop on Theoretical Foundations of Foundation Models @icmlconf 2024.

ID: 1776030152788758528

https://sites.google.com/view/tf2m 04-04-2024 23:33:59

Excited to share the program and list of accepted papers for our ICML Conference workshop Theoretical Foundations of Foundation Models: sites.google.com/view/tf2m/sche… Looking forward to discussing efficiency, responsibility, and principled foundations of foundation models in Vienna soon!

Check out the list of accepted papers and program for our ICML Conference workshop Theoretical Foundations of Foundation Models: sites.google.com/view/tf2m/sche…

📢 Excited to share our work "Active Preference Optimization for Sample Efficient RLHF", accepted at the #ICML2024 Theoretical Foundations of Foundation Models Workshop! Joint work with Sayak Ray Chowdhury, Souradip Chakraborty, and Aldo Pacchiano. arxiv.org/pdf/2402.10500 🧵(1/6)

Also giving a contributed talk on the learning-theoretic complexity and optimality of ICL at the Theoretical Foundations of Foundation Models workshop. Happy to share our results with the ML theory and LLM community!

We will be presenting this work tomorrow at the Theoretical Foundations of Foundation Models workshop in Vienna. Please feel free to come check it out or to DM/email me if interested. Looking forward to it! Tagging co-authors that are on this app :) Andrea Banino, @_joaogui1, Petar Veličković 👓 👓 👓

"The great ICML poster bingo" makes a triumphant return on the last day of #ICML2024 🎲 Six posters, three workshops (GRaM Workshop at ICML 2024, Workshop on Data-centric Machine Learning Research, Theoretical Foundations of Foundation Models) and this time I don't have to present three at once 😅 Hope to see you there for some of them! More details below! 🧵

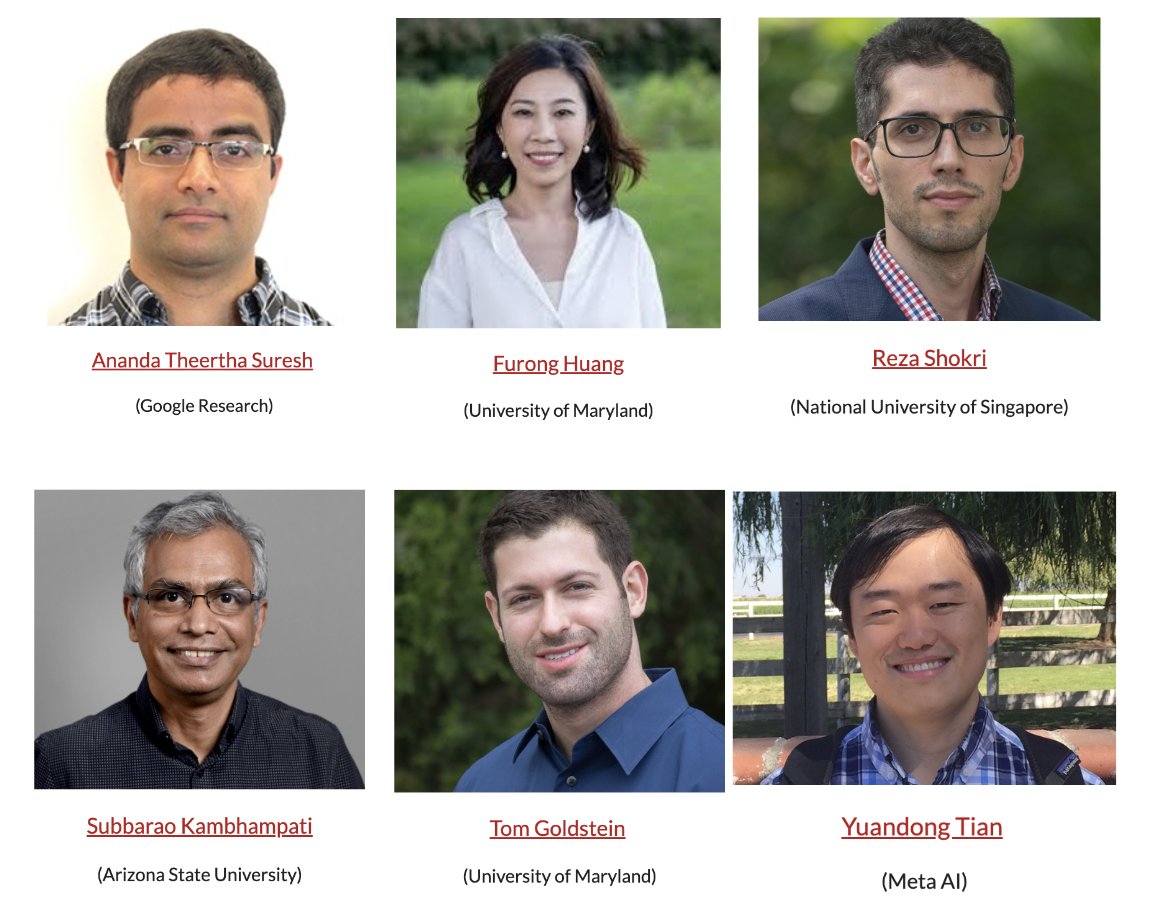

Our panel will start at 4:20 pm with amazing panelists: Ananda Theertha Suresh, Furong Huang, Reza Shokri, Subbarao Kambhampati (కంభంపాటి సుబ్బారావు), Tom Goldstein, and Yuandong Tian.

A small sample of ICML workshop (GRaM Workshop at ICML 2024, Theoretical Foundations of Foundation Models) presentations by Shiye, Katarina Petrovic, and Federico Barbero -- great job all! 🚀 I also contributed a bit but have no pics so far 👀

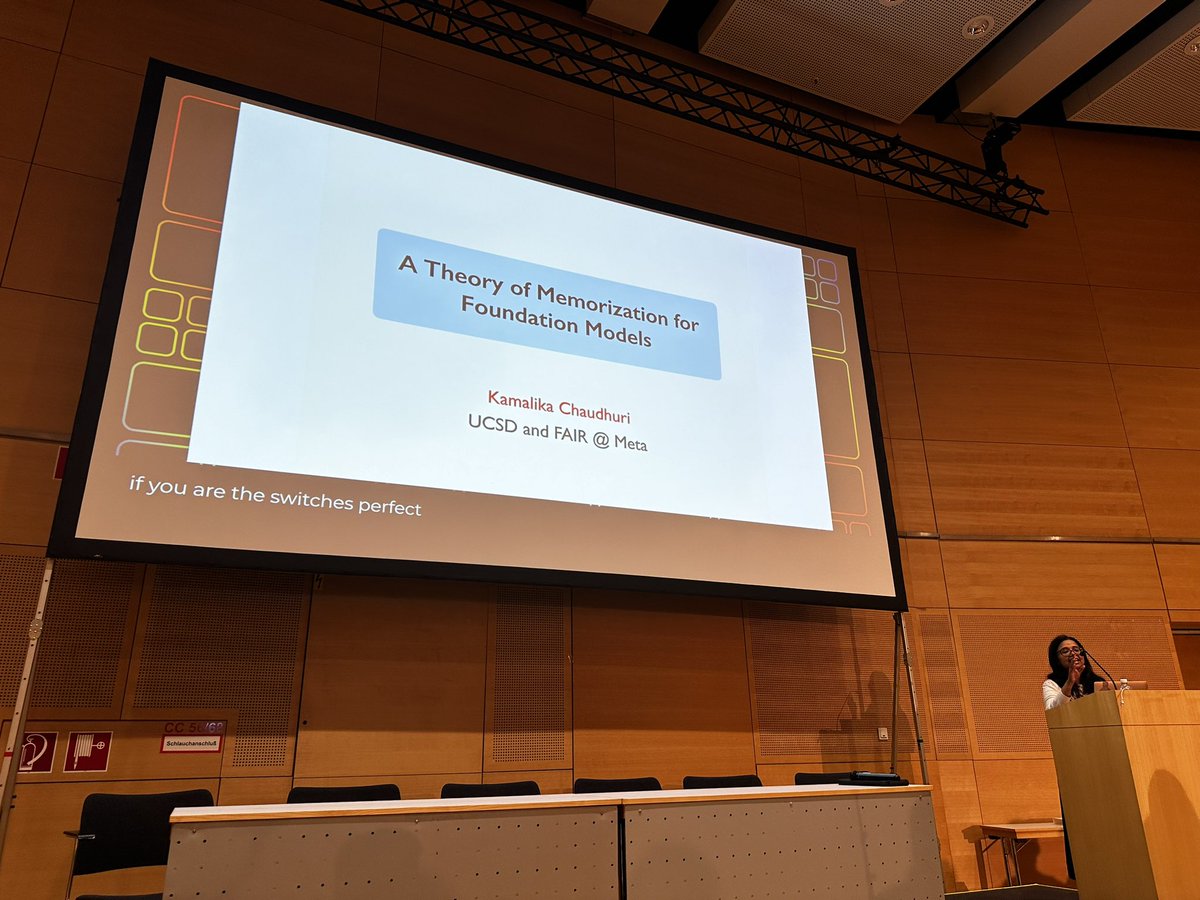

Kamalika Chaudhuri’s talk is happening now in Straus 2! Kamalika Chaudhuri Theoretical Foundations of Foundation Models

It was a really fun ICML Conference workshop with amazing speakers, panelists, poster presentations, and a highly engaged audience! Theoretical Foundations of Foundation Models

It was very fun participating in the Theoretical Foundations of Foundation Models workshop. A big thanks to the organizers Berivan Isik, Ziteng Sun, Banghua Zhu, Enric Boix-Adsera, Merve Gurel, Bo Li, Ahmad Beirami, and Sanmi Koyejo!

Missed the speaker dinner, but the panel at the Theoretical Foundations of Foundation Models workshop at #ICML2024 was a blast. Particularly liked the audience question about the implications of benchmark culture in LLM evaluations.

Thanks for inviting me, Theoretical Foundations of Foundation Models! I am surprised to see such a large audience for an invited talk at a theoretical workshop! Maybe it is time to understand the models better in addition to blindly scaling up.

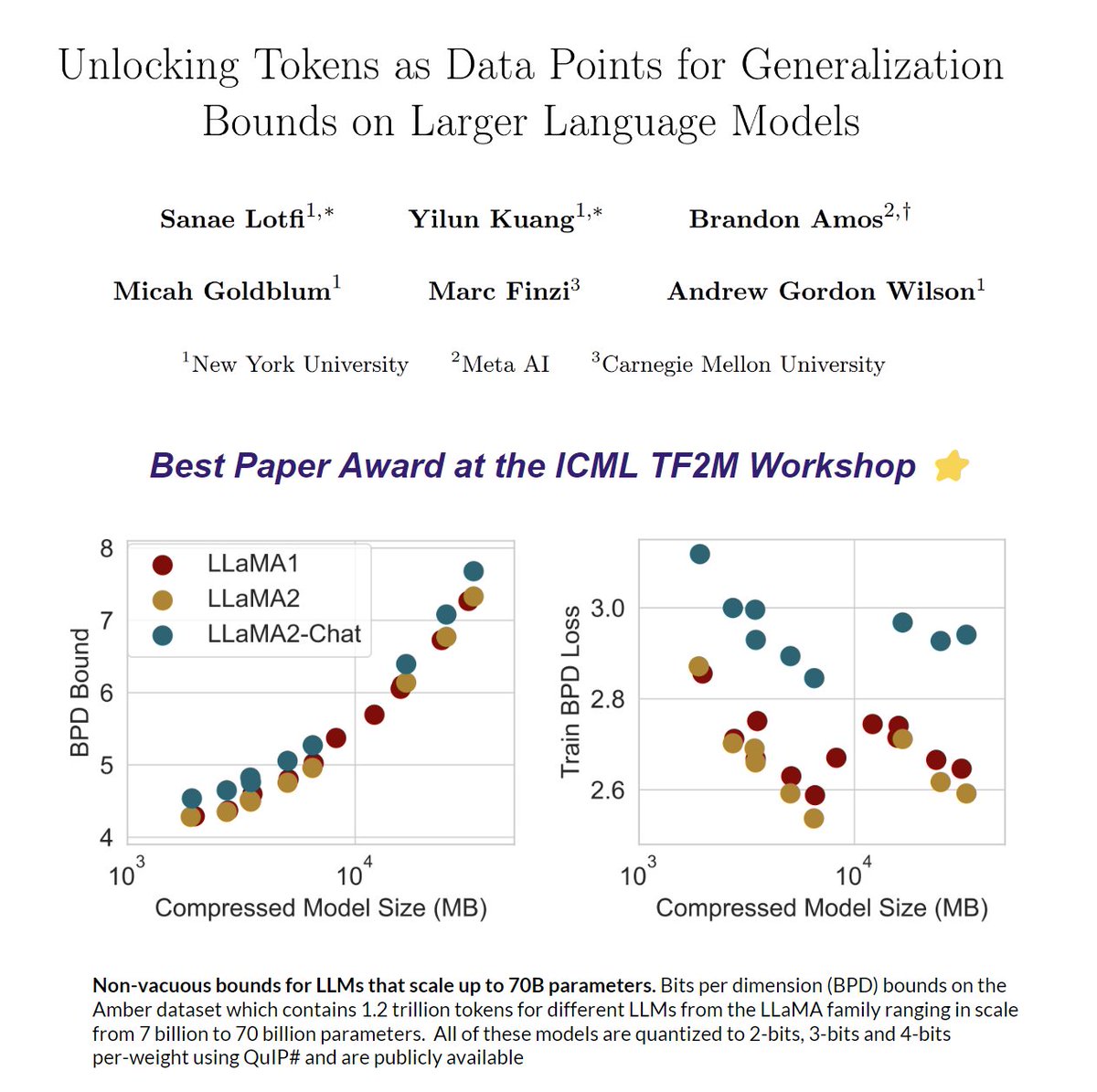

Excited and honored that our new work on token-level generalization bounds for LLMs won a Best Paper Award at the Theoretical Foundations of Foundation Models workshop at ICML! We investigate generalization in LLMs, e.g., memorization vs. reasoning, through compression bounds at the LLaMA2-70B scale. A 🧵, 1/8