Alek Dimitriev

@tensor_rotator

Inference @Anthropic, prev Gemini @Google, prev prev PhD @UTAustin

ID: 727974502404083712

http://alekdimi.github.io 04-05-2016 21:33:41

377 Tweets

309 Followers

1.1K Following

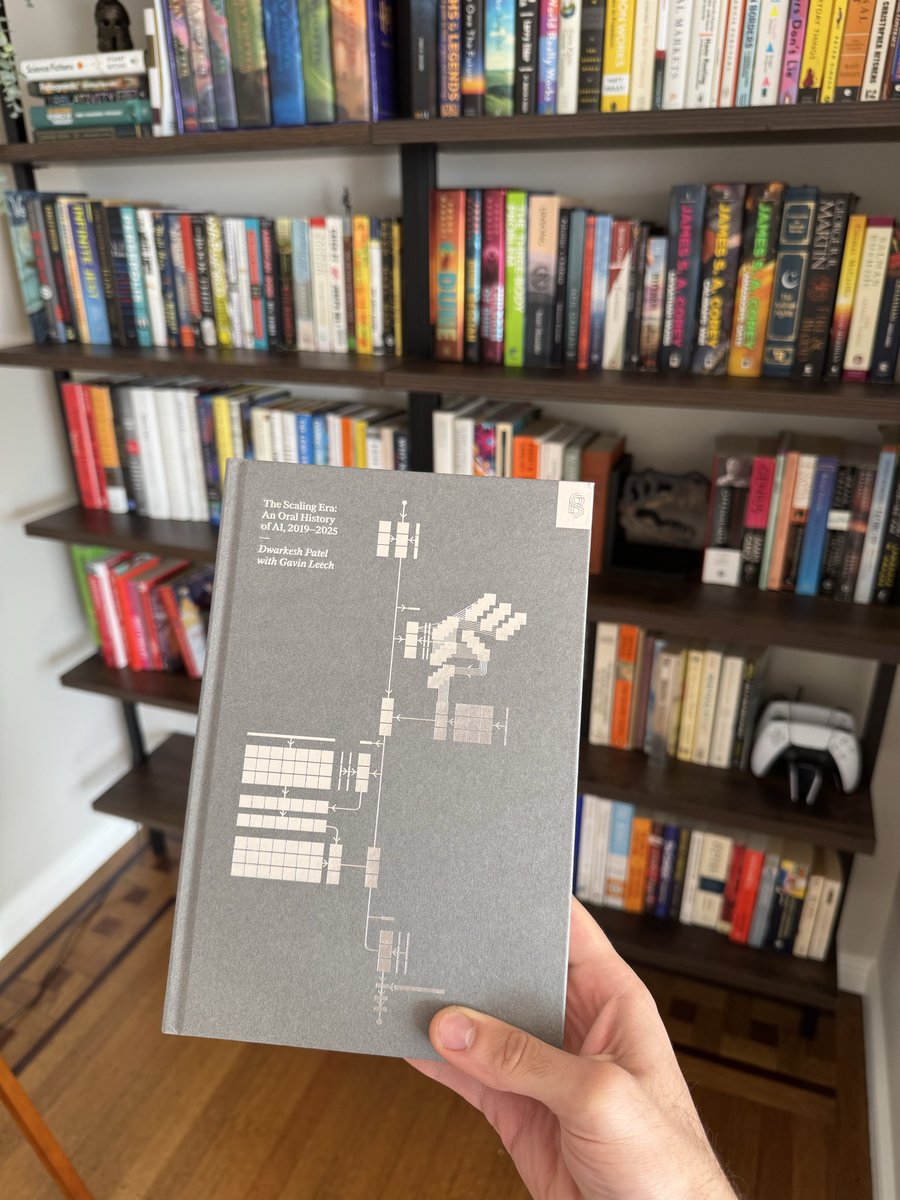

What is intelligence? What will it take to create AGI? What happens once we succeed? The Scaling Era: An Oral History of AI, 2019–2025, by Dwarkesh Patel and Gavin Leech, explores the questions animating those at the frontier of AI research. It's out today: press.stripe.com/scaling

The Scaling Era is out today. I'm actually surprised by how well this format works; it's even better than I expected. It's so interesting to read, side by side, how hyperscaler CEOs, AI researchers, and economists answer the same question. Thank you to the Stripe Press