Michael Ryoo

@ryoo_michael

prof. with Stony Brook Univ. / research scientist with Salesforce AI Research

ID: 1448359208769015811

http://michaelryoo.com/

Joined 13-10-2021 18:45:42

35 Tweets

333 Followers

68 Following

"Diffusion Illusions: Hiding Images in Plain Sight" received the #CVPR2023 Outstanding Demo Award. diffusionillusions.com. Congratulations, Ryan Burgert, Kanchana Ranasinghe, and Xiang Li!

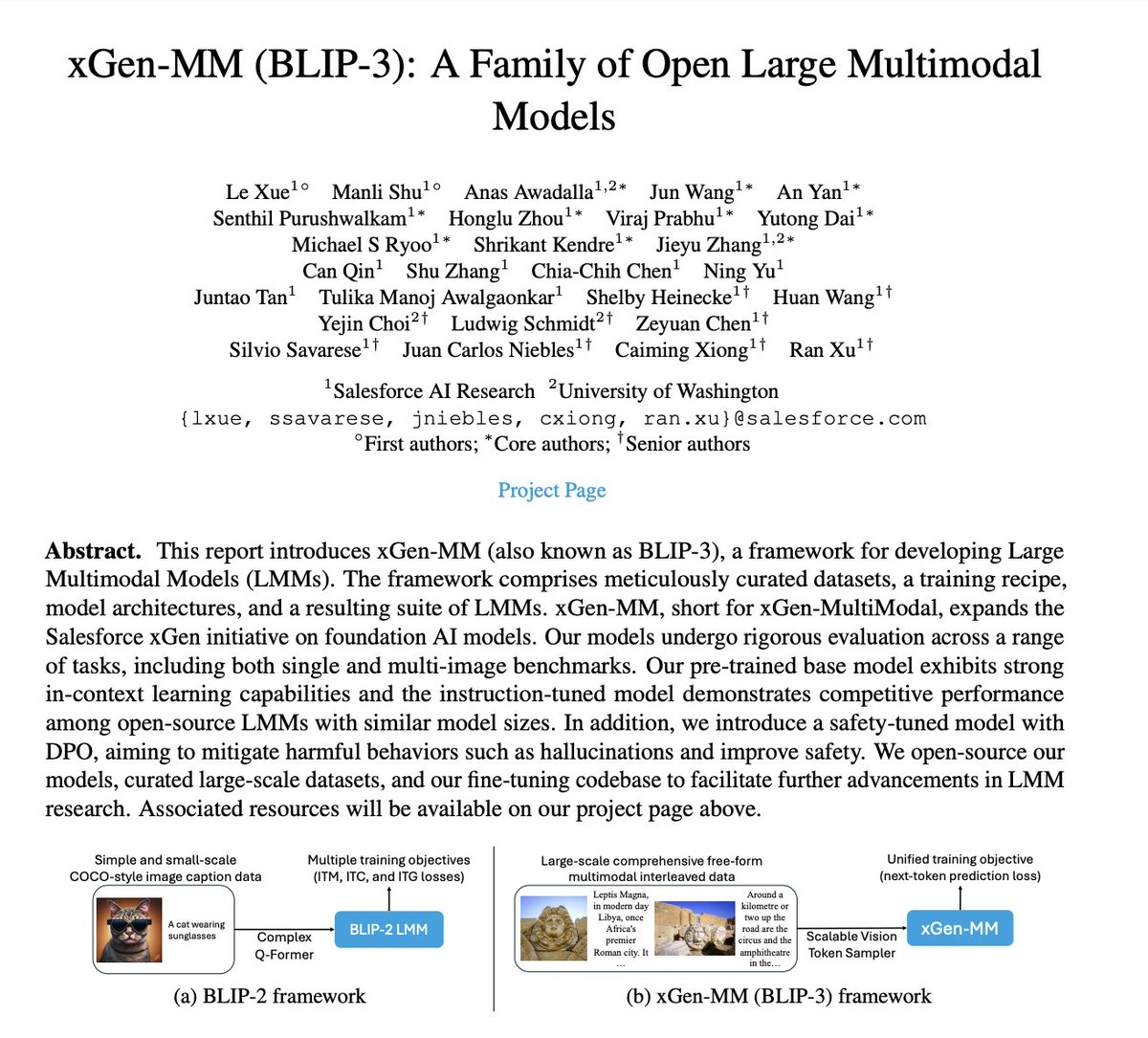

🚨🎥🚨🎥🚨 xGen-MM-Vid (BLIP-3-Video) is now available on Hugging Face! Our compact VLM achieves SOTA performance on video understanding with just 32 tokens. It features an explicit temporal encoder plus the BLIP-3 architecture. Try it out! 🤗32 Token Model: bit.ly/3PBNBBz 🤗128