Mete Tuluhan Akbulut

@metetuluhan

CS PhD student at Brown. Member of the Intelligent Robot Lab @BrownBigAI. Previously: member of @ColorsLab_BOUN at Bogazici University.

ID: 1477748251

02-06-2013 16:56:10

103 Tweets

141 Followers

451 Following

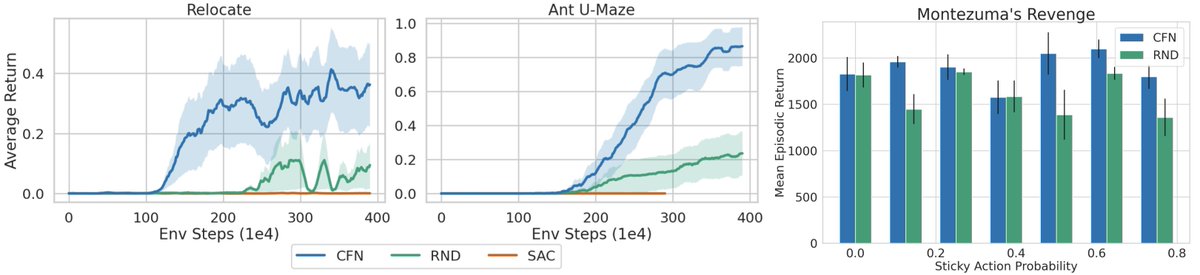

📢 Our paper on exploration for deep RL has been accepted as an oral at ICML! 🎉 Count-based exploration enjoys strong theoretical foundations, yet it has been overshadowed by prediction-error methods like RND. Our embarrassingly simple idea could scale pseudocounts ⬇️ #ICML2023

The work of our PhD student Alper received the Best Paper Award at SİU2023. Congratulations, Alper Ahmetoglu! Boğaziçi University, Department of Computer Engineering

Can robots learn data-efficient world models for object manipulation? Our new paper, which won "Best Paper" at the RINO Workshop at the Conference on Robot Learning, shows how robots can learn object-centric world models from just a few minutes of interaction data. 🧵1/7