Lakshya A Agrawal

@lakshyaaagrawal

AI PhD @ UC Berkeley | Past: AI4Code Research Fellow @MSFTResearch | Summer @EPFL | Maintainer of aka.ms/multilspy | Hobbyist Saxophonist

ID: 2256125125

https://lakshyaaagrawal.github.io

Joined 21-12-2013 07:13:00

620 Tweets

475 Followers

1.1K Following

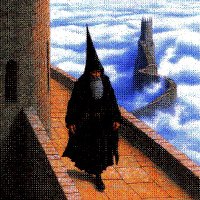

Replying to Matt Pocock, muzz, Omar Khattab, Storm, DSPy: One of the cool prompt optimization results I saw around GEPA was that a prompt telling the model it was a StarCraft player made it outperform every other specialized prompt on math olympiad problems.

Replying to Matt Pocock, Maxime Rivest 🧙‍♂️🦙🐧, DSPy: The docs for GEPA (a recently added optimizer) have an example of an LLM-as-a-judge metric: dspy.ai/tutorials/gepa…

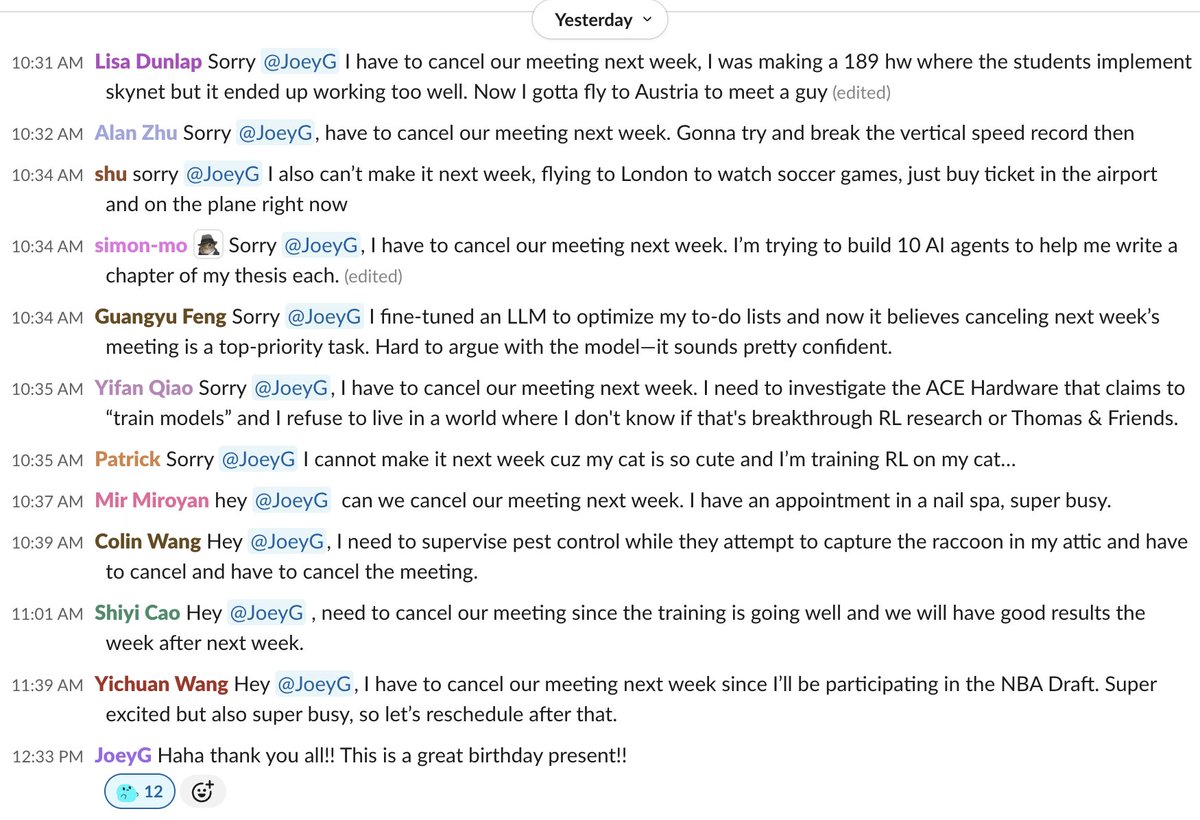

Don't know what to get your advisor for their birthday? Give them the best gift of all: their time back. Happy (late) birthday Joey Gonzalez :)

Replying to Drew Breunig: I performed some experiments last week using GEPA with DSPy on llama3.1:8b and Qwen3:8b, and also on locally hosted gpt-oss:20b/120b, on an M4 Max with 128 GB of RAM. Source: github.com/SuperagenticAI… Blog: super-agentic.ai/resources/supe… The code is open source but uses the SuperOptiX (proprietary) framework.

Replying to Sudhir Gajre, Drew Breunig, DSPy: Not as simple an example as Drew's, but the docs linked below show how to use it. Welcome to the world of DSPy! dspy.ai/tutorials/gepa…

Replying to Kevin Madura, Sudhir Gajre, DSPy: If you'd like more grounding first, you can check out my intro: dbreunig.com/2024/12/12/pip…

Thanks Connor Shorten for creating this amazing tutorial on using GEPA to optimize a listwise reranker! youtube.com/watch?v=H4o7h6…