Julien Chaumond

@julien_c

Co-founder and CTO at @huggingface 🤗. ML/AI for everyone, building products to propel communities fwd. @Stanford + @Polytechnique

ID: 16141659

https://huggingface.co 05-09-2008 07:56:27

16.16K Tweets

58.58K Followers

1.1K Following

That's one priceless and iconic shot. Get it framed, Burkay Gur!!!

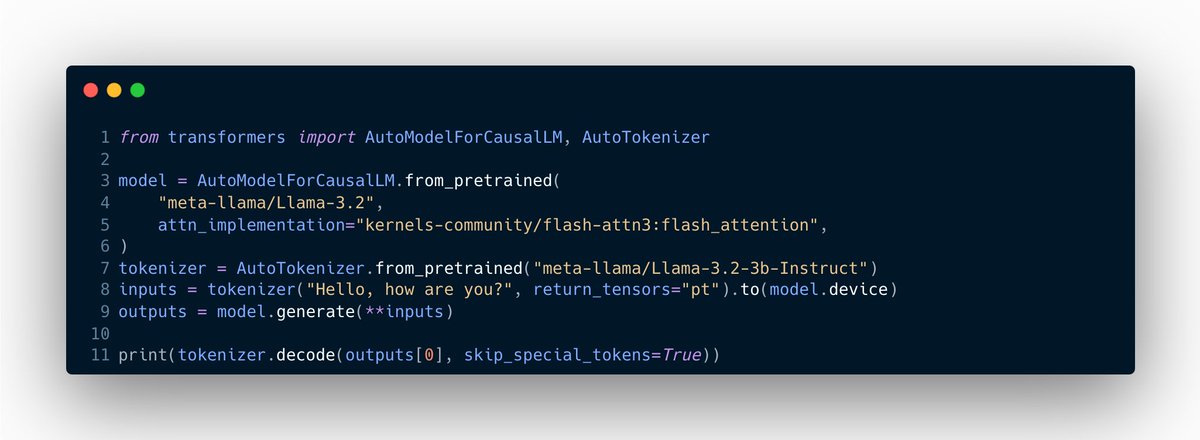

This is not simply a speed-up, but also a memory reduction: run bigger models, faster. We're working with kernel providers like Unsloth AI, Liger Kernel, Red Hat AI, vLLM, ggml and others so that kernels they build are reused across runtimes, from their Hub org.

With the latest release, I want to make sure I get this message to the community: we are listening! At Hugging Face we are very ambitious, and we want `transformers` to accelerate the ecosystem and enable all hardware / platforms! Let's build AGI together 🫣 Unbloat and Enable!

works immediately out of the box. Hugging Face and the open llm ecosystem don't get enough credit for this.

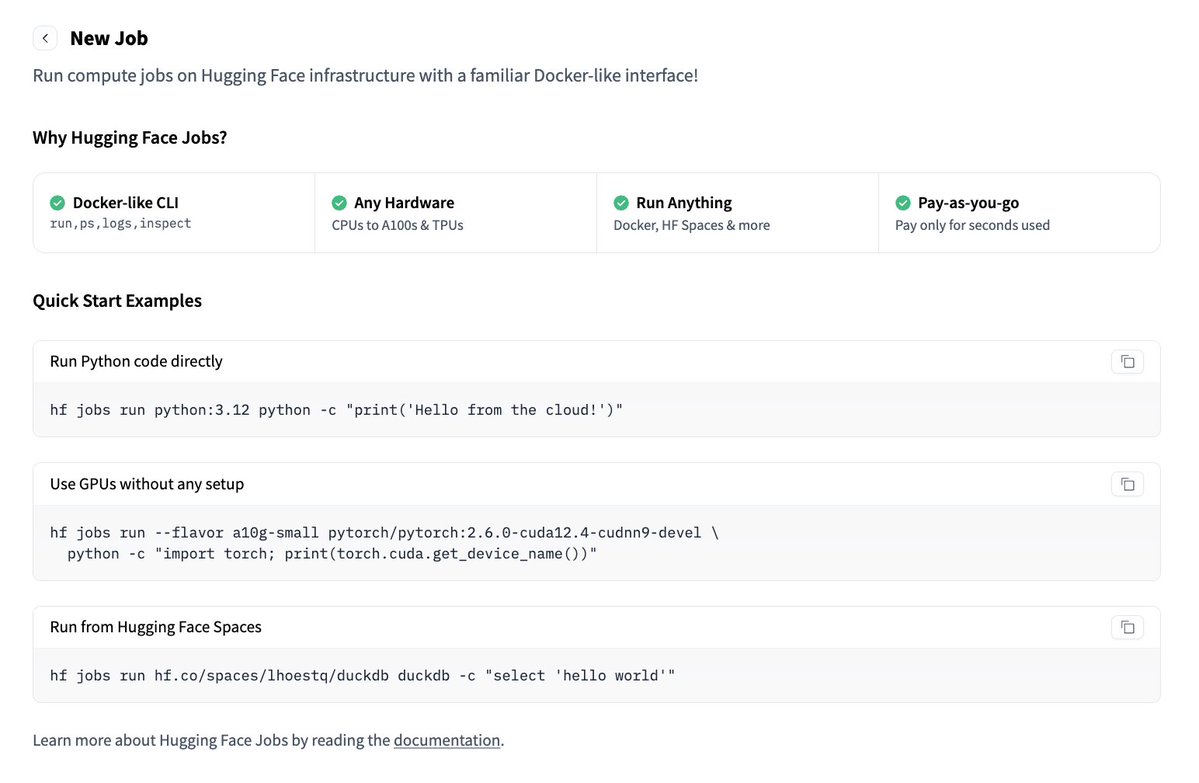

Announcing 🤗hf jobs 🚨 Tools + CLI to run compute jobs on Hugging Face infra. For training, fine-tuning, for your own scripts... Select any GPU. Run many jobs in parallel. Compatible with `uv`. Pay as you go. Try it today: pip install -U huggingface_hub
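
For anyone who wants to script this, here is a rough sketch of how an `hf jobs run` command line might be assembled from Python. It only builds and prints the command and runs nothing. The helper name `build_hf_job_command`, the `python:3.12` image and the `a10g-small` flavor are illustrative assumptions, not part of the announcement; check `hf jobs run --help` for the options your installed version supports.

```python
import shlex


def build_hf_job_command(image, command, flavor=None):
    """Return the argv list for an `hf jobs run` call (hypothetical helper).

    Nothing is executed here; the caller decides whether to pass the
    list to subprocess.run or just print it.
    """
    argv = ["hf", "jobs", "run"]
    if flavor is not None:
        # Hardware selection; the flavor name is an assumption for illustration.
        argv += ["--flavor", flavor]
    argv.append(image)      # Docker image the job runs in
    argv.extend(command)    # command executed inside the image
    return argv


if __name__ == "__main__":
    argv = build_hf_job_command(
        "python:3.12",
        ["python", "-c", "print('hello')"],
        flavor="a10g-small",
    )
    # Print a shell-safe version of the command for copy/paste.
    print(shlex.join(argv))
```

Keeping the command as a list rather than a string avoids quoting problems if it is later handed to `subprocess.run`.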

How much are you using Hugging Face's CLI? Mostly to upload and download models and datasets? We just revamped it (welcome to `hf`!) and added the capability to run jobs directly on our infra. Useful?

Jan v0.6.6 is out: Jan now runs fully on llama.cpp.
- Cortex is gone, local models now run on Georgi Gerganov's llama.cpp
- Toggle between llama.cpp builds
- Hugging Face added as a model provider
- Hub enhanced
- Images from MCPs render inline in chat

Update Jan or grab the latest.