Eran Hirsch

@hirscheran

PhD candidate @biunlp ; Tweets about NLP, ML and research

ID: 2796071287

https://eranhirs.github.io/ 07-09-2014 14:32:29

830 Tweets

288 Followers

636 Following

Slides for my lecture “LLM Reasoning” at Stanford CS 25: dennyzhou.github.io/LLM-Reasoning-… Key points: 1. Reasoning in LLMs simply means generating a sequence of intermediate tokens before producing the final answer. Whether this resembles human reasoning is irrelevant. The crucial

BIU NLP Itai Mondshine Reut Tsarfaty Our paper "Beyond N-Grams: Rethinking Evaluation Metrics and Strategies for Multilingual Abstractive Summarization": arxiv.org/pdf/2507.08342

Are you still around Vienna? Come hear about a new morphological task at CoNLL at ~11:20 (hall M.1) Reut Tsarfaty

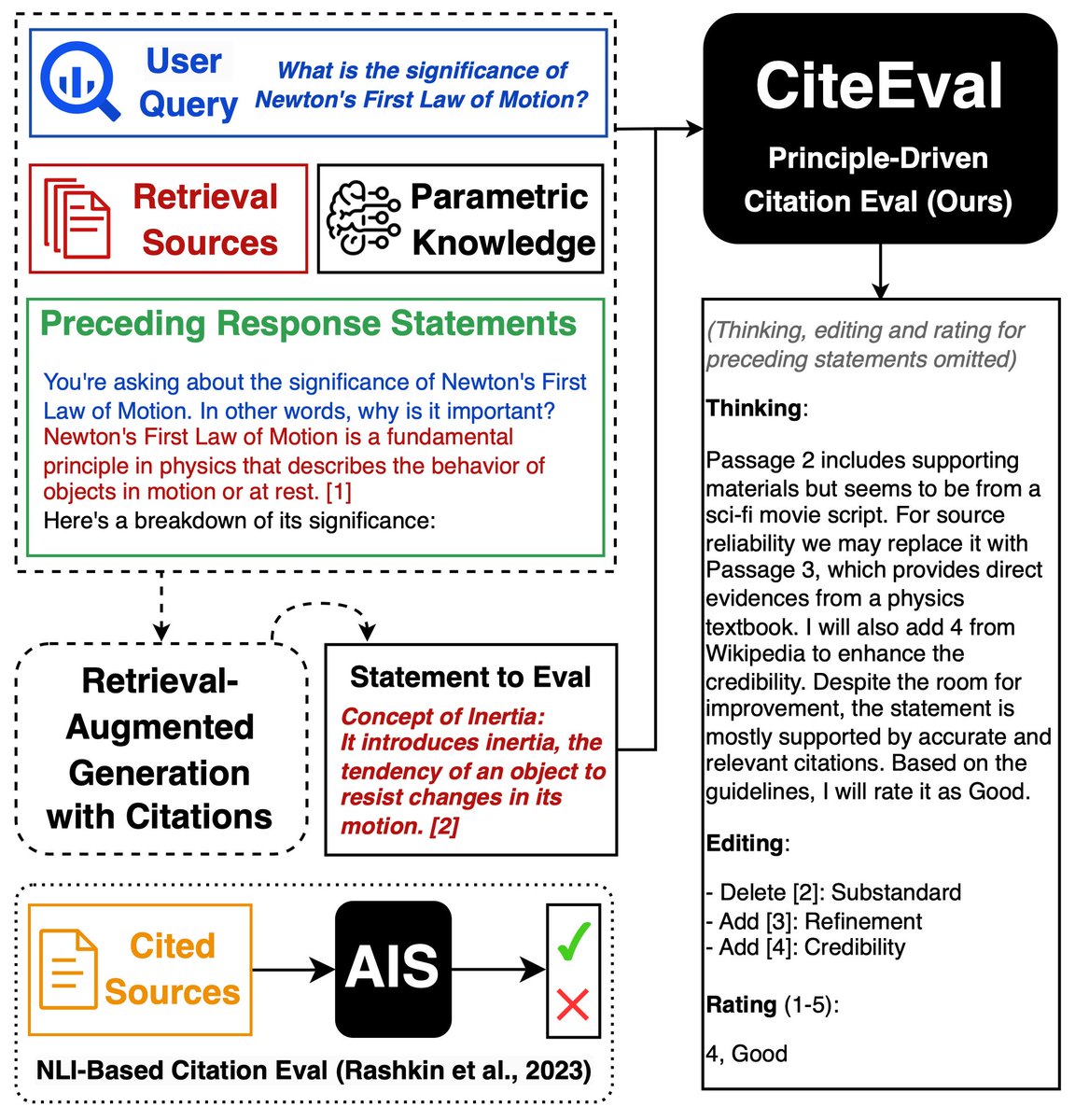

Fan Nie (@fannie1208): How it works? [4/n]

🔹 UQ-Dataset: 500 challenging, diverse unsolved questions sourced from Stack Exchange. They are super hard and arise naturally when humans seek answers.

🔹 UQ-Validator: No ground-truth → traditional metrics fail. Instead, we designed compound validation

![Fan Nie (@fannie1208) on Twitter photo](https://pbs.twimg.com/media/GzSz6WRbwAAxkKl.jpg)