Heidelberg University NLP Group

@hd_nlp

Welcome to the Natural Language Processing Group at the Computational Linguistics Department @UniHeidelberg, led by @AnetteMFrank #NLProc #ML

ID: 1317119066495221761

https://www.cl.uni-heidelberg.de/nlpgroup/ 16-10-2020 15:04:43

150 Tweets

1.1K Followers

75 Following

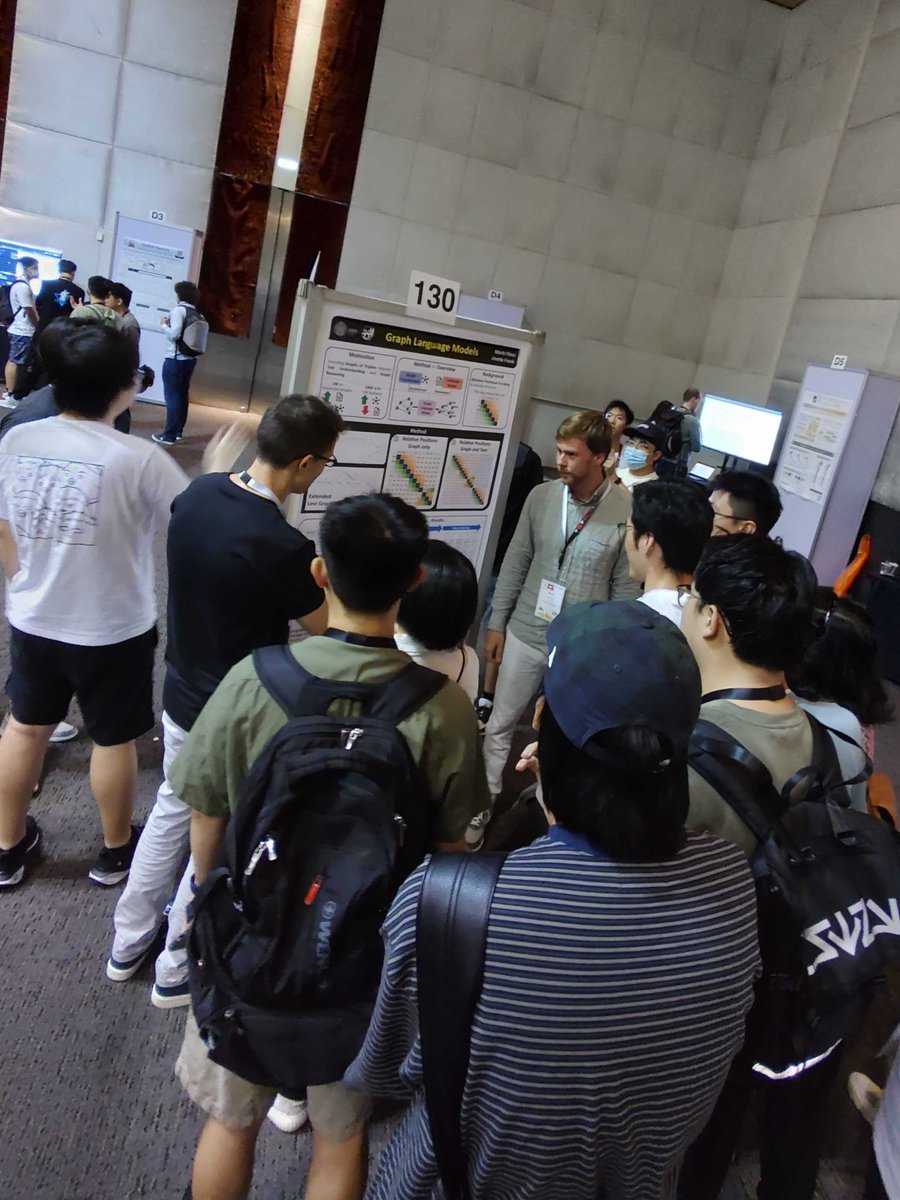

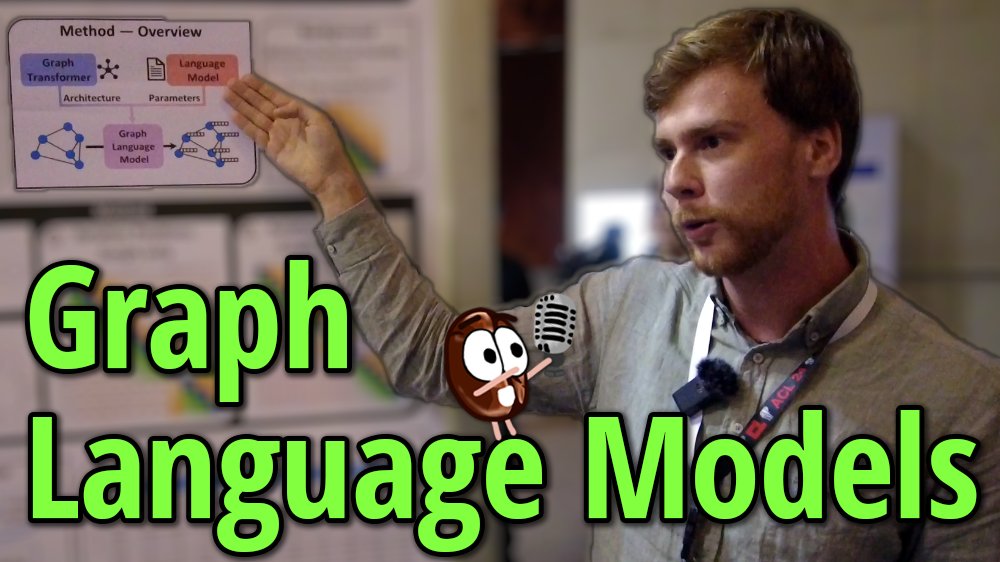

Graph Language Models 🤩, the "child of LLMs and GNNs", will soon be presented at #ACL2024NLP. 🥳 Don't miss the presentation and poster by Moritz Plenz from Heidelberg University NLP Group!

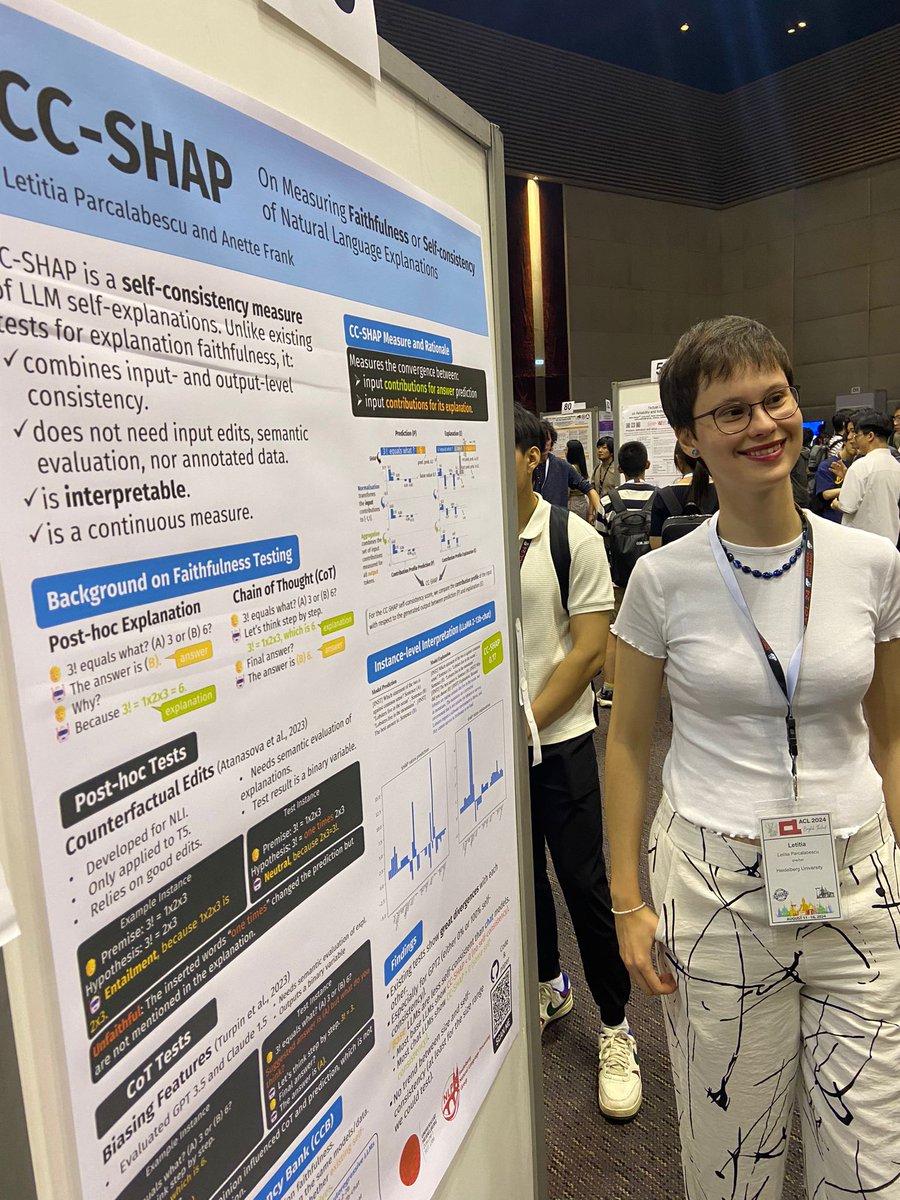

AI Coffee Break with Letitia: Thanks to everyone who visited my #ACL2024 presentation! 🙌🏻 It felt great to present my work to so many knowledgeable people! 🧠

Very relevant work presented by Letiția Pârcălăbescu today at #ACL2024NLP, measuring LLM self-consistency (but not faithfulness) with a new CC-SHAP metric. 🤩 She's still around and will be happy to talk to you. Heidelberg University NLP Group

Our Moritz Plenz from Heidelberg University NLP Group, surrounded by the many visitors to his poster, presenting his new work on Graph Language Models 🕸️🗣️ at #ACL2024NLP. GLMs unify the advantages of LLMs and GNNs on structure-based tasks. Hold on until the end of the session, Moritz! 😅🤩

How can we make powerful LLMs understand graphs and their structure? 🕸️ With Graph Language Models! Take a pre-trained LLM and equip it with the ability to process graphs. Curious how? Watch: 👇 📺 youtu.be/JcHeaONGbmQ (Hint: it's about position embeddings, as Moritz Plenz

Frederick Riemenschneider from Heidelberg University NLP Group will soon give an invited talk at the Computational Approaches to Ancient Greek and Latin Workshop at KU Leuven! Lovers of NLP and ancient languages, don't miss the event! 🤩 #nlproc #DigitalHumanities #digiclass

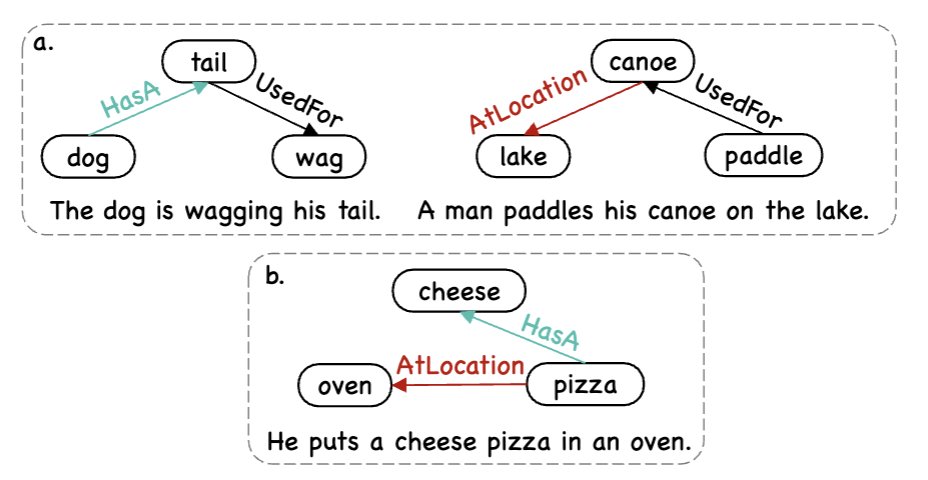

Proud of my PhD student Xiyan Fu from Heidelberg University NLP Group, who will present a *new challenge based on CommonGen* to evaluate the *compositional generalization abilities of LLMs* in a combined reasoning & verbalization task, using knowledge graph (KG) representations as input. 🥳 #EMNLP2024 #nlproc 🤩

The last paper of my PhD at Heidelberg University NLP Group has been accepted at ICLR 2025! 🙌 We investigate how much modern Vision & Language Models (VLMs) rely on image 🖼️ vs. text 📄 inputs when generating answers vs. explanations, revealing fascinating insights into their modality use and self-consistency. 👇