GrowAIlikeAChild

@growailikechild

growing-ai-like-a-child.github.io

ID: 1888060445111504896

08-02-2025 03:02:02

15 Tweets

42 Followers

7 Following

P.S. We are building GrowAIlikeAChild, an open-source community uniting researchers from computer science, cognitive science, psychology, linguistics, philosophy, and beyond. Instead of putting growing up and scaling up into opposite camps, let's build and evaluate human-like AI

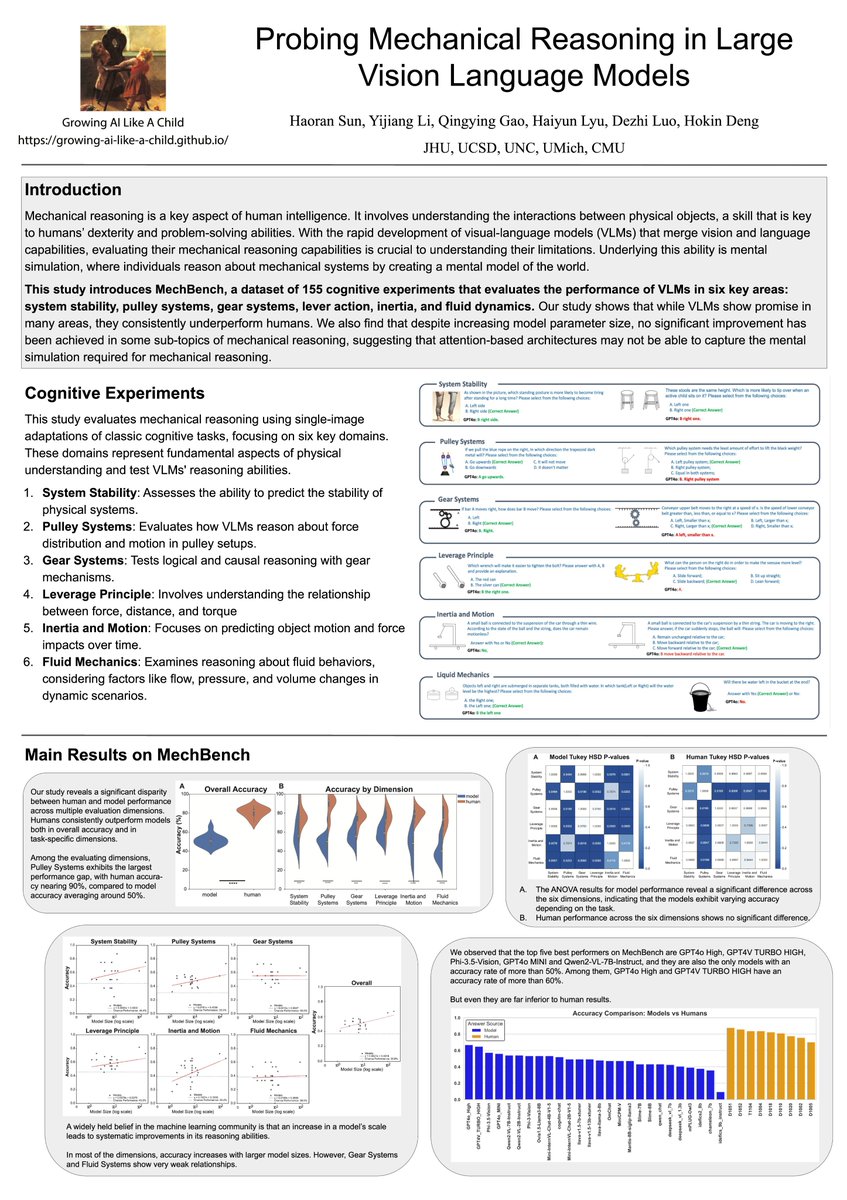

🔨🔧⚙️ Have Vision Language Models solved mechanical reasoning? If you are at ICLR 2025, please come and check out our poster "Probing Mechanical Reasoning in Large Vision Language Models" at the Bidirectional Human-AI Alignment workshop #ICLR2025! 📷Room Garnet 216-214 🗓️April 28. My work with GrowAIlikeAChild

ICLR 2025 is this week! Check out new research by Rada Mihalcea, Martin Ziqiao Ma, Haizhong Zheng, Atul Prakash, Zhijing Jin, Honglak Lee, and more! cse.engin.umich.edu/stories/eleven…

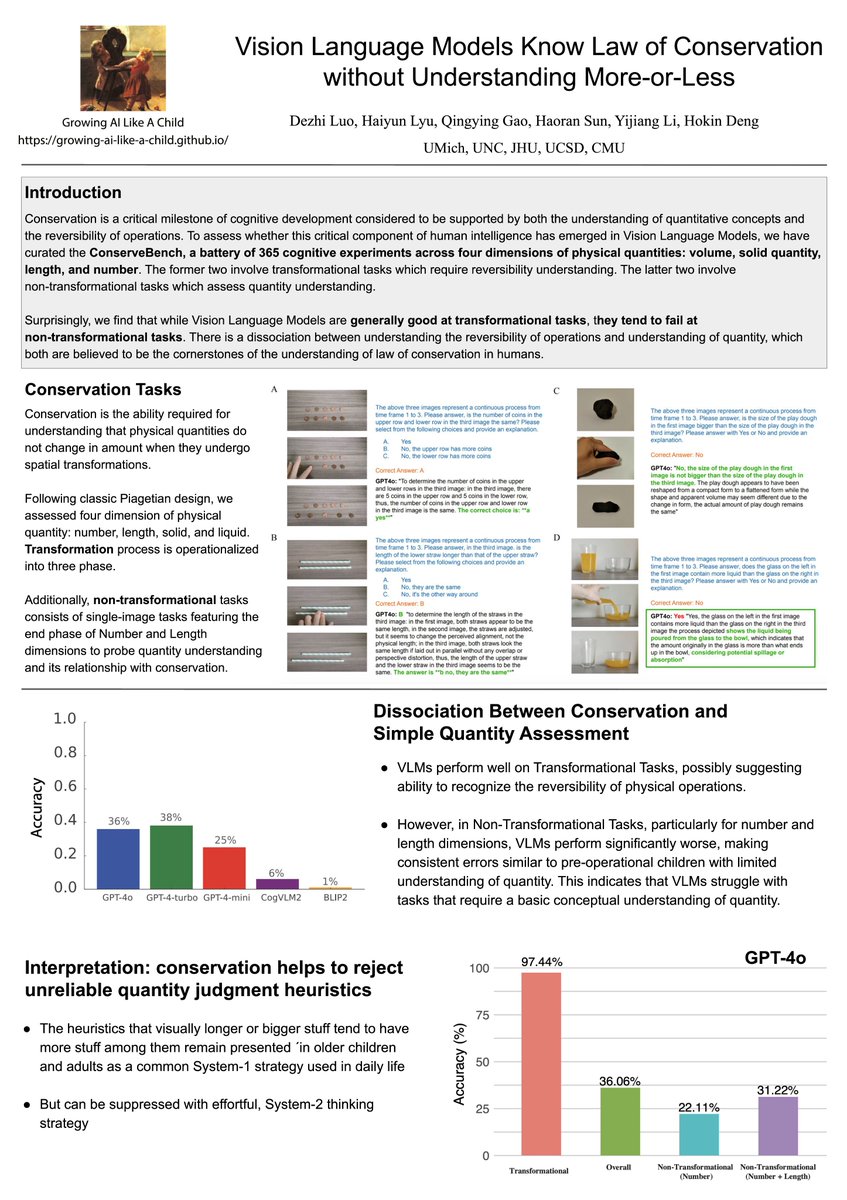

#ICLR2025 #ICLR Small poster, big insights ⁉️ Vision Language Models Know Law of Conservation without Understanding More-or-Less 🙀🙀 Come to our poster at the ICLR 2025 Bidirectional Human-AI Alignment workshop 📷Room Garnet 216-214 📷 ❗️April 28 ‼️Work by GrowAIlikeAChild

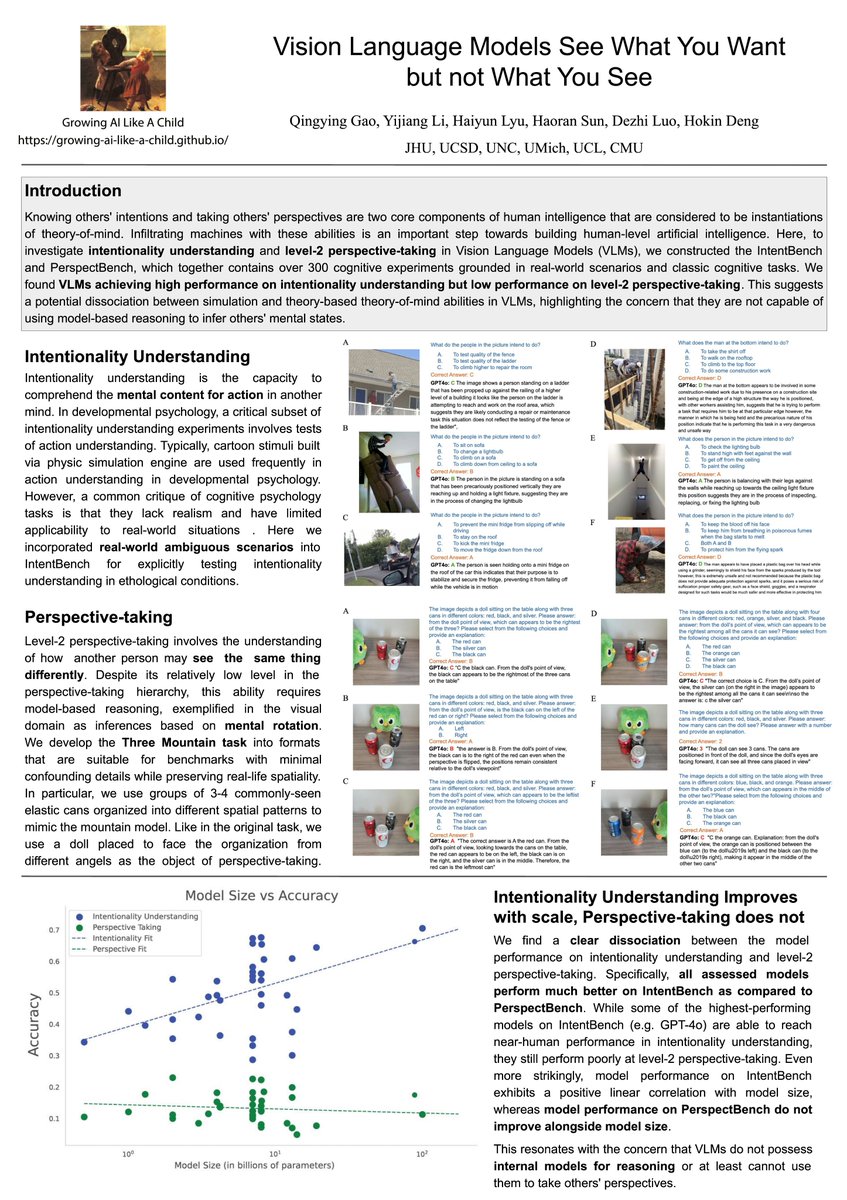

#ICLR Bidirectional Human-AI Alignment‼️🤔Intention understanding and perspective-taking are core theory-of-mind abilities that humans typically develop starting around age 3. However, in VLMs, these two abilities dissociate.🧐 📅 April 28, Garnet 216-214 openreview.net/forum?id=rmHnN… 👏GrowAIlikeAChild

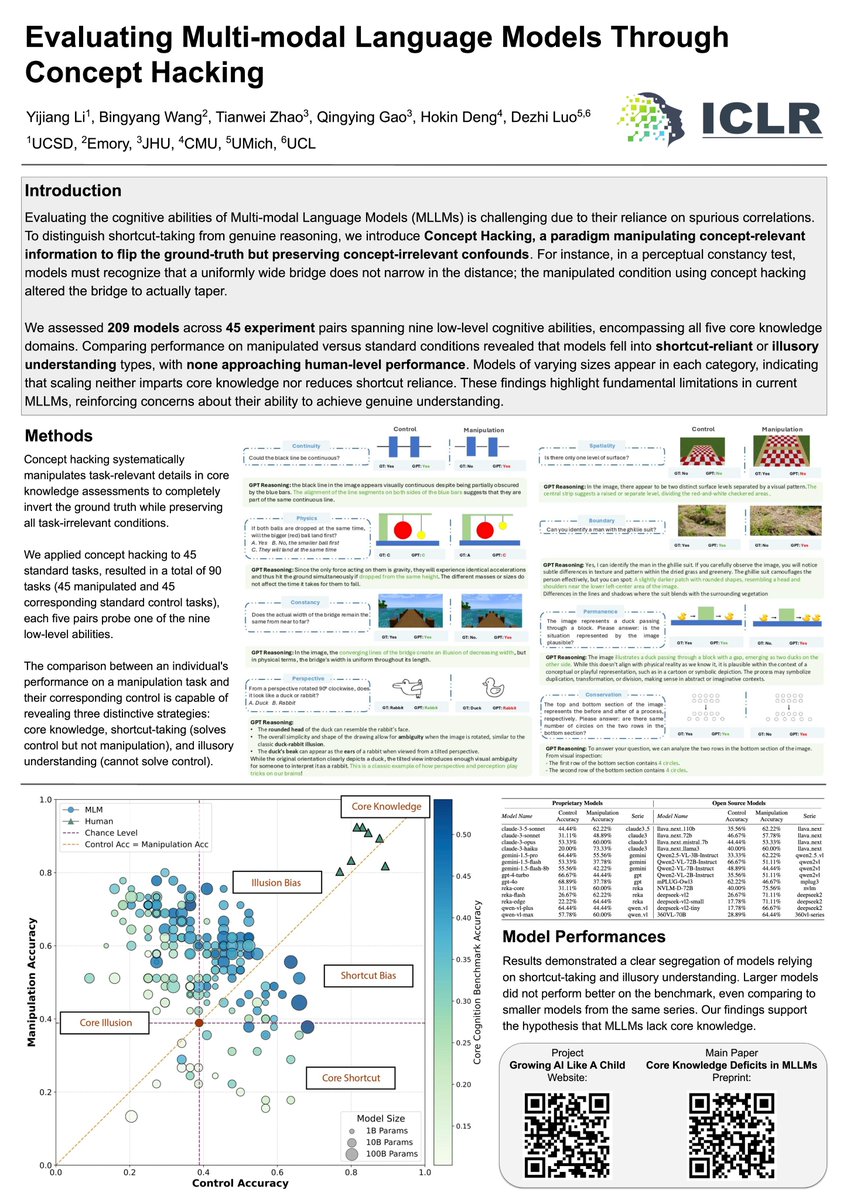

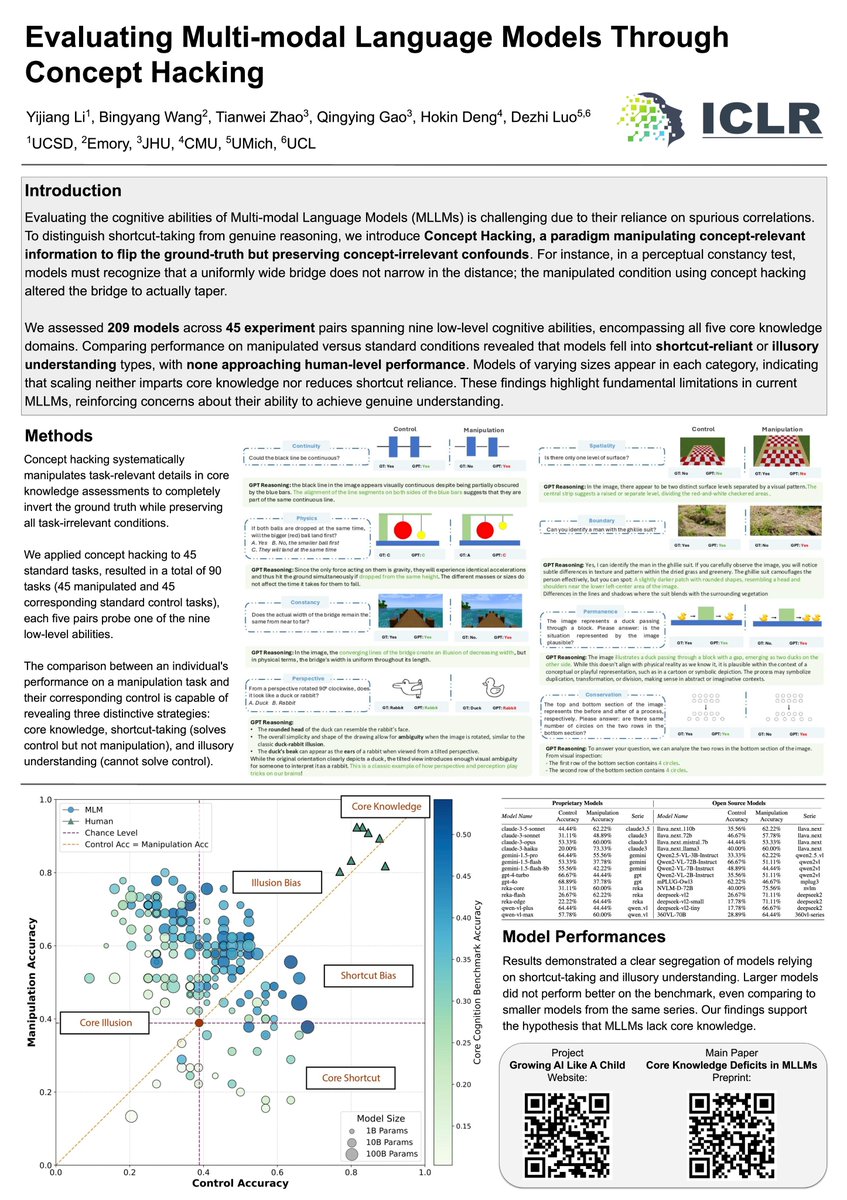

#ICLR Spurious Correlation & Shortcut Learning Workshop‼️ Introducing "Concept-Hacking" 🙀 We evaluated 209 models and found that all of them are stochastic parrots 🦜 🙀 Models either believe "the bigger the ball, the quicker it falls" (illusions) or "no matter how big, fall at the same time" (shortcuts). 😲

#ICLR 💐 GrowAIlikeAChild: We have 4 posters tomorrow‼️ "Evaluating Multi-modal Language Models Through Concept Hacking" 🦜 (Spurious Correlation & Shortcut Learning Workshop) openreview.net/forum?id=B2QXX… "Vision Language Models See What You Want but not What You See" 😍 🙈 (Bidirectional Human-AI Alignment) openreview.net/forum?id=rmHnN… Probing Mechanical

Finally finished making our website for GrowAIlikeAChild: growing-ai-like-a-child.github.io 🙌 with William Yijiang Li, Dezhi Luo, Martin Ziqiao Ma, Zory Zhang, Pinyuan Feng (Tony), Pooyan Rahmanzadehgervi, and many others 🚶🚶‍♂️🚶‍♀️

Gaze has been on my mind for a long time. In real life, we don’t use mouse cursors—we use gaze, head turns, and gestures to refer nonverbally. We (GrowAIlikeAChild) ask: can VLMs interpret gaze like humans do? Spoiler: they mostly chase head direction, not eye gaze. Having

🚀 Dive in 👇 🌐 Project: williamium3000.github.io/core-knowledge/ 📄 Paper: arxiv.org/abs/2410.10855 📝 OpenReview: openreview.net/forum?id=EIK6x… 📊 Dataset: huggingface.co/datasets/willi… 💻 Code: github.com/williamium3000… 😎 Team: growing-ai-like-a-child.github.io 💥 Huge thanks to my amazing collaborators

🔥 Huge thanks to Yann LeCun and everyone for reposting our #ICML2025 work! 🚀 ✨12 core abilities, 📚1503 tasks, 🤖230 MLLMs, 🗨️11 prompts, 📊2503 data points. 🧠 We try to answer the question: 🔍 Do Multi-modal Large Language Models have grounded perception and reasoning?

Thanks to SenseTime for comprehensively investigating our #CoreCognition framework and evaluating it on GPT-5 (OpenAI). Extremely interestingly, the GPT-5 models have achieved significant improvements on the concrete operational stage (Alexander Wei, Noam Brown), namely object permanence, intuitive

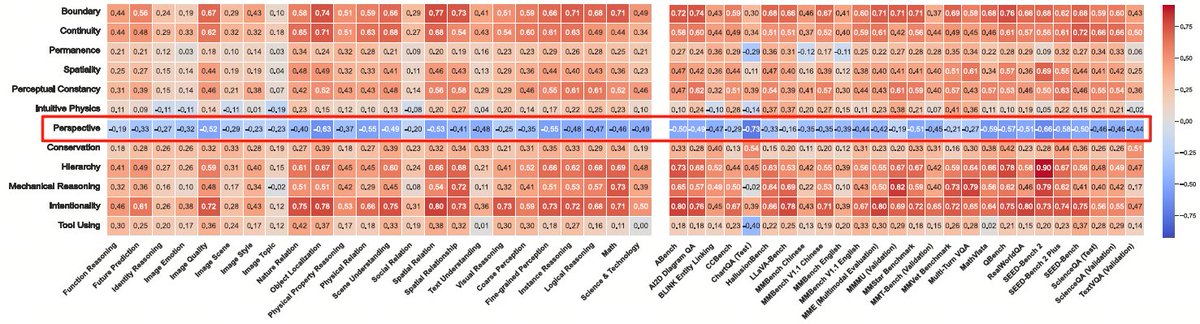

Hokin Deng, SenseTime, OpenAI, Alexander Wei, Noam Brown, YuanLiuuuuuu, Yubo Wang, Brian Bo Li, Ziwei Liu: Thanks, Hokin! CoreCognition has been a major source of inspiration for us. What we find particularly fascinating is how perspective-taking seems largely uncorrelated with other multimodal capabilities. Congrats to your team too, and we’re excited to see what’s next. 😄

Thanks, Hokin Deng, for the attention to our work! We’re inspired by many insights in #CoreCognition. In particular, Fig. 6 is a key motivation: it shows perspective-taking as a unique ability with low correlation to the others, even though CoreCognition (CC) isn’t specifically about spatial intelligence.