EmbeddedLLM

@embeddedllm

Your open-source AI ally. We specialize in integrating LLMs into your business.

ID: 1716394660636295168

23-10-2023 10:02:43

303 Tweets

621 Followers

1.1K Following

We are excited about an open ABI and FFI for ML Systems from Tianqi Chen. In our experience with vLLM, such an interop layer is definitely needed!

vLLM Sleep Mode 😴→ ⚡Zero-reload model switching for multi-model serving. Benchmarks: 18–200× faster switches and 61–88% faster first inference vs cold starts. Explanation Blog by EmbeddedLLM 👇 Why it’s fast: we keep the process alive, preserving the allocator, CUDA graphs,

Wow Quantization-enhanced Reinforcement Learning using vLLM! Great job by Yukang Chen 😃

Amazing work by Rui-Jie (Ridger) Zhu and the ByteDance Seed team — Scaling Latent Reasoning via Looped LMs introduces looped reasoning as a new scaling dimension. 🔥 The Ouro model is now runnable on vLLM (nightly version) — bringing efficient inference to this new paradigm of latent

Happy to meet with EmbeddedLLM people in person! Thanks for all the hardware support in vLLM and LMCache Lab!

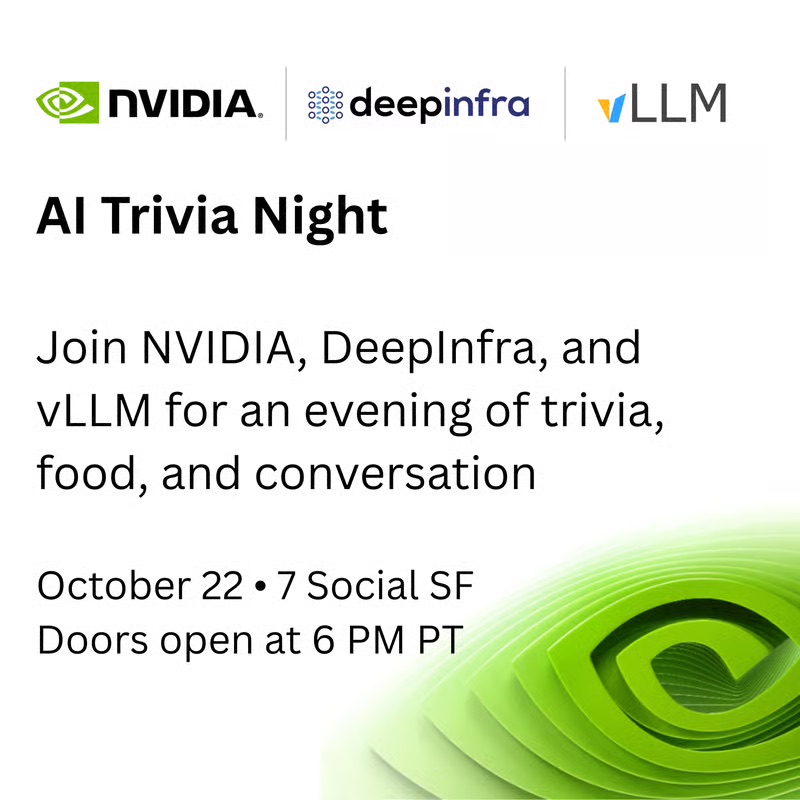

Awesome connecting with the vLLM community in person! Roger Wang, Kuntai Du, Harry Mellor, Simon Mo, Tan TJian, Chendi Xue, Robert Shaw, Brittany Rockwell

Big night at the vLLM × Meta × AMD meetup in Palo Alto 💥 So fun hanging out IRL with fellow vLLM folks Woosuk Kwon and Simon Mo, and the AMD crew Anush Elangovan and Ramine Roane. Bonus: heading home with a signed AMD Radeon AI PRO R9700 to squeeze even more tokens/sec out of AMD