Ella Charlaix

@ellacharlaix

ML Eng @huggingface

ID:3385680719

21-07-2015 10:20:40

14 Tweets

619 Followers

219 Following

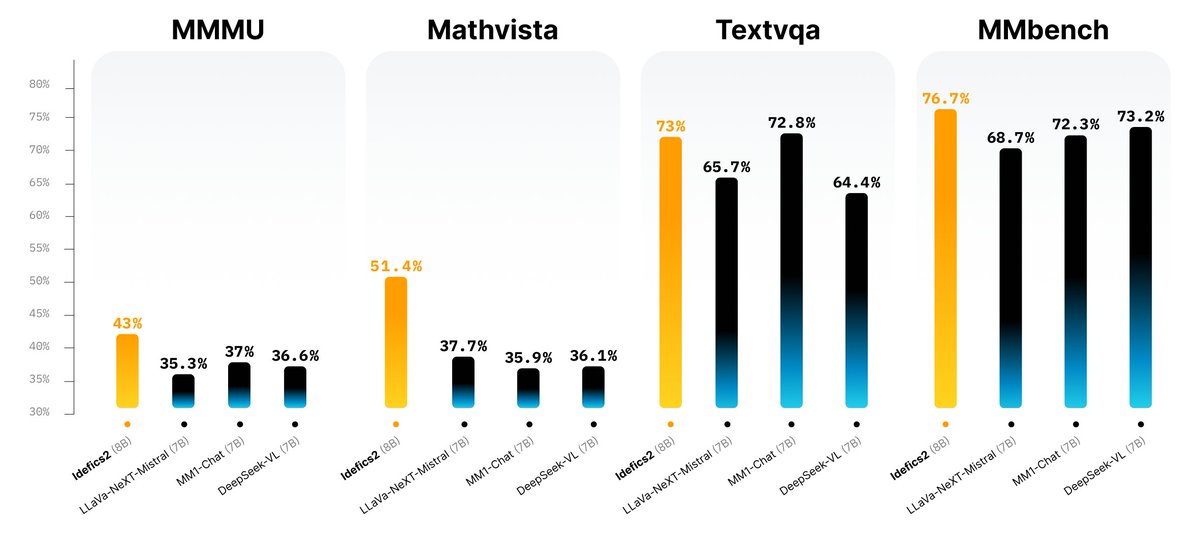

Today we release Idefics2, our newest 8B Vision-Language Model!

💪 With only 8B parameters, Idefics2 is one of the strongest open models out there

📋 We used multiple OCR datasets, including PDFA and IDL from Ross Wightman and Pablo Montalvo, and increased resolution up to 980x980 to improve…

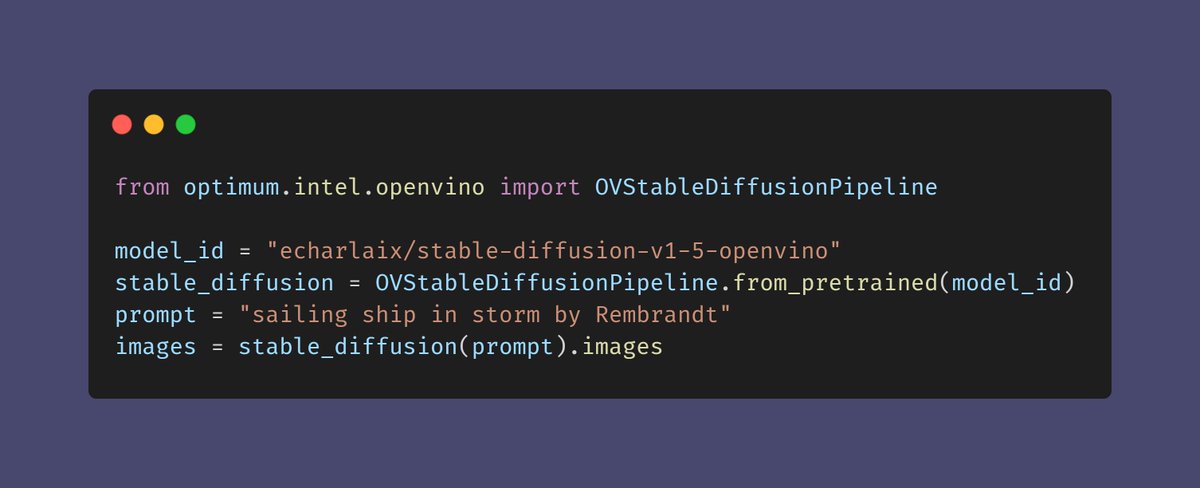

Interested in generating images with Hugging Face models? On @Intel CPUs? In less than 5 seconds? Our new blog post shows you how to optimize diffusion models with Optimum Intel, OpenVINO, IPEX, and more 🚀 🚀🚀

huggingface.co/blog/stable-di…

#GenerativeAI #MLOps #ComputerVision

🏭 The hardware optimization floodgates are open!🔥

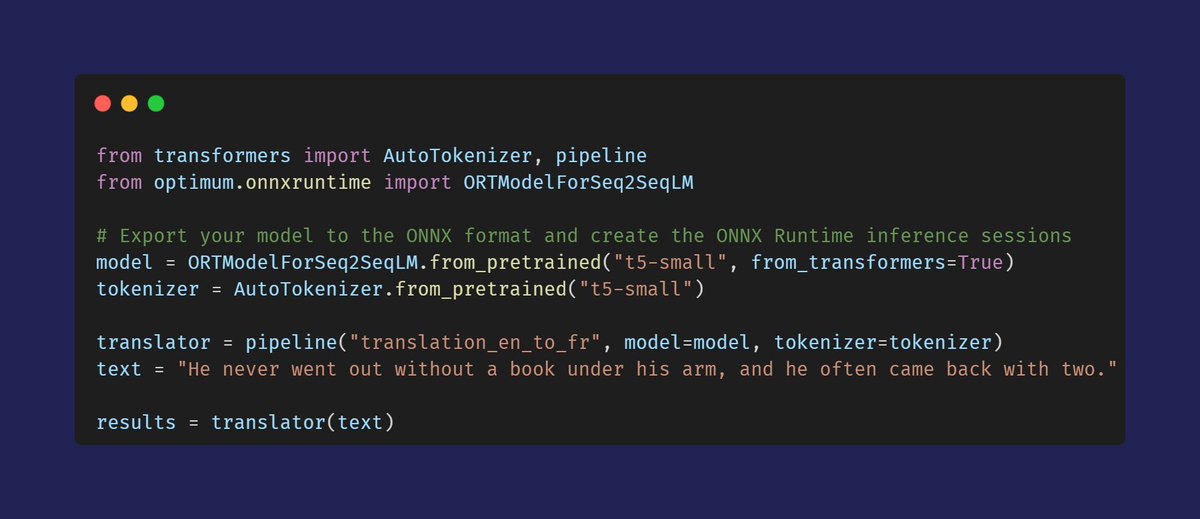

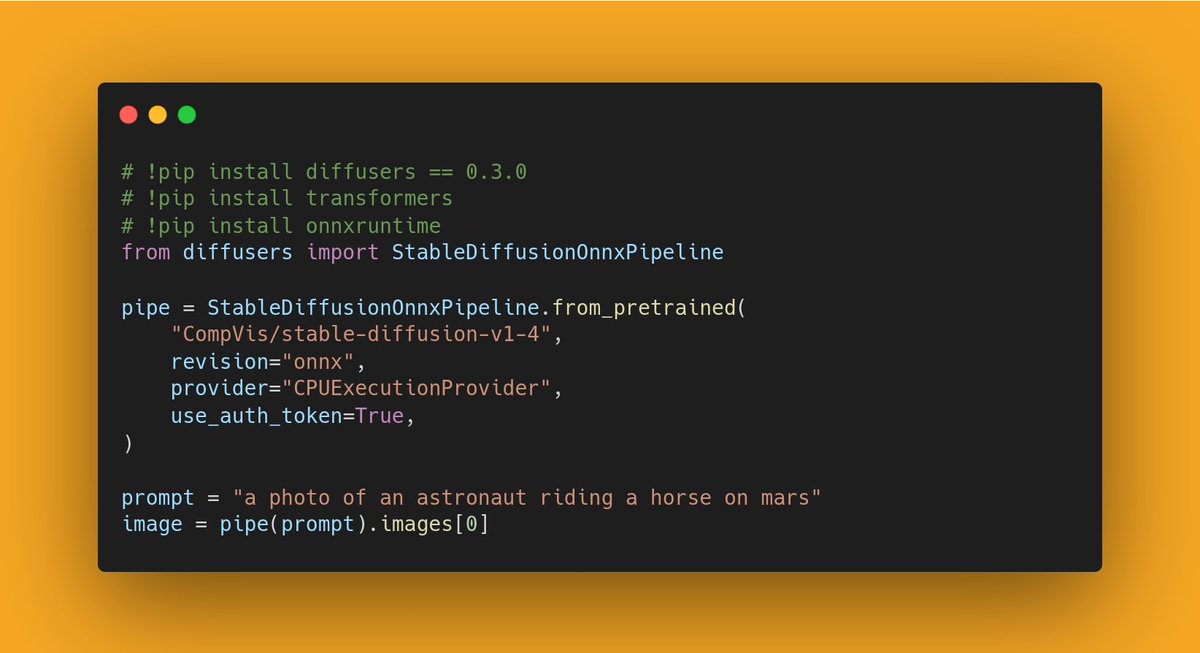

Diffusers 0.3.0 supports an experimental ONNX exporter and pipeline for Stable Diffusion 🎨

To find out how to export your own checkpoint and run it with onnxruntime, check the release notes:

github.com/huggingface/di…

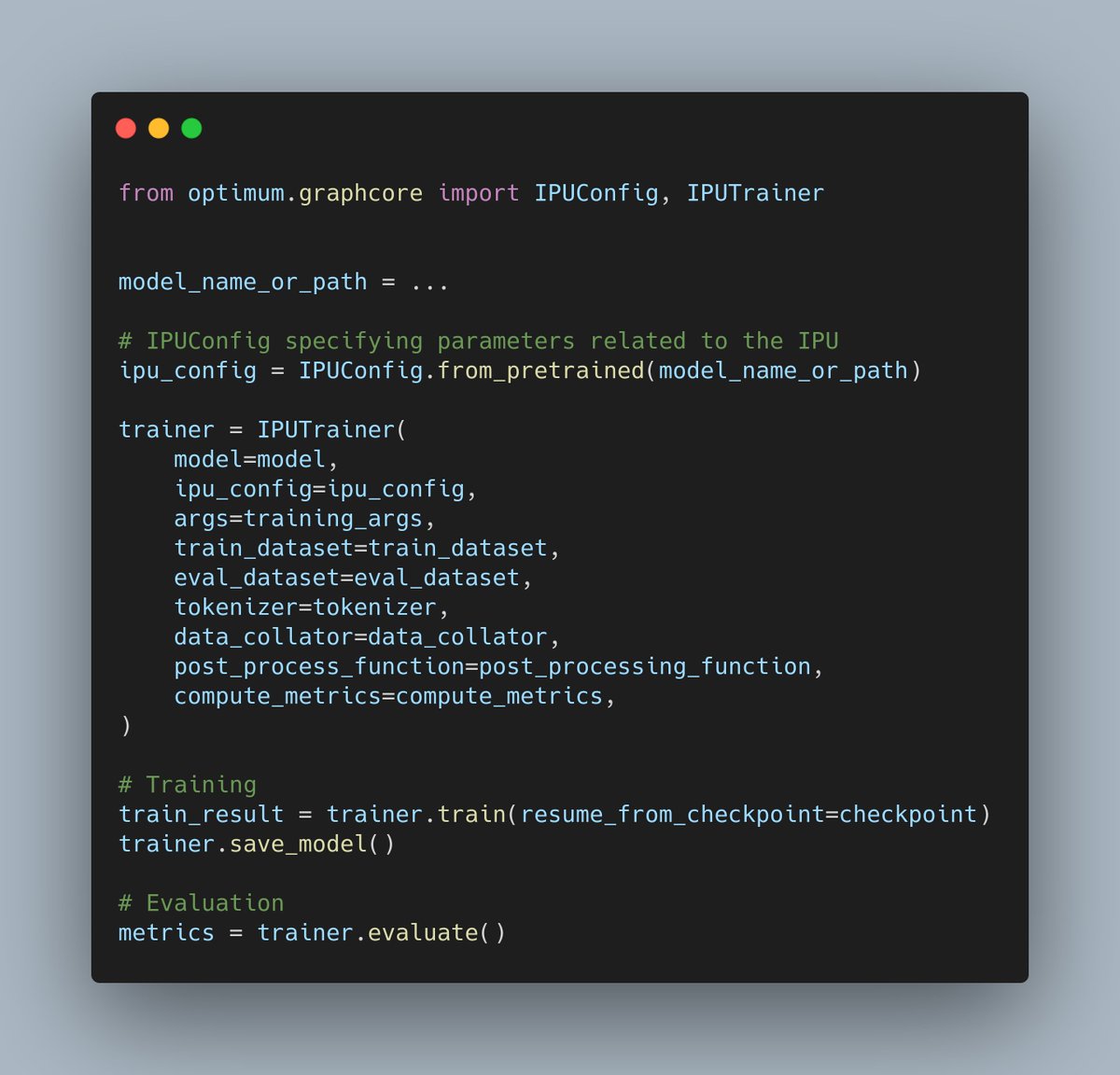

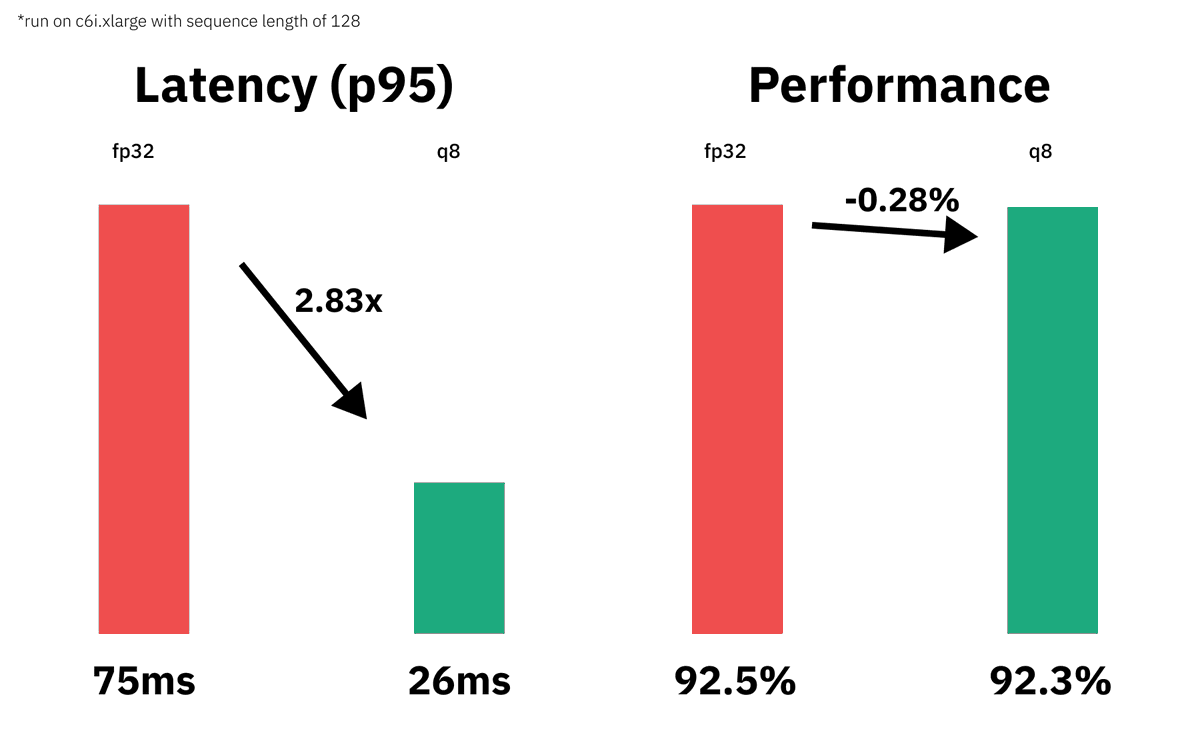

Together with Lewis Tunstall, I gave a talk today at MLOps World about accelerating Transformers with Hugging Face Optimum🌍🚀

We covered how to cut DistilBERT latency by up to ~3x while keeping > 99.7% accuracy🏎💨

📕 philschmid.de/static-quantiz…

⭐️github.com/philschmid/opt…

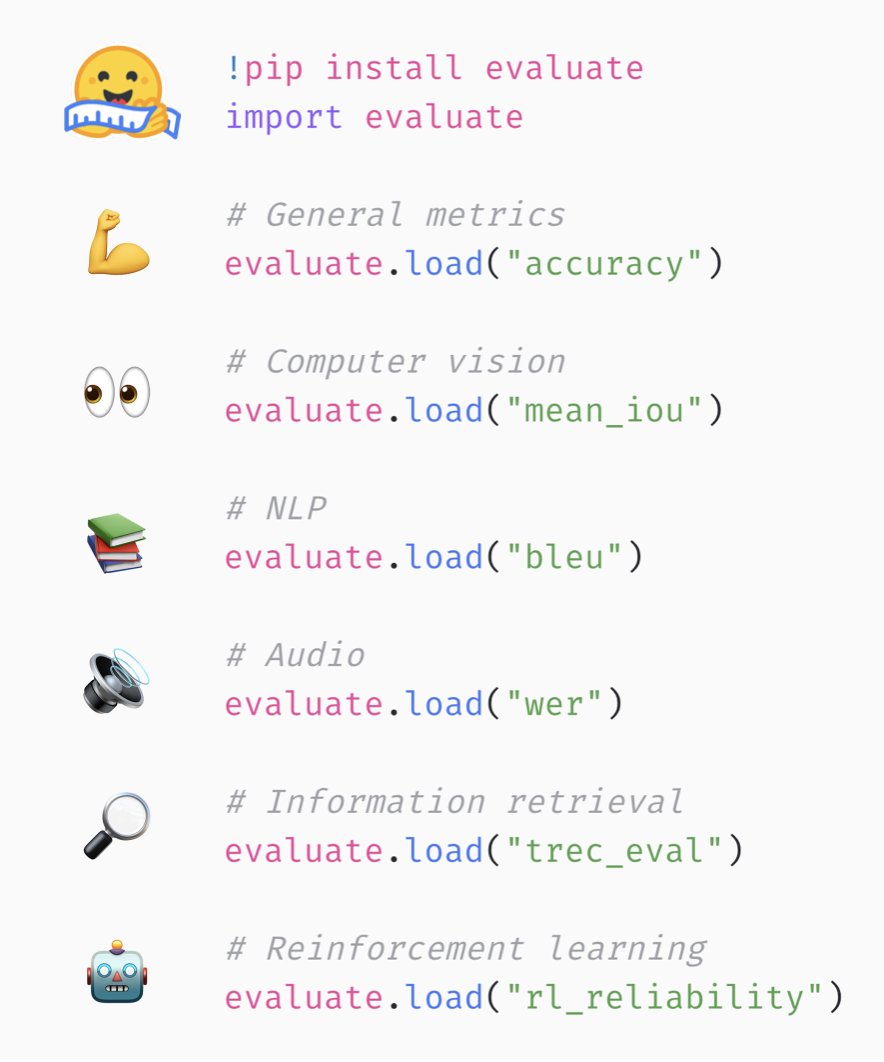

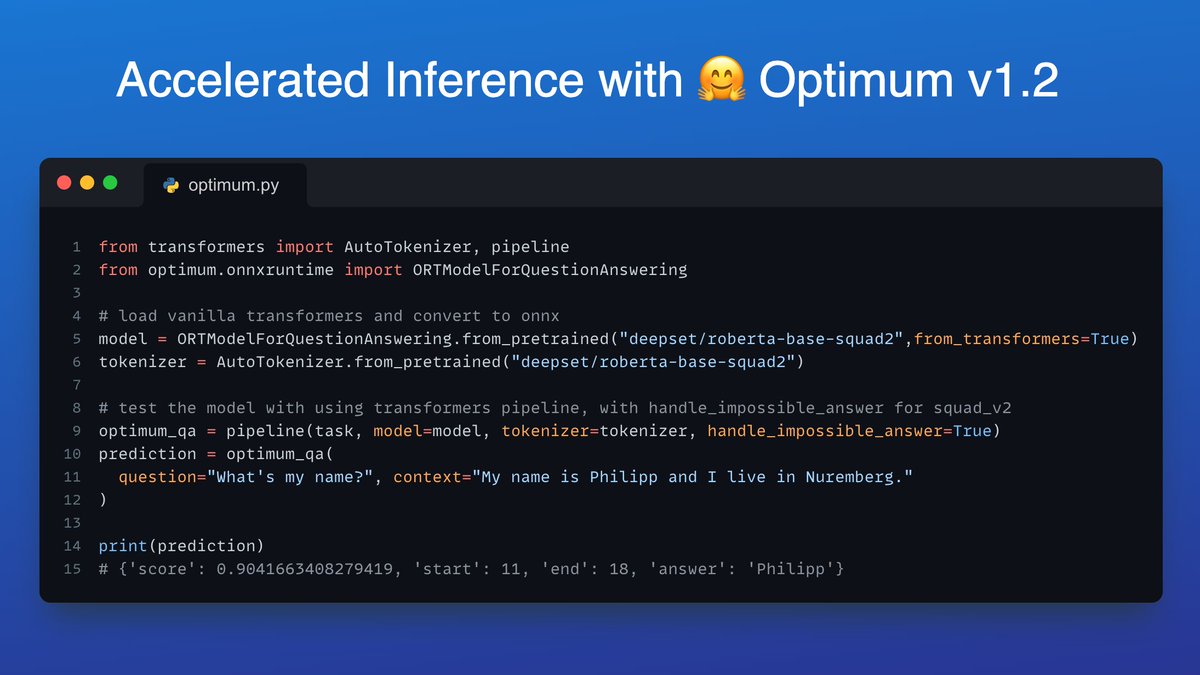

Optimum v1.2 adds ACCELERATED inference pipelines - including text generation - for onnxruntime🚀

Learn how to accelerate RoBERTa for Question-Answering including quantization and optimization with 🤗Optimum in our blog 🦾🔥

📕huggingface.co/blog/optimum-i…

⭐️github.com/huggingface/op…

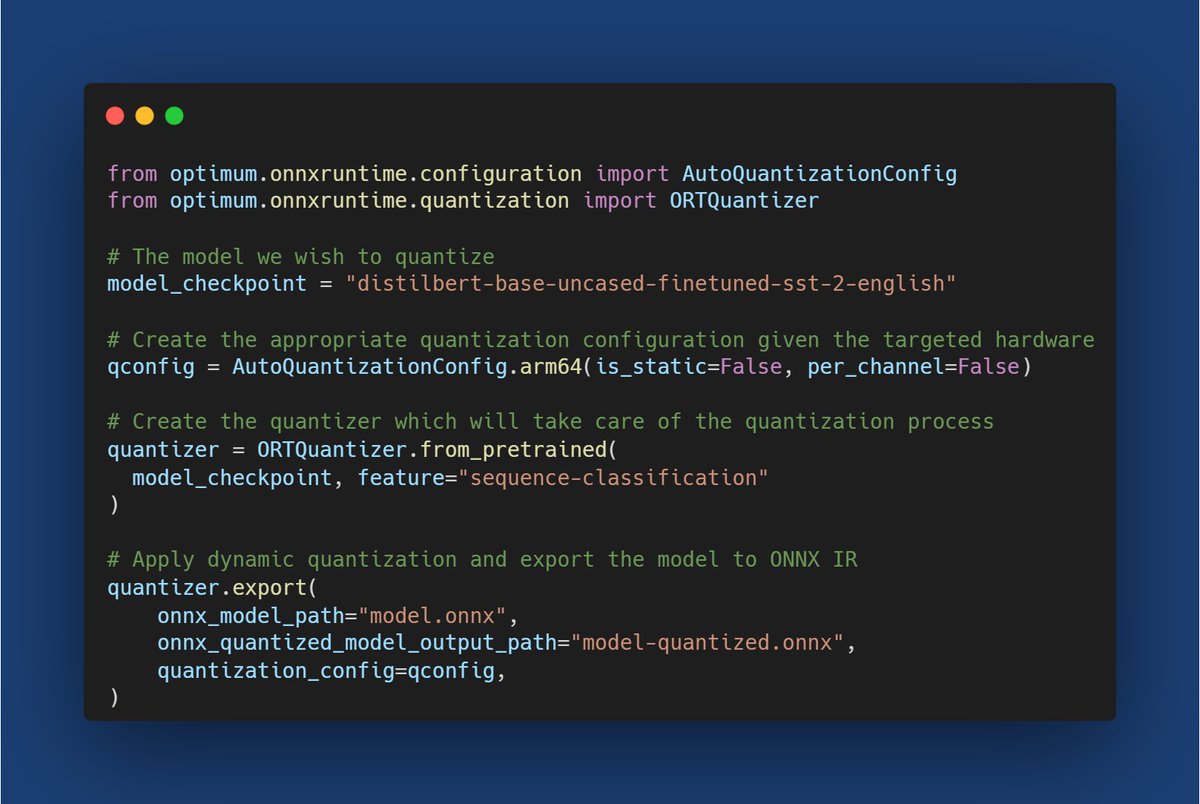

You can now accelerate inference by applying quantization to models from the Hugging Face Hub 🔥

➡️ With 🤗 Optimum, you can easily apply static and dynamic quantization to your model before exporting it to the ONNX format 🤯

Start here 👉 huggingface.co/docs/optimum/m…
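
For readers new to the idea: static and dynamic quantization both map floating-point values to 8-bit integers through a scale factor, and differ mainly in when the activation ranges are computed. The snippet below is only a plain-Python sketch of that mapping on a small list of weights. It is not the Optimum or ONNX Runtime API, and the helper names `quantize_int8` and `dequantize_int8` are hypothetical, chosen for illustration.

```python
def quantize_int8(values):
    """Symmetric per-tensor int8 quantization (illustrative helper, not a library call).

    Returns the integer codes and the scale needed to map them back to floats.
    """
    max_abs = max((abs(v) for v in values), default=0.0)
    # Assumption: use the symmetric range [-127, 127]; fall back to scale 1.0 for all-zero input.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize_int8(codes, scale):
    """Map int8 codes back to approximate float values."""
    return [c * scale for c in codes]


weights = [0.5, -1.27, 0.0, 1.0]
codes, scale = quantize_int8(weights)
restored = dequantize_int8(codes, scale)

# The round trip is lossy: each restored value is within half a quantization step of the original.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, scale, max_error)
```

The integers are what gets stored and multiplied at inference time, which is where the speedup comes from; the scale is kept alongside them so results can be converted back to floats.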

Tomorrow at EMNLP21 (Session 9F) I will present 'Block Pruning for Faster Transformers', a paper we wrote with Ella Charlaix, Victor Sanh, Sasha Rush (ICLR), and Hugging Face. I will describe how we managed to speed up fine-tuned transformers by more than 2.5x while preserving accuracy!