Zaid Khan

@codezakh

@uncnlp with @mohitban47 working on grounded reasoning + multimodal agents // currently @allen_ai formerly @neclabsamerica // bs+ms CompE @northeastern

ID: 1669925833891356673

http://zaidkhan.me 17-06-2023 04:32:28

373 Tweets

508 Followers

755 Following

Shiny new building and good views from the new Virginia Tech campus in DC 😉 -- it was a pleasure to meet everyone and engage in exciting discussions about trustworthy agents, collaborative reasoning/privacy, and controllable multimodal generation -- thanks again Naren Ramakrishnan,

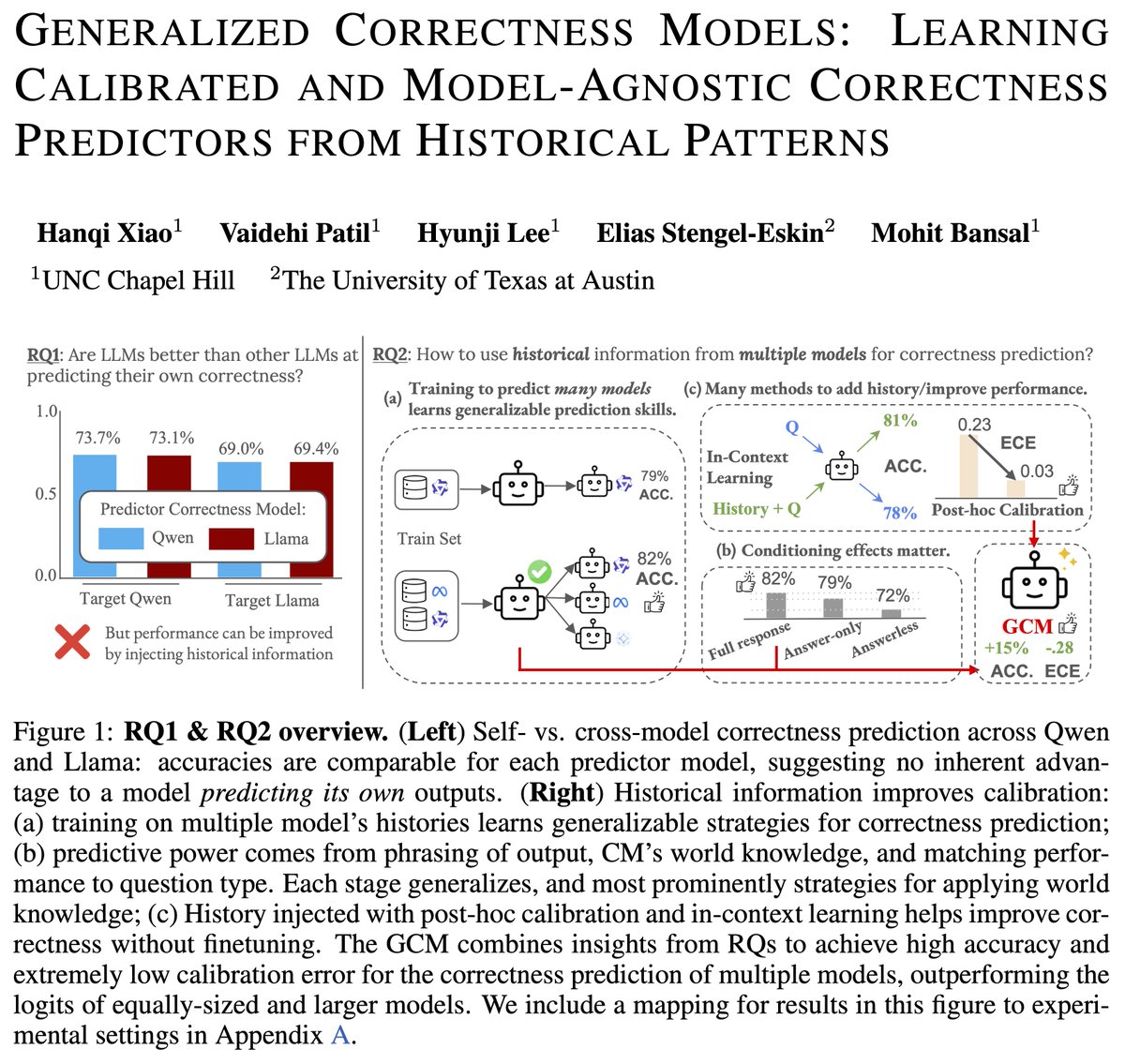

🚨Excited to announce General Correctness Models (GCM): 🔎We find no special advantage in using an LLM to predict its own correctness. Instead, we find that LLMs benefit from learning to predict the correctness of many other models, becoming a GCM. Huge thanks to Vaidehi Patil,

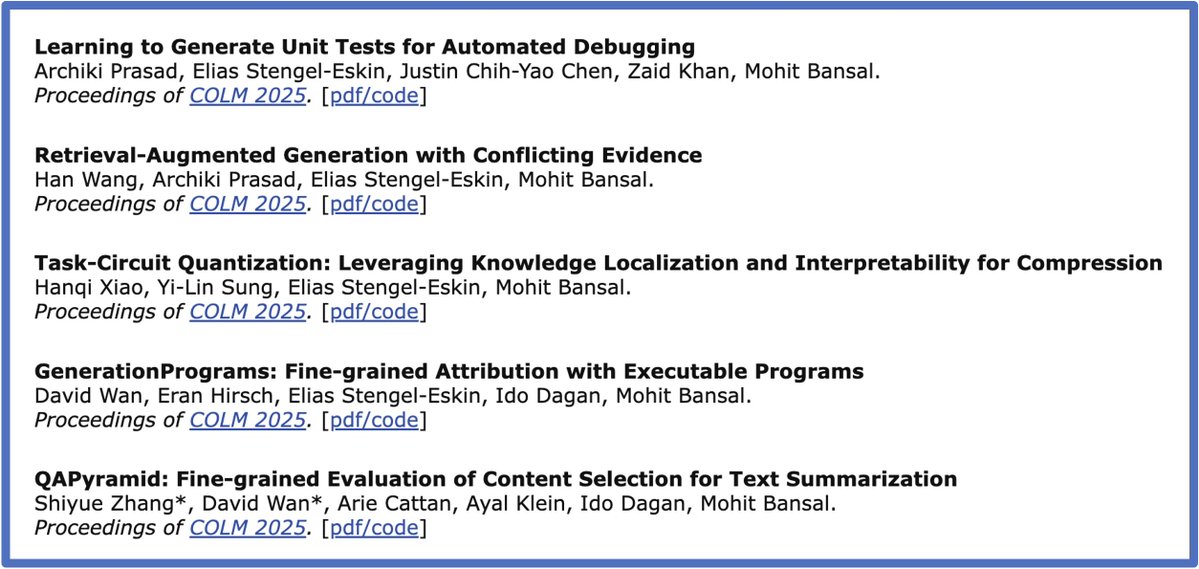

Conference on Language Modeling Archiki Prasad ✈️ COLM 2025 David Wan Elias Stengel-Eskin Han Wang @ COLM 2025 Hanqi Xiao (detailed links + websites + summary 🧵's of these papers attached below FYI 👇) -- Learning to Generate Unit Tests for Automated Debugging. Archiki Prasad ✈️ COLM 2025 Elias Stengel-Eskin Justin Chih-Yao Chen Zaid Khan arxiv.org/abs/2502.01619 x.com/ArchikiPrasad/…

Conference on Language Modeling Archiki Prasad ✈️ COLM 2025 David Wan Elias Stengel-Eskin Han Wang @ COLM 2025 Hanqi Xiao Justin Chih-Yao Chen Zaid Khan Yi Lin Sung Eran Hirsch BIU NLP Shiyue Zhang Arie Cattan Ayal Klein -- Postdoc openings info/details 👇 (flyer+links: cs.unc.edu/~mbansal/postd…) Also, PhD admissions/openings info: cs.unc.edu/~mbansal/prosp… x.com/mohitban47/sta…