Chris Rytting

@chrisrytting

Trying to make AI improve human QoL.

Formerly @UW, @nvidia, OSPC @AEI, @NewYorkFed Macroeconomic Research. PhD in CS/NLP from @BYU.

ID: 19300634

https://chrisrytting.github.io/

21-01-2009 18:49:40

820 Tweets

412 Followers

561 Following

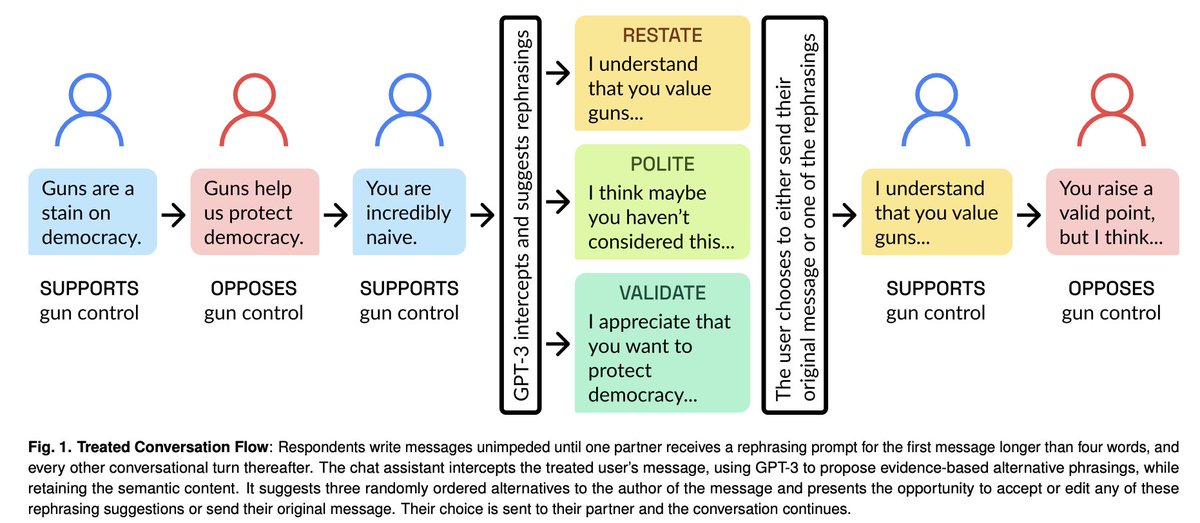

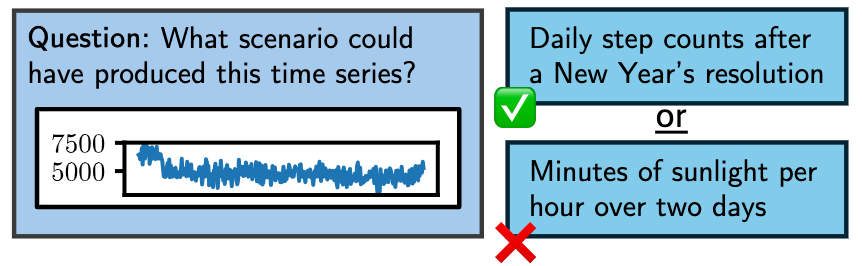

Do you ever feel like "what even is AI alignment when humans are so diverse?" or "I wonder how AI can serve everyone in pluralistic societies"? You're not alone! Check out our new piece on pluralistic alignment (or Taylor's thread) and expand that mind. Taylor Sorensen does it again!