Huayu Chen

@chenhuay17

PhD candidate at TsinghuaSAIL

ID: 1847135162636947456

http://chendrag.github.io

18-10-2024 04:38:48

7 Tweets

22 Followers

57 Following

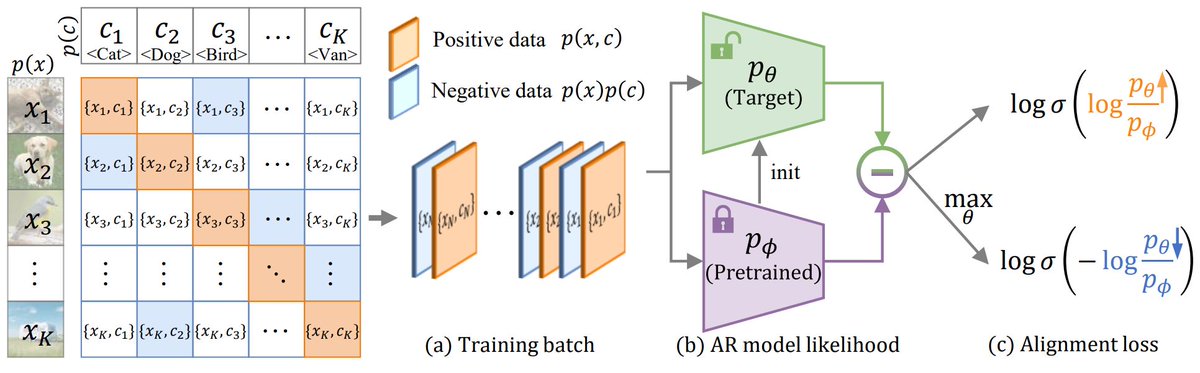

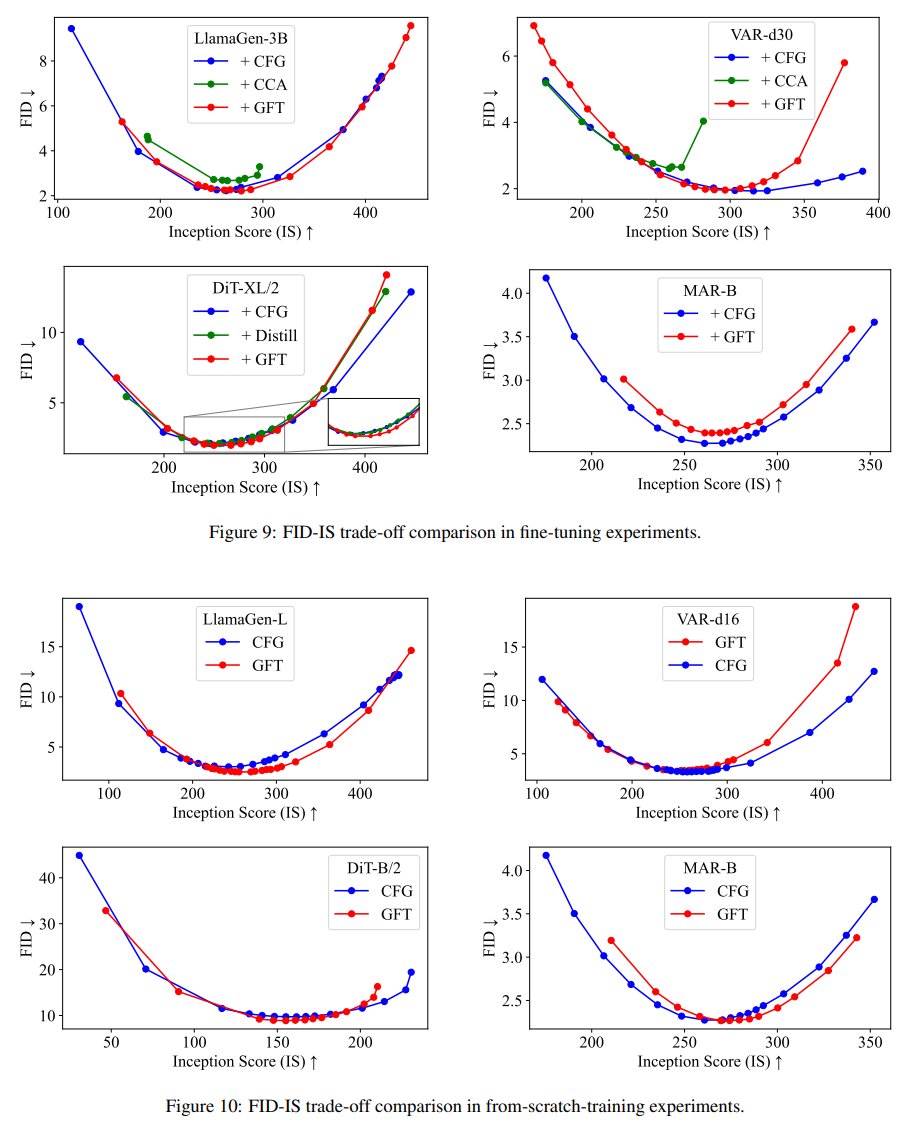

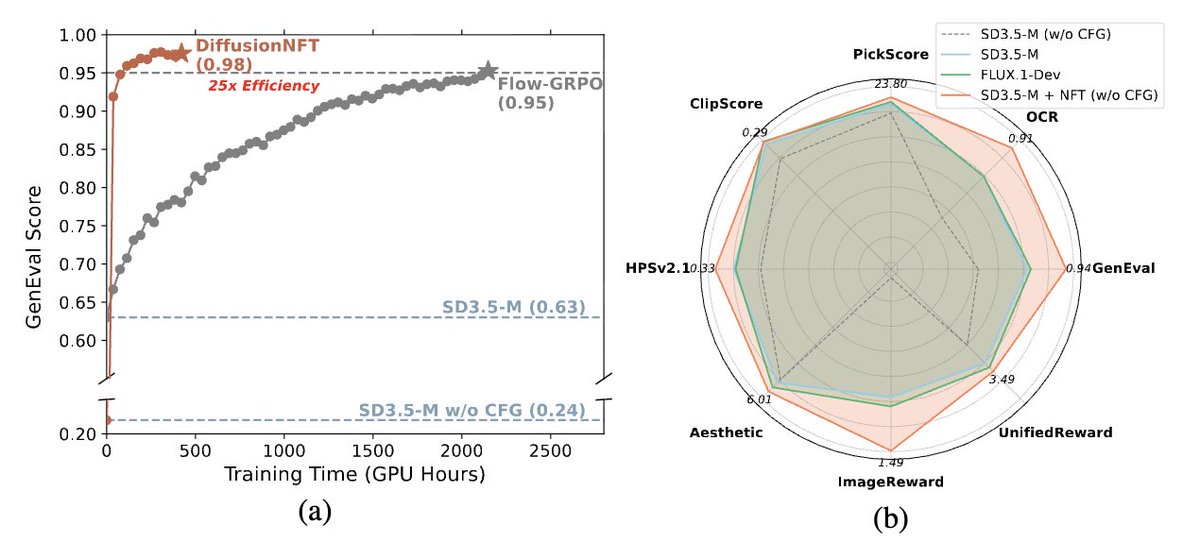

Looking for an RL algorithm to improve your diffusion models? DiffusionNFT might help. Check it out: github.com/NVlabs/Diffusi… It is 25x more efficient than FlowGRPO and achieves SOTA results on various benchmarks. With Huayu Chen, Kaiwen Zheng, Qinsheng Zhang, and Haoxiang Wang.

Check out Haotian Ye @ NeurIPS25's work on regularized video diffusion RL. It is amazing how effective simple data regularization turns out to be at preventing reward hacking and boosting quality.