Bing Yan

@bingyan4science

AI+Chemistry PhD student @nyuniversity | Prev PhD of Chemistry @mit

ID: 905020625579905025

http://bingyan.me 05-09-2017 10:51:46

13 Tweets

76 Followers

185 Following

Meet the Chalk-Diagrams plugin for ChatGPT! Based on Dan Oneață & Sasha Rush's lib, it lets you create vector graphics with language instructions. Try it with ChatGPT plugins: 1️⃣ Plugin store 2️⃣ Install unverified plugin 3️⃣ chalk-diagrams.com Test it out: "draw a pizza" 🍕

Excited to share that I'm joining Waterloo's Cheriton School of Computer Science as an Assistant Professor and Vector Institute as a Faculty Affiliate in Fall '24. Before that, I'm doing a postdoc at Ai2 with Yejin Choi. Immensely grateful to my PhD advisors Sasha Rush and Stuart Shieber. This journey wouldn't have

What do people use ChatGPT for? We built WildVis, an interactive tool to visualize the embeddings of million-scale chat datasets like WildChat. Work done with Wenting Zhao, Jack Hessel, Sean Ren, Claire Cardie, and Yejin Choi. 📝huggingface.co/papers/2409.03… 🔗wildvisualizer.com/embeddings/eng… 1/7

Yuntian Deng from University of Waterloo presented at CILVR on internalizing reasoning in language models. His team's finetuning approach enabled GPT-2 Small to solve 20x20 multiplication with 99.5% accuracy, while standard training couldn't go beyond 4x4. Demo up at huggingface.co/spaces/yuntian…

Can we build an operating system entirely powered by neural networks? Introducing NeuralOS: towards a generative OS that directly predicts screen images from user inputs. Try it live: neural-os.com Paper: huggingface.co/papers/2507.08… Inspired by Andrej Karpathy's vision. 1/5

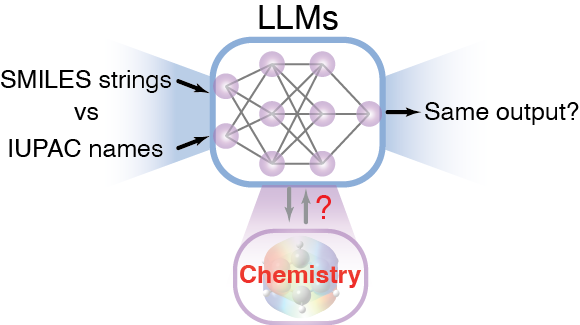

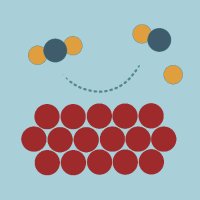

Our new paper in Digital Discovery is out 💡 With Kyunghyun Cho and Angelica Chen. Do LLMs capture intrinsic chemistry, beyond string representations? Across SMILES & IUPAC: ❌ SOTA models: very low consistency (<1%) ⚖️ 1-to-1 mapped finetune ↑ consistency, not accuracy Read: pubs.rsc.org/en/Content/Art…