Andrey Cheptsov

@andrey_cheptsov

@dstackai. AI infra. Anti-k8s. Previously @JetBrains

ID: 13244412

https://github.com/dstackai/dstack 08-02-2008 12:13:50

8.8K Tweets

1.1K Followers

317 Following

Andrey Cheptsov Constantin Dominique Paul With OSS LLMs - Europe’s on it. Llama was basically an EU product (FAIR & HF). Qwen/DeepSeek-level work can happen here. I realise I sound like an old fart & I apologise. As an angel investor, I'm happy to back the teams building this. I'm invested in, e.g., Continue & Rasa.

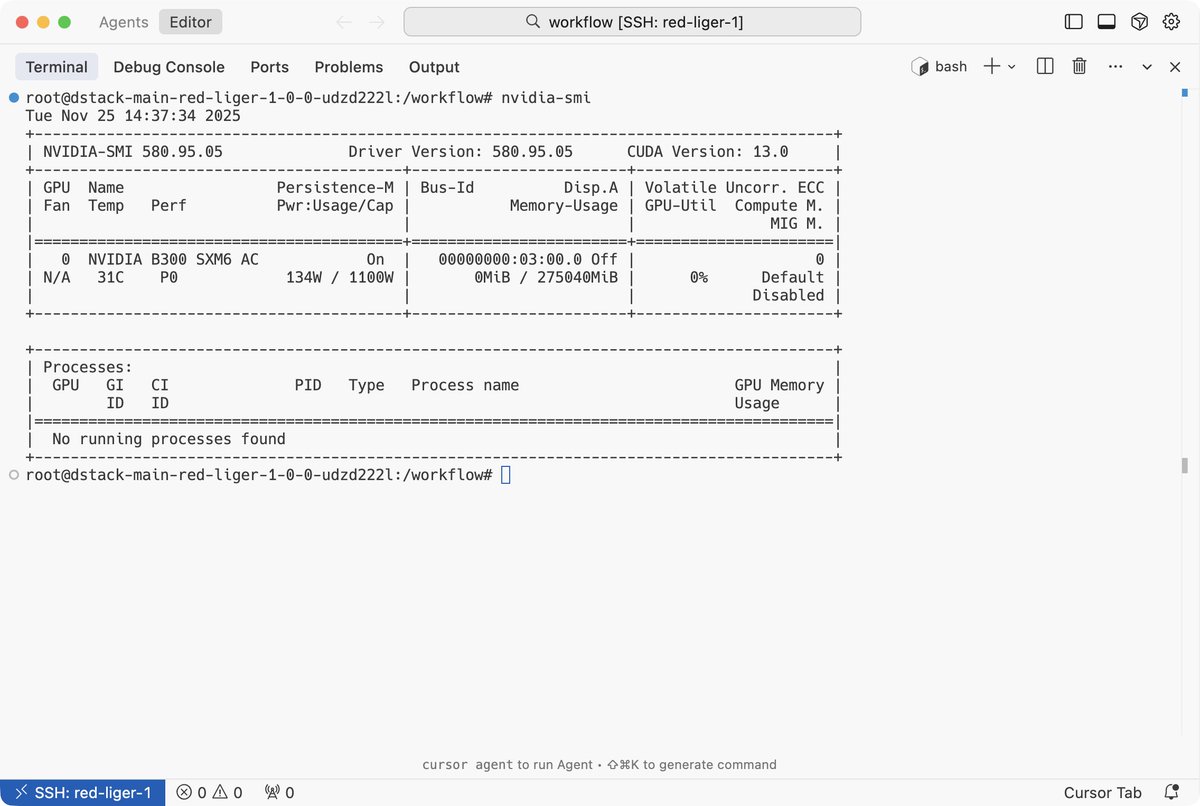

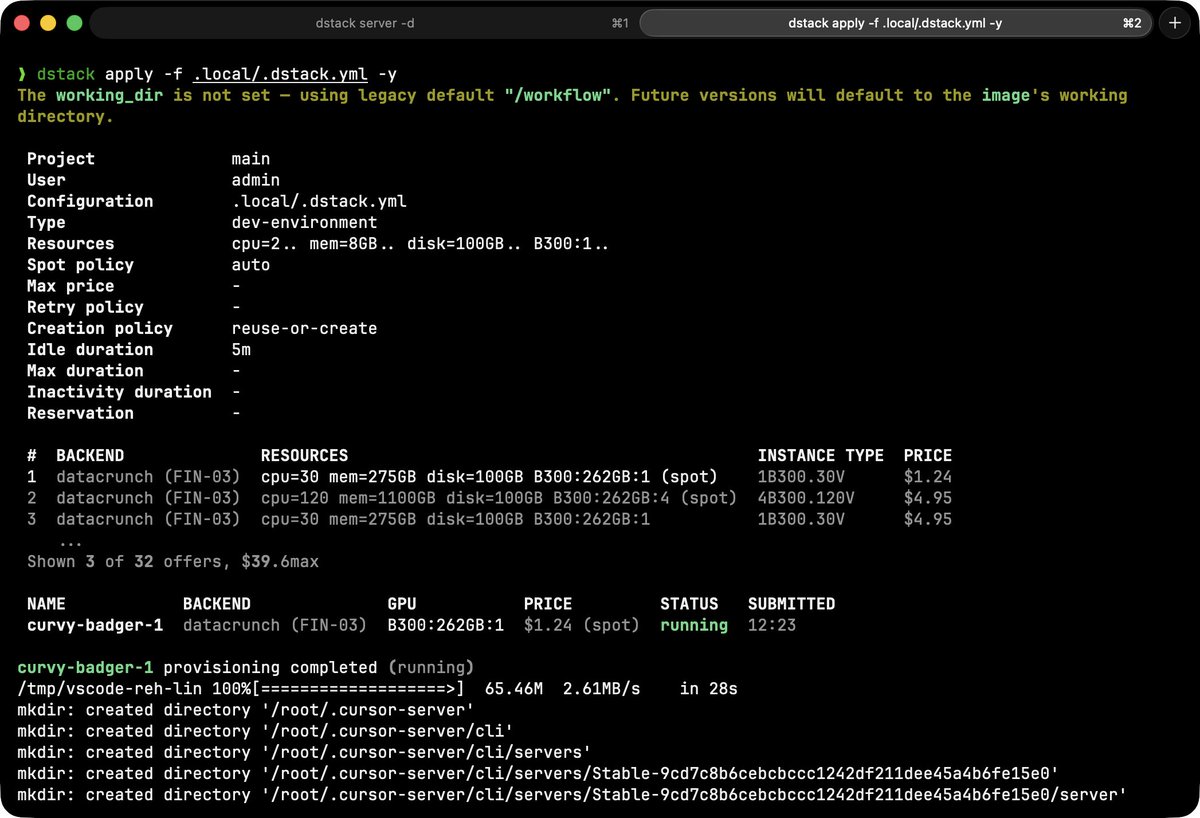

Early access: B300 (Blackwell Ultra) VMs are now available on Verda (formerly DataCrunch) via dstack.

* Available both on-demand and as spot
* Up to 8× GPUs per VM
* Pricing: $4.95/h on-demand, $1.24/h spot

If you need Blackwell nodes for training, eval or inference, you

Full blog here 👇 aerlabs.tech/blogs/dstack-g… A big shoutout to Andrey Cheptsov, the driving force behind dstack, for building something genuinely thoughtful and developer-first. 🙌

Excited to see dstack featured in Verda (formerly DataCrunch)'s monthly digest! We're thrilled about the dstack-Verda integration and how it simplifies training and inference orchestration for AI builders.