Alina Leidinger

@alinaleidinger

PhD student in NLP+AI Ethics @UvA_Amsterdam || prev mathematics @imperialcollege @TU_Muenchen

she/her

ID: 1225719705694089222

https://aleidinger.github.io

07-02-2020 09:55:43

22 Tweets

384 Followers

527 Following

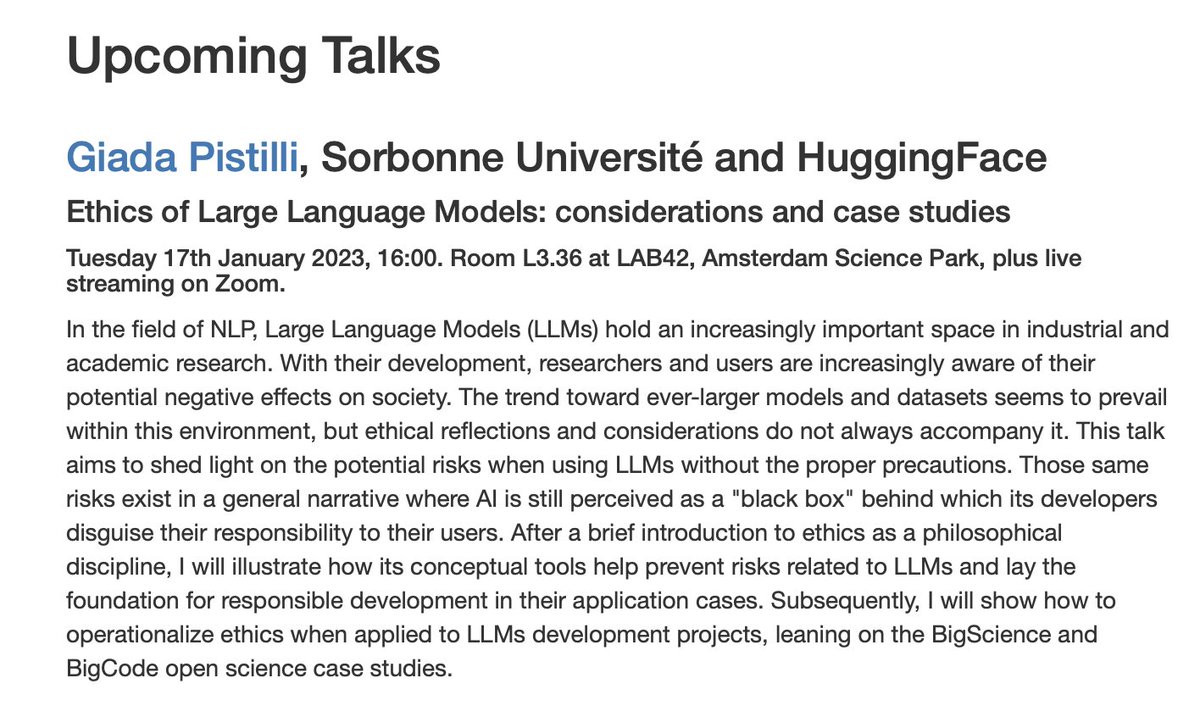

Very excited to give a seminar tomorrow on the ethics of Large Language Models at UvA Amsterdam! Be sure to stop by if you are around, or ping me if you'd like to watch the live stream on Zoom. Big thanks to Sandro Pezzelle for the kind invitation. I am looking forward to it!

At the #EMNLP2023 poster session right now, all the way in the back: Check out Alina Leidinger's poster on the linguistic properties of successful prompts and start treating them as hyperparameters to be re-tuned for every task and model!

How do open text-analyzing models respond to questions relating to LGBTQ+ rights, social welfare, surrogacy and more? Giada Pistilli, Alina Leidinger, Atoosa Kasirzadeh, Yacine Jernite, Sasha Luccioni, PhD 🦋🌎✨🤗, and MMitchell found that they tend to answer questions inconsistently, which reflects biases embedded

Our paper has been accepted at the AI, Ethics, and Society Conference (AIES)! I am on maternity leave and won't be able to attend, but be sure to talk about it with my co-authors Alina Leidinger, MMitchell, Atoosa Kasirzadeh, Yacine Jernite, and Sasha Luccioni, PhD 🦋🌎✨🤗 ✨

Very happy to be presenting this project at AI, Ethics, and Society Conference (AIES) today! If you're at #AIES2024, drop by our poster at 6 p.m. today!