SHT

@dbohler

ID:17441423

17-11-2008 13:39:24

3.9K Tweets

210 Followers

1.8K Following

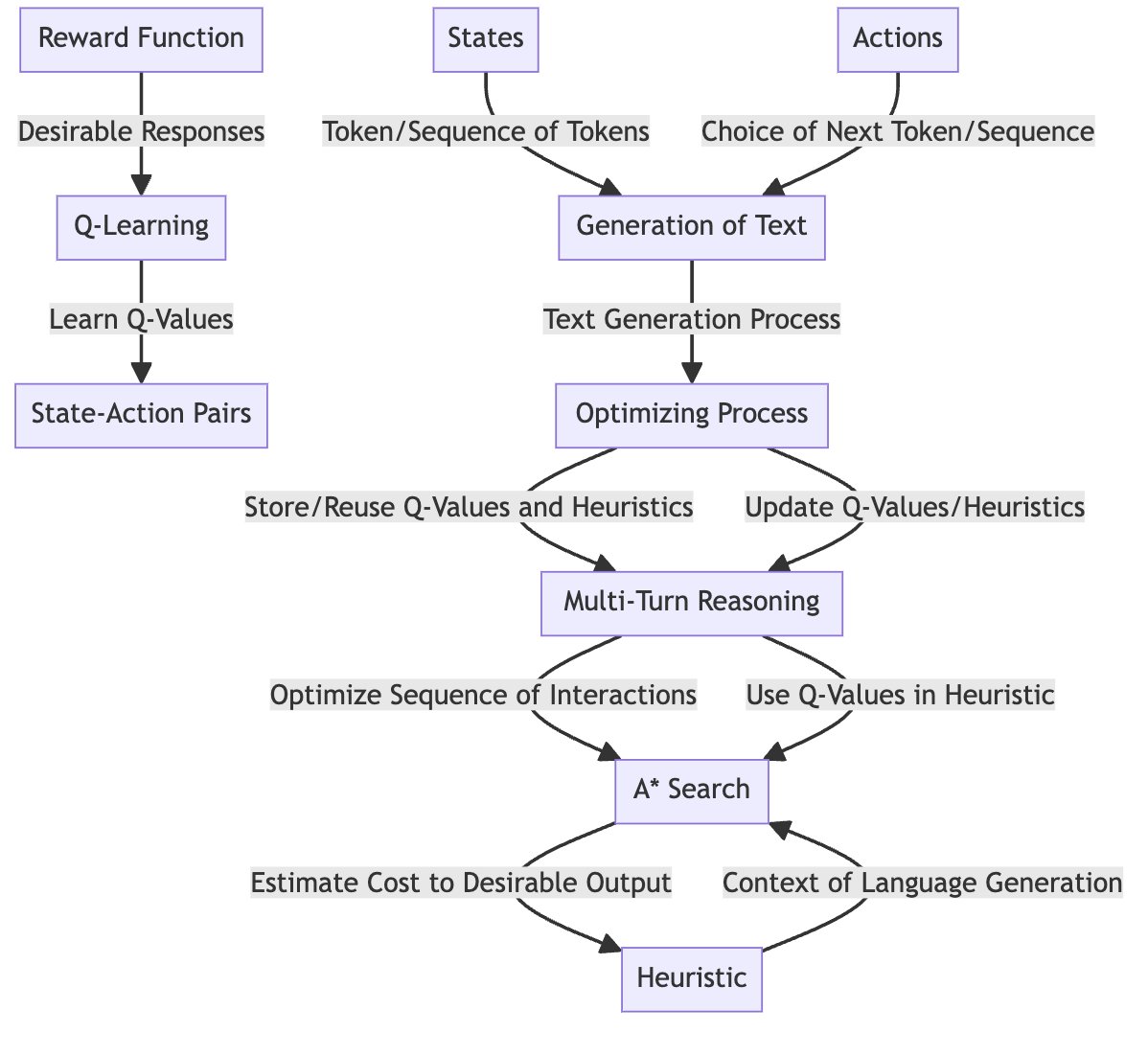

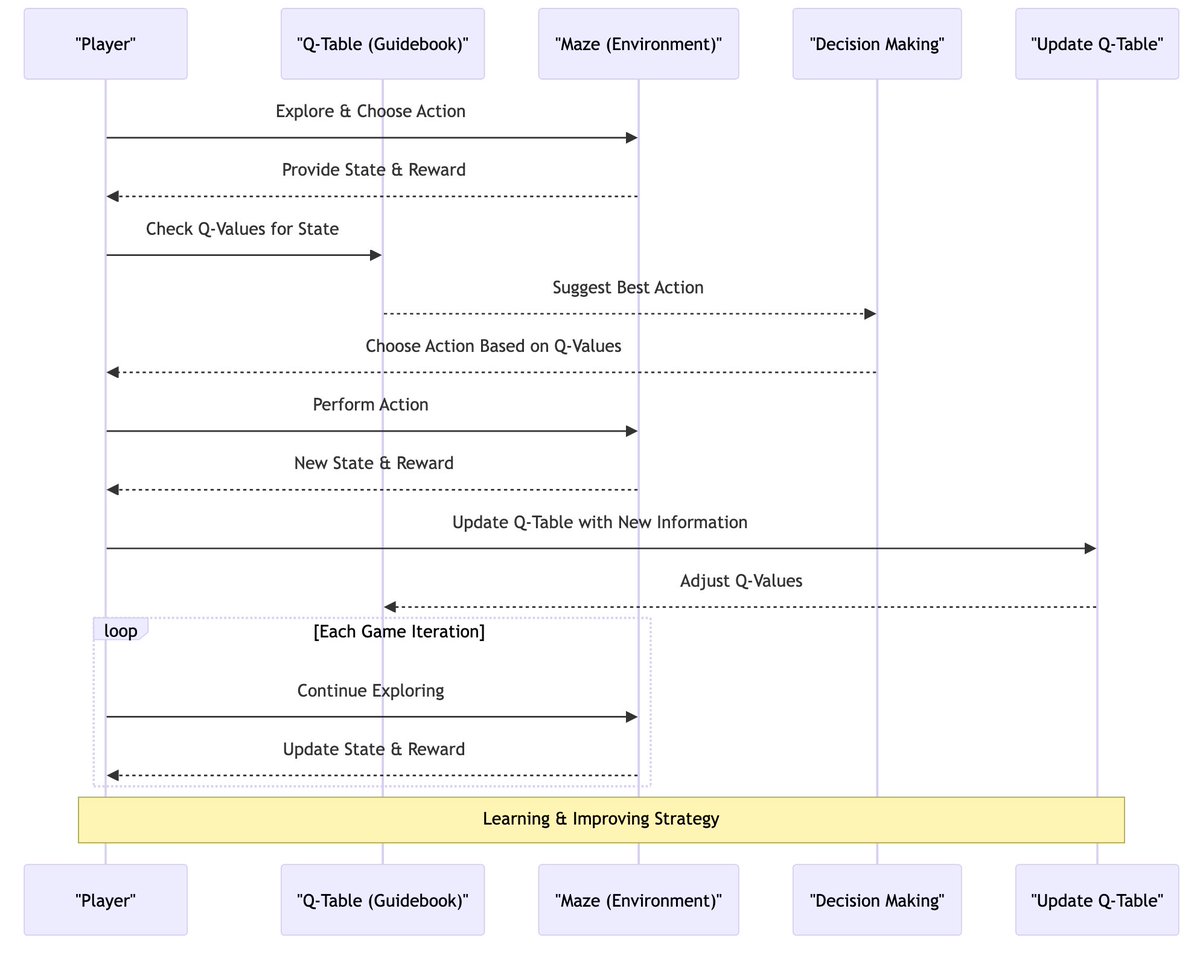

MrDee@SOG 🫡 This is a surprisingly good response. I highly recommend you look at DQNs (deep Q-networks). They are small, fast, and learn over time. They are great for small tasks where information is scarce, e.g., should you evict an element from a cache, or should you switch lanes in a car?

After almost a decade, I have made the decision to leave OpenAI. The company’s trajectory has been nothing short of miraculous, and I’m confident that OpenAI will build AGI that is both safe and beneficial under the leadership of Sam Altman, Greg Brockman, Mira Murati and now, under the

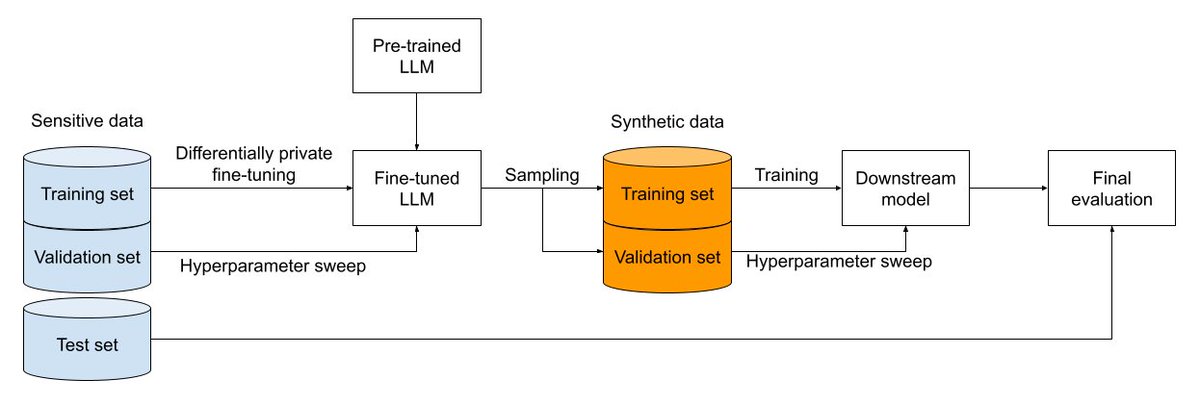

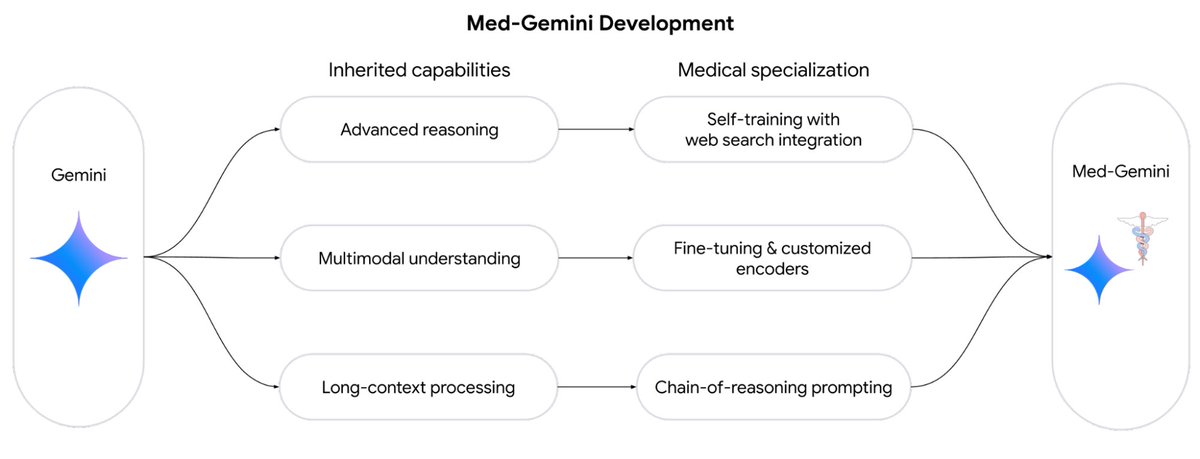

Introducing Med-Gemini, our new family of AI research models for medicine, building on Gemini's advanced capabilities. We've achieved state-of-the-art performance on a variety of benchmarks and unlocked novel applications. goo.gle/3UK7Oax #MedGemini #MedicalAI

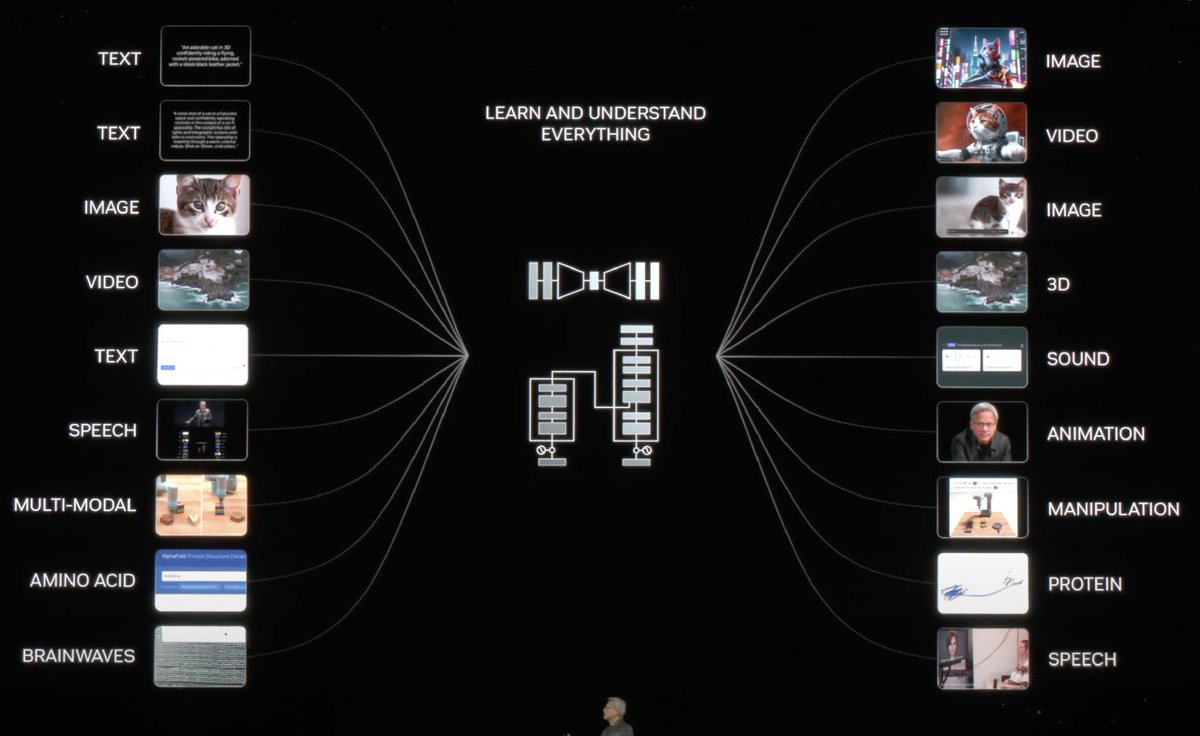

TED Talks Andrej Karpathy Agrim Gupta Kyle Sargent ~10 years is a small blip in history, but a giant leap forward for the field of AI and Computer Vision. This was my TED talk in 2015, at the dawn of Modern AI. What a decade we have had since then! If you watch both of these talks, it will give you a pretty good understanding

TED Talks Andrej Karpathy Agrim Gupta Kyle Sargent One day, Spatially Intelligent robots can even hope to help people to do tasks that they need help with. 9/ x.com/drfeifei/statu…

TED Talks Andrej Karpathy Agrim Gupta Kyle Sargent Empowering embodied intelligence with spatial intelligence and language intelligence will unlock exciting possibilities. 8/ x.com/drfeifei/statu…