Danqi Chen

@danqi_chen

Associate professor @princeton_nlp @princetonPLI @PrincetonCS. Previously: @facebookai, @stanfordnlp, @Tsinghua_Uni danqi-chen.bsky.social

ID: 96570221

https://www.cs.princeton.edu/~danqic/

Joined: 13-12-2009 15:38:10

404 Tweets

15.15K Followers

753 Following

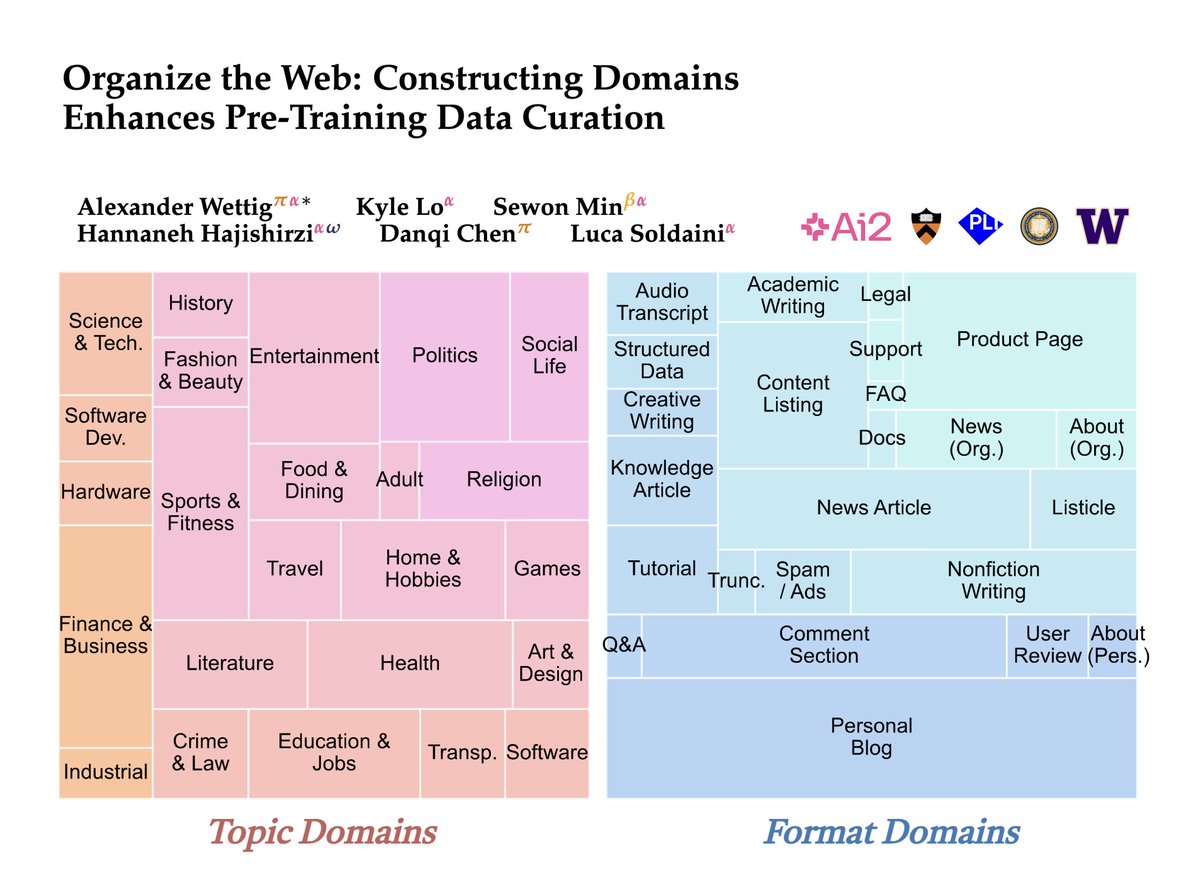

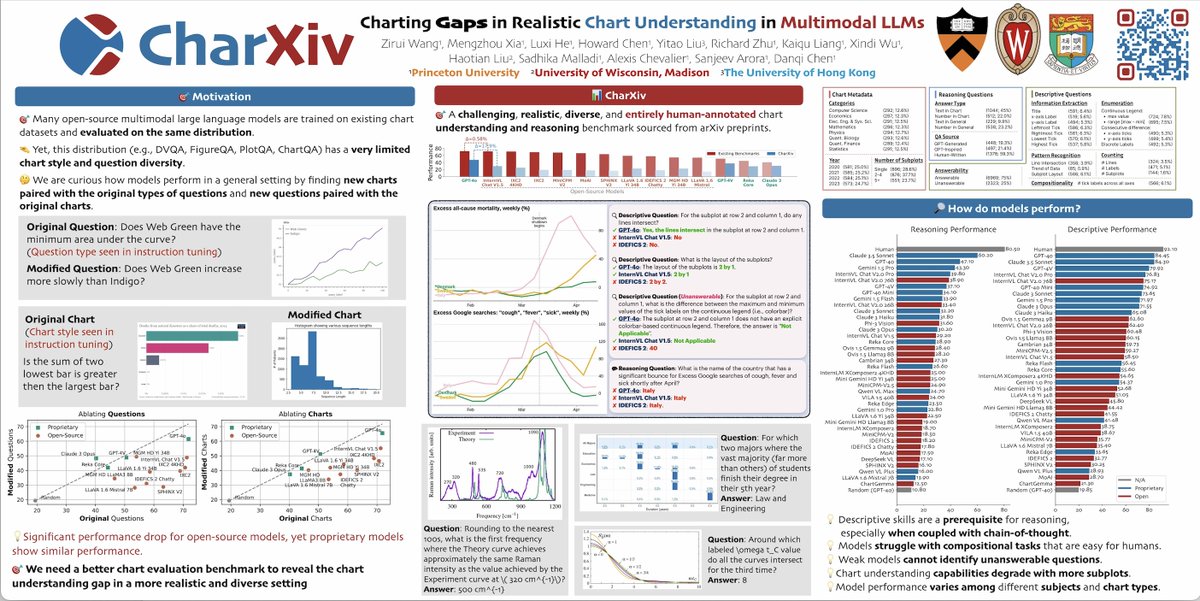

I’ve just arrived in Vancouver and am excited to join the final stretch of #NeurIPS2024! This morning, 11am-2pm, we are presenting 3 papers:
- Edge pruning for finding Transformer circuits (#3111, spotlight): Adithya Bhaskar
- SimPO (#3410): Yu Meng, Mengzhou Xia
- CharXiv (#5303)

Mitigating racial bias in LLMs is a lot easier than removing it from humans! Can’t believe this happened at the best AI conference, NeurIPS. We have ethical reviews for authors, but missed them for invited speakers? 😡

Announcement #1: our call for papers is up (colmweb.org/cfp.html)! 🎉 We are also excited to announce the COLM 2025 program chairs: Yoav Artzi, Eunsol Choi, Ranjay Krishna, and Aditi Raghunathan.

Congratulations to Stanford NLP Group founder Christopher Manning on being elected to the National Academy of Engineering (NAE) Class of 2025 for the development and dissemination of natural language processing methods.

Excited to announce our 2025 keynote speakers: Shirley Ho, Nicholas Carlini, Luke Zettlemoyer, and Tom Griffiths!

Welcome to the official X account of the Princeton Laboratory for Artificial Intelligence (“AI Lab” for short). Our mission is to support and expand the scope of AI research at Princeton University. Follow our page for the latest updates on events, news, research, and more at the AI Lab.

We're so proud that Princeton researchers received 1 outstanding paper award and 1 honorable mention at ICLR 2025! Congratulations to Peter Henderson, Xiangyu Qi, Prateek Mittal, Ashwinee Panda, Tianhao Wang ("Jiachen"), and Kaifeng Lyu. blog.iclr.cc/2025/04/22/ann…

Our warmest congratulations to Danqi Chen, Stanford NLP Group grad and now Associate Professor at Princeton Computer Science and Associate Director of Princeton PLI, on her stunning ICLR 2025 keynote!