Danny Driess

@dannydriess

Research Scientist @physical_int.

Formerly Google DeepMind

ID: 1425030305501663282

http://dannydriess.github.io

10-08-2021 09:45:00

124 Tweets

3.3K Followers

316 Following

Had a blast on the Unsupervised Learning Podcast with Karol Hausman! We covered the past, present, and future of robot learning 🤖 Big thanks to Jacob Effron for being a fantastic host!

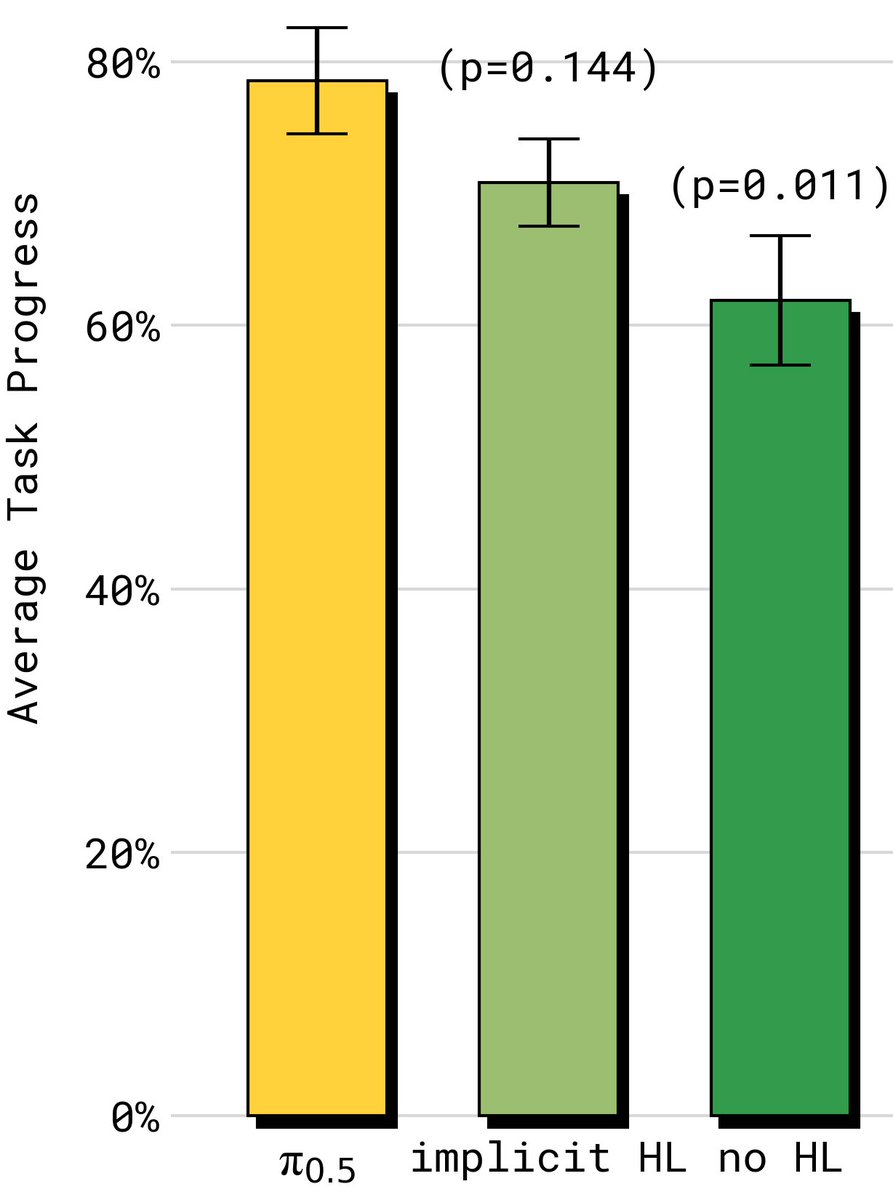

TRI's latest Large Behavior Model (LBM) paper landed on arxiv last night! Check out our project website: toyotaresearchinstitute.github.io/lbm1/ One of our main goals for this paper was to put out a very careful and thorough study on the topic to help people understand the state of the