Daniele Paliotta

@danielepaliotta

ML PhD @Unige_en, and other things.

ID: 1189887448618328065

https://danielep.xyz

Joined 31-10-2019 12:51:01

415 Tweets

560 Followers

1.1K Following

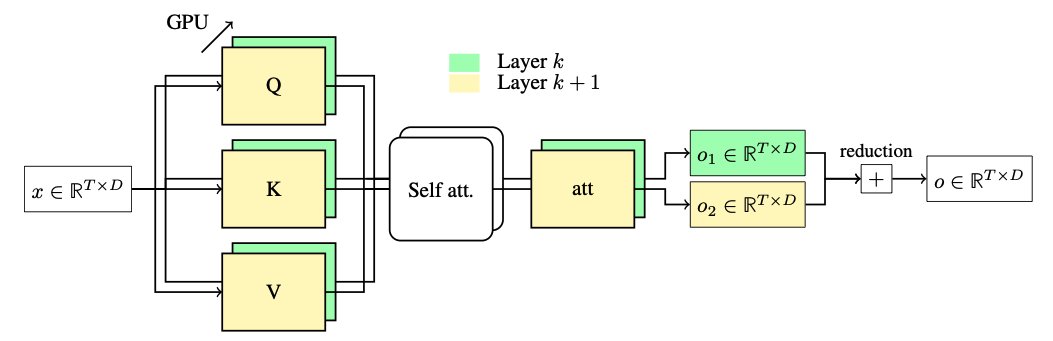

With the awesome Ramón Calvo, Daniele Paliotta, Matteo Pagliardini, and Martin Jaggi (Faculty of Science | UNIGE, EPFL Computer and Communication Sciences).

TL;DR: you can shuffle the middle layers of a transformer without retraining it. We take advantage of that to compute layers in parallel. arxiv.org/abs/2502.02790
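The TL;DR can be made concrete with a toy experiment. This is a hypothetical sketch: `make_layer`, `shuffle_middle`, the 12-layer depth, and the choice of layers 2 to 9 as the "middle" are all assumptions. The layers are random residual maps, not a pretrained transformer. So it only shows the mechanics of permuting the middle of a residual stack while keeping the first and last layers in place. It does not reproduce the paper's results.

```python
import math
import random

# Toy stand-in for a transformer block (an assumption, not a real LLM layer):
# each "layer" returns a residual update f(x) = tanh(W x) with a random W.
def make_layer(dim, scale, rng):
    w = [[rng.gauss(0.0, scale) for _ in range(dim)] for _ in range(dim)]

    def f(x):
        return [math.tanh(sum(wij * xj for wij, xj in zip(row, x))) for row in w]

    return f


def run(layers, x):
    """Sequential residual stack: x <- x + f(x) for each layer, in order."""
    for f in layers:
        x = [xi + di for xi, di in zip(x, f(x))]
    return x


def shuffle_middle(layers, start, end, rng):
    """Return a new list where layers[start:end] are randomly permuted.

    The first `start` layers and the layers from index `end` on keep their
    positions; the input list is not modified.
    """
    middle = layers[start:end]
    rng.shuffle(middle)
    return layers[:start] + middle + layers[end:]


def rel_diff(a, b):
    """Relative L2 distance ||a - b|| / ||a||."""
    num = math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))
    den = math.sqrt(sum(ai * ai for ai in a))
    return num / den


rng = random.Random(0)
dim, depth = 8, 12
layers = [make_layer(dim, 0.05, rng) for _ in range(depth)]
x0 = [rng.gauss(0.0, 1.0) for _ in range(dim)]

baseline = run(layers, x0)
shuffled = run(shuffle_middle(layers, 2, 10, rng), x0)
print(f"relative output change after shuffling layers 2-9: {rel_diff(baseline, shuffled):.4f}")
```

In this toy, each residual update is small relative to `x`, so reordering two layers changes the output only at second order in the update size. That offers one intuition for why shuffling can be tolerated. It is not a claim about what the paper measures on real LLMs.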

*Leveraging the true depth of LLMs* by Ramón Calvo, Daniele Paliotta, Matteo Pagliardini, François Fleuret. Based on the observation that shuffling the middle layers of an LLM has little impact on its performance, they propose to parallelize the execution of those layers in pairs. arxiv.org/abs/2502.02790
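One possible reading of "parallelize their execution in pairs" is sketched here, under stated assumptions. Two consecutive middle layers receive the same input and their residual updates are added. In the sequential version, the second layer consumes the first layer's output instead. `run_paired`, the toy `make_layer`, and the choice of layers 2 to 9 are hypothetical illustrations, not the paper's code.

```python
import math
import random
from concurrent.futures import ThreadPoolExecutor

# Toy residual "layer" (assumption, not a real transformer block): f(x) = tanh(W x).
def make_layer(dim, scale, rng):
    w = [[rng.gauss(0.0, scale) for _ in range(dim)] for _ in range(dim)]

    def f(x):
        return [math.tanh(sum(wij * xj for wij, xj in zip(row, x))) for row in w]

    return f


def run_sequential(layers, x):
    """Standard stack: each layer sees the output of the previous one."""
    for f in layers:
        x = [xi + di for xi, di in zip(x, f(x))]
    return x


def run_paired(layers, x, start, end, pool):
    """Run layers[start:end] as parallel pairs; everything else runs sequentially.

    For each pair (f_a, f_b), both layers read the SAME input x and their
    residual updates are summed: x <- x + f_a(x) + f_b(x).
    Sequentially it would be x + f_a(x) + f_b(x + f_a(x)).
    """
    if (end - start) % 2 != 0:
        raise ValueError("the parallel range must contain an even number of layers")
    x = run_sequential(layers[:start], x)
    for i in range(start, end, 2):
        # The two calls do not depend on each other, so they can be submitted together.
        fut_a = pool.submit(layers[i], x)
        fut_b = pool.submit(layers[i + 1], x)
        da, db = fut_a.result(), fut_b.result()
        x = [xi + ai + bi for xi, ai, bi in zip(x, da, db)]
    return run_sequential(layers[end:], x)


def rel_diff(a, b):
    """Relative L2 distance ||a - b|| / ||a||."""
    num = math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))
    return num / math.sqrt(sum(ai * ai for ai in a))


rng = random.Random(0)
layers = [make_layer(8, 0.05, rng) for _ in range(12)]
x0 = [rng.gauss(0.0, 1.0) for _ in range(8)]

with ThreadPoolExecutor(max_workers=2) as pool:
    seq = run_sequential(layers, x0)
    par = run_paired(layers, x0, 2, 10, pool)  # 4 pairs: 8 sequential steps instead of 12
print(f"relative output change with layers 2-9 paired: {rel_diff(seq, par):.4f}")
```

Python threads are limited by the GIL, so the thread pool here mainly marks which calls are independent. A real speedup on accelerators would come from executing the two layers' work concurrently (for example, batched into wider operations), which this sketch does not attempt.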