Crunchy Data

@crunchydata

Trusted Open Source PostgreSQL and Enterprise PostgreSQL Support, Technology and Training

ID: 1657873424

http://www.crunchydata.com 09-08-2013 14:26:12

3.3K Tweets

6.6K Followers

2.2K Following

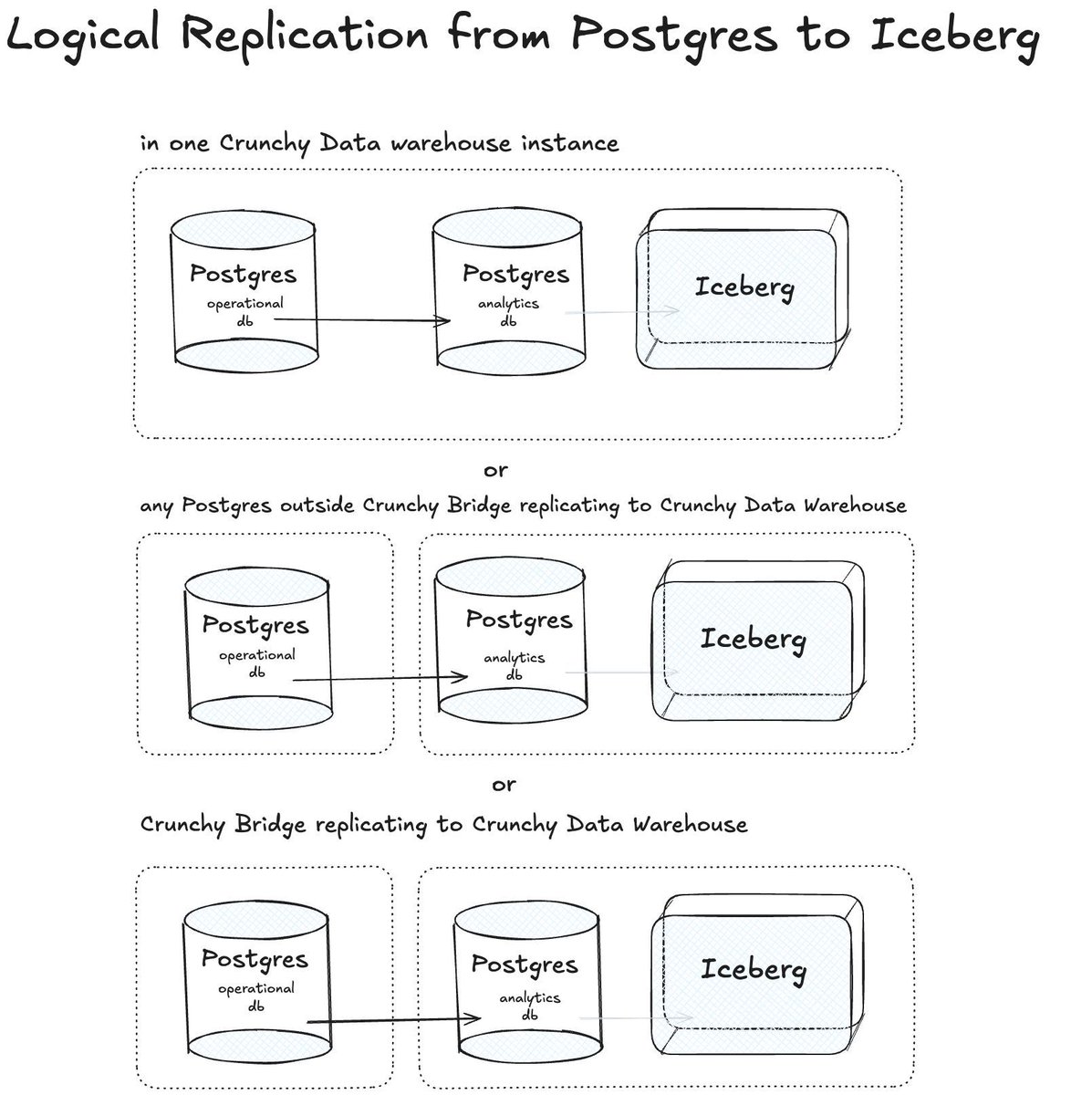

Looking for an archiving solution with long-term retention for analytics? Craig Kerstiens - Finger lime evangelist has the recipe for success: combine Postgres partitioning with Iceberg replication. 1 - Partition your high-throughput data; this is ideal for performance and management anyway. 2 -
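Step 1 usually means declarative range partitioning on a timestamp column, with one partition per time window. The sketch below assumes a hypothetical parent table `events` declared with `PARTITION BY RANGE (created_at)` and generates monthly `CREATE TABLE ... PARTITION OF ... FOR VALUES FROM ... TO ...` statements. The table and column names and the monthly granularity are illustrative assumptions. The Iceberg replication step is not shown.

```python
from datetime import date

# Hypothetical parent table; the name and columns are assumptions for illustration.
PARENT_DDL = (
    "CREATE TABLE events (id bigint, created_at timestamptz NOT NULL, payload jsonb) "
    "PARTITION BY RANGE (created_at);"
)


def month_start(d: date) -> date:
    """Return the first day of the month containing d."""
    return d.replace(day=1)


def next_month(d: date) -> date:
    """Return the first day of the month after d (d must be a month start)."""
    return date(d.year + d.month // 12, d.month % 12 + 1, 1)


def monthly_partition_ddl(parent: str, start: date, months: int) -> list[str]:
    """Build one CREATE TABLE ... PARTITION OF statement per month.

    Postgres range bounds are inclusive at FROM and exclusive at TO,
    so consecutive partitions share a boundary without overlapping.
    """
    statements = []
    lower = month_start(start)
    for _ in range(months):
        upper = next_month(lower)
        name = f"{parent}_{lower:%Y_%m}"  # e.g. events_2024_11
        statements.append(
            f"CREATE TABLE {name} PARTITION OF {parent} "
            f"FOR VALUES FROM ('{lower.isoformat()}') TO ('{upper.isoformat()}');"
        )
        lower = upper
    return statements


if __name__ == "__main__":
    print(PARENT_DDL)
    for stmt in monthly_partition_ddl("events", date(2024, 11, 15), 3):
        print(stmt)
```

Calling `monthly_partition_ddl("events", date(2024, 11, 15), 3)` yields partitions `events_2024_11`, `events_2024_12`, and `events_2025_01`. The year rollover is handled by `next_month`. Once a month ages out of the hot window, its partition can be detached with `ALTER TABLE ... DETACH PARTITION` and handed off to whatever archive process you use.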

Choosing an index for a very large data set? Paul Ramsey digs into how to evaluate BRIN vs. B-tree. tl;dr: The BRIN index can be a useful alternative to the B-tree in specific cases: - For tables with an "insert-only" data pattern and a correlated column - For use cases with very
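The "correlated column" condition matters because a BRIN index stores only a small summary (for ordered types, the min and max) for each range of consecutive table blocks. A query must then visit every range whose summary overlaps the search interval. The sketch below is a simplified model, not Postgres's implementation: it summarizes chunks of rows rather than pages (real BRIN uses `pages_per_range`, default 128). It then counts how many ranges a query has to scan when values follow insertion order versus when they are shuffled.

```python
import random


def build_brin(values: list[int], rows_per_range: int) -> list[tuple[int, int]]:
    """Summarize consecutive chunks of rows as (min, max) pairs, like BRIN block ranges."""
    chunks = (values[i:i + rows_per_range] for i in range(0, len(values), rows_per_range))
    return [(min(chunk), max(chunk)) for chunk in chunks]


def ranges_to_scan(summary: list[tuple[int, int]], lo: int, hi: int) -> int:
    """Count ranges whose [min, max] overlaps the query interval [lo, hi]."""
    return sum(1 for mn, mx in summary if mn <= hi and mx >= lo)


if __name__ == "__main__":
    n, rows_per_range = 100_000, 1_000

    # Insert-only pattern: the value grows with physical position (e.g. a timestamp).
    correlated = list(range(n))

    # Same values with no relation to physical position.
    shuffled = correlated[:]
    random.Random(42).shuffle(shuffled)

    lo, hi = 50_000, 50_999
    for label, data in (("correlated", correlated), ("shuffled", shuffled)):
        summary = build_brin(data, rows_per_range)
        print(f"{label}: scan {ranges_to_scan(summary, lo, hi)} of {len(summary)} ranges")
```

With correlated data the query touches a single range. With shuffled data essentially every range's min/max spans the interval, so the index prunes almost nothing. In real Postgres the candidate pages are then rechecked row by row, which is why BRIN stays tiny but pays off only when the physical order tracks the indexed value.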

This week Karen Jex will be at PyCon Italia in Bologna talking about Postgres. She's giving a talk on table partitioning and best practices. 🐘 🐍