Christian Langreiter

@chl

how hard could it be?

ID: 801282

http://langreiter.com 28-02-2007 16:37:34

4.4K Tweets

1.1K Followers

6.6K Following

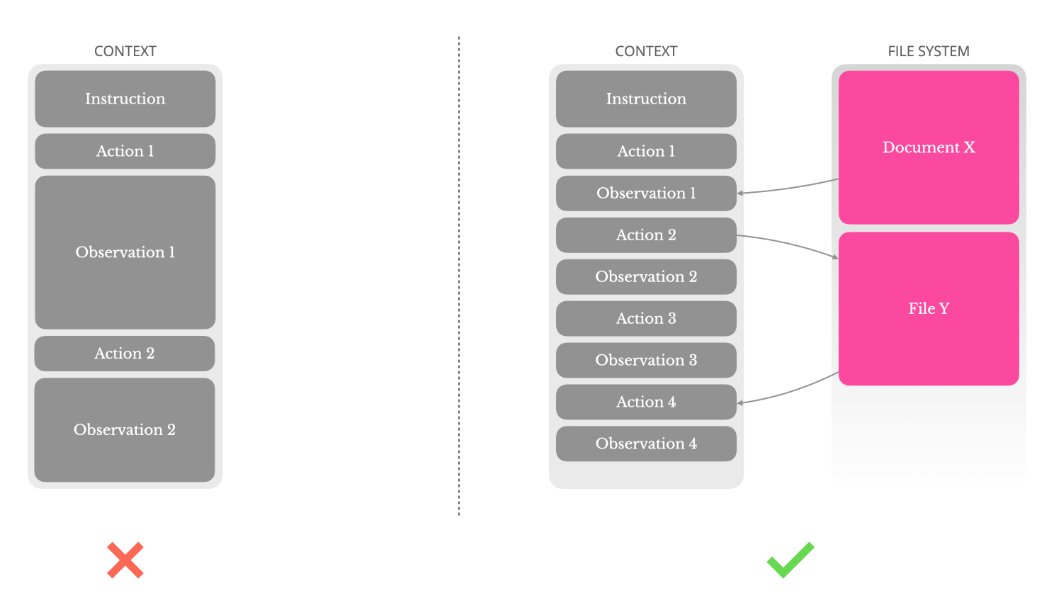

The race for LLM "cognitive core" - a few billion param model that maximally sacrifices encyclopedic knowledge for capability. It lives always-on and by default on every computer as the kernel of LLM personal computing.

Its features are slowly crystalizing:

- Natively multimodal