Chenliang Xu

@chenliangxu

Associate Professor of Computer Science at the University of Rochester

ID: 192459149

https://www.cs.rochester.edu/~cxu22/ 19-09-2010 06:02:00

22 Tweets

192 Followers

150 Following

Zooming Slow-Mo: Fast and Accurate One-Stage Space-Time Video Super-Resolution deepai.org/publication/zo… by Xiaoyu Xiang et al. including Yapeng Tian, Yulun Zhang, @chenliangxu #Interpolation #ComputerVision

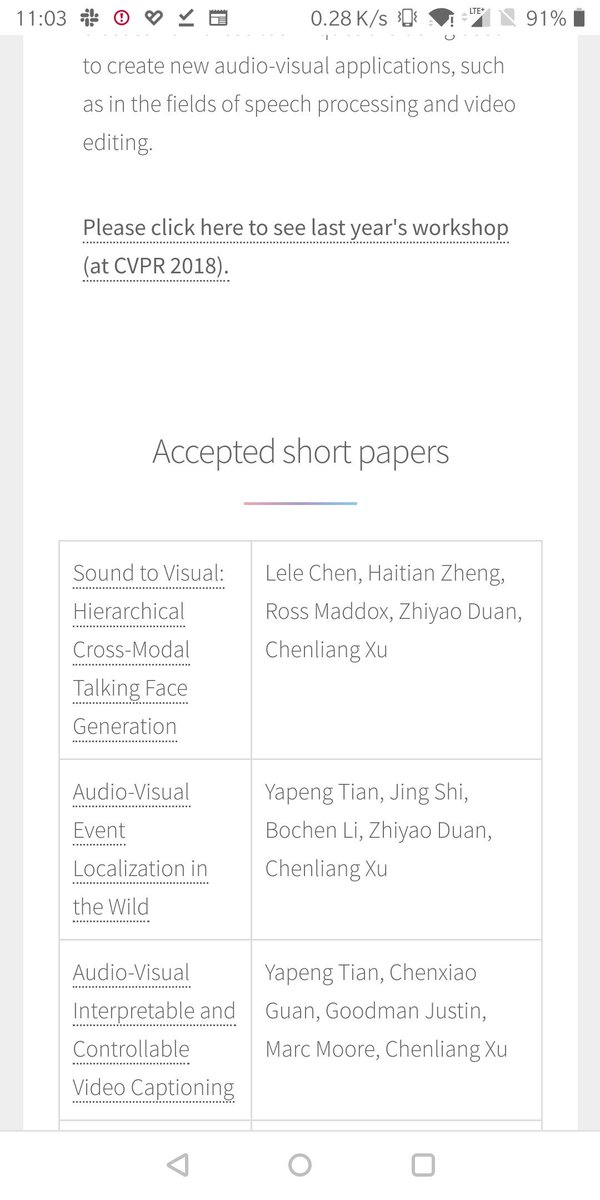

Want to make computers that can see *and* hear? Come to the CVPR Sight and Sound workshop today! Schedule: sightsound.org Invited talks by: Justin Salamon, Chenliang Xu, Kristen Grauman, Dima Damen, Chuang Gan, John Hershey, Efthymios Tzinis, and James Traer

Feel proud to hood three PhDs at a time. From left to right: Lele Chen (Oppo US Research), Yapeng Tian (UTD TT faculty), Jing Shi (Adobe Research). Will meet again at Vancouver CVPR~

At #Neurips2023 next week to talk about our paper/AV-NeRF and all things AI, Audio/Speech & Multimodal😀 AV-NeRF: Learning Neural Fields for Real-World Audio-Visual Scene Synthesis. Demo: t.ly/UuXkG Paper: t.ly/0oIhV (w. Susan, Chao, Yapeng Tian, Chenliang Xu)