Ting-Yun Chang

@charlottetyc

PhD student @CSatUSC @nlp_usc

ID: 1432782439944908801

https://terarachang.github.io/

31-08-2021 19:09:08

90 Tweets

481 Followers

365 Following

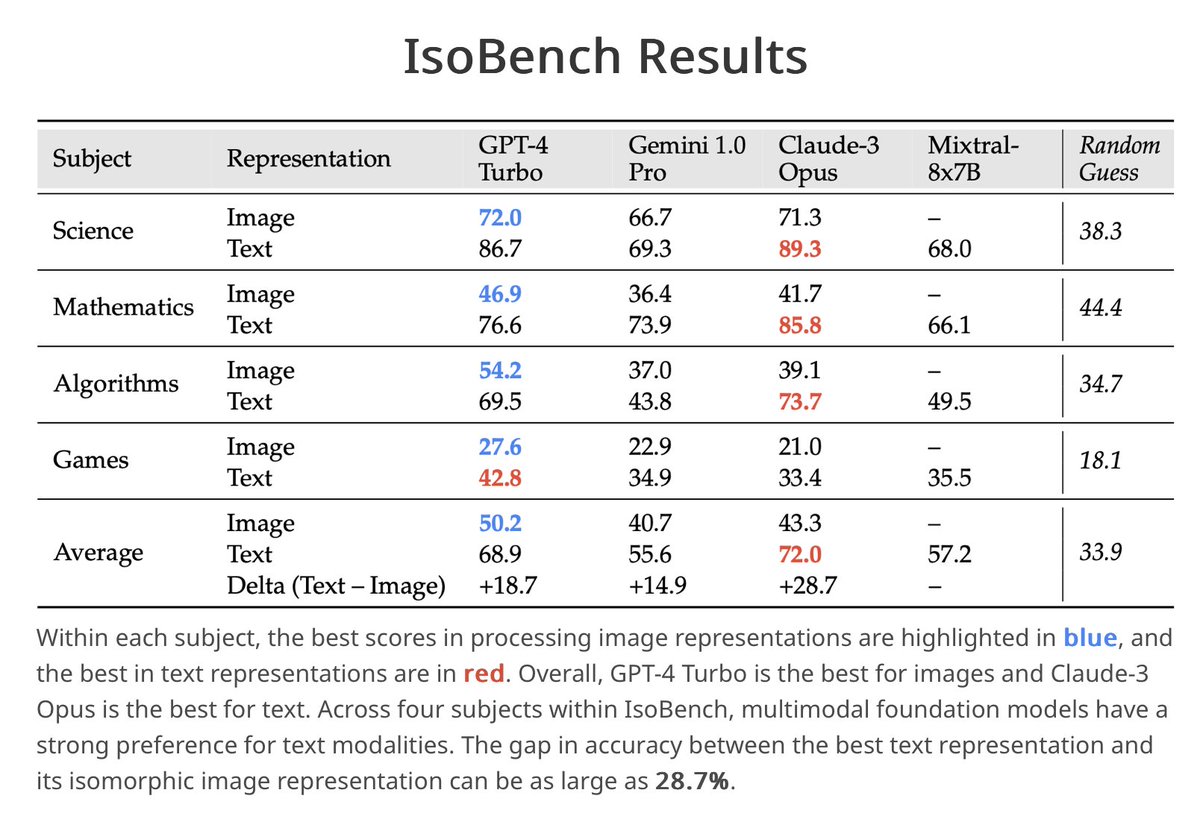

It's cool to see Google DeepMind's new research show findings similar to ours from back in April. IsoBench (isobench.github.io, accepted to the Conference on Language Modeling 2024) was curated to show the performance gap across modalities and multimodal models' preference for the text modality.

Thank you so much Spectrum News 1 SoCal Jas Kang for featuring our work on OATH-Frames: Characterizing Online Attitudes towards Homelessness with LLM Assistants👇 🖥️📈 oath-frames-dashboard.streamlit.app 🗞️ spectrumnews1.com/ca/southern-ca… USC Thomas Lord Department of Computer Science USC NLP USC Social Work USC Center for AI in Society USC Viterbi School Swabha Swayamdipta

Come by Poster Session A tomorrow to hear Sayan Ghosh tell you why your preference eval is probably broken (and how you can fix it!)

I'll be at #NeurIPS2024! My group has papers analyzing how LLMs use Fourier Features for arithmetic (Tianyi Zhou) and how TFs learn higher-order optimization for ICL (Deqing Fu), plus workshop papers on backdoor detection (Jun Yan) and LLMs + PDDL (Wang Bill Zhu)

I'll present a poster for Lifelong ICL and Task Haystack at #NeurIPS2024! ⏰ Wednesday 11am-2pm 📍 East Exhibit Hall A-C #2802 📜 arxiv.org/abs/2407.16695 My co-first author Xiaoyue Xu is applying to PhD programs and I am looking for jobs in industry! Happy to connect at NeurIPS!

For this week’s NLP Seminar, we are thrilled to host Yun-Nung Vivian Chen to talk about Optimizing Interaction and Intelligence — Multi-Agent Simulation and Collaboration for Personalized Marketing and Advanced Reasoning! When: 3/6 Thurs 11am PT Non-Stanford affiliates registration form

At NAACL HLT 2025 this week! I’ll be presenting our work on LLM domain induction with Jesse Thomason on Thu (5/1) at 4pm in Hall 3, Section I. Would love to connect and chat about LLM planning, reasoning, AI4Science, multimodal stuff, or anything else. Feel free to DM!