Charlie Ruan

@charlie_ruan

MSCS @CSDatCMU | prev @CornellCIS

ID: 2733746502

https://www.charlieruan.com

15-08-2014 05:49:35

125 Tweets

569 Followers

347 Following

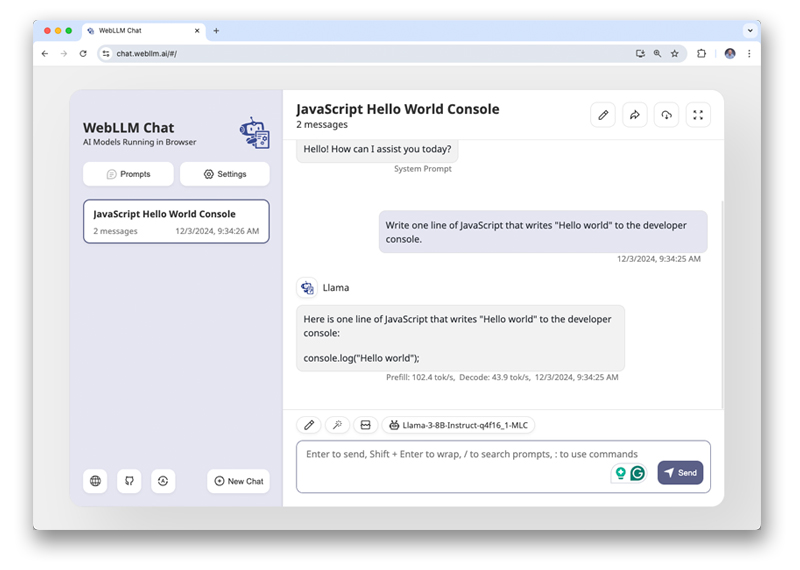

Build private web apps with WebLLM. Google Developer Expert Christian Liebel (🦋 @christianliebel.com) walks you through adding WebLLM to a to-do list app, enabling local LLM inference with WebAssembly and WebGPU. See how it works → goo.gle/40laHSa

Huge thank you to NVIDIA Data Center for gifting a brand new #NVIDIADGX B200 to CMU’s Catalyst Research Group! This AI supercomputing system will afford Catalyst the ability to run and test their work on a world-class unified AI platform.

Thanks NVIDIA Data Center for the DGX B200 machine for the CMU Catalyst group! I'm perhaps already a bit too enthralled by it in the photos...

Thank you to @NVIDIA for gifting our Catalyst Research Group the latest NVIDIA DGX B200! The B200 platform will greatly accelerate our research in building next-generation ML systems.🚀 #NVIDIADGX #DGXB200 NVIDIA Data Center

Really thrilled to receive #NVIDIADGX B200 from NVIDIA. Looking forward to cooking with the beast. Together with an amazing team at the CMU Catalyst group (Beidi Chen, Tim Dettmers, Zhihao Jia, Zico Kolter), we are looking to innovate across the entire stack, from model to instructions.