Can Demircan

@can_demircann

phd student @cpilab working on machine learning/cognitive science

ID: 1623247942327930880

https://candemircan.github.io

08-02-2023 09:10:53

32 Tweets

95 Followers

261 Following

Come work with Can Demircan and me on semantic label smoothing (it's cool, I promise)!

Excited to announce Centaur -- the first foundation model of human cognition. Centaur can predict and simulate human behavior in any experiment expressible in natural language. You can readily download the model from Hugging Face and test it yourself: huggingface.co/marcelbinz/Lla…

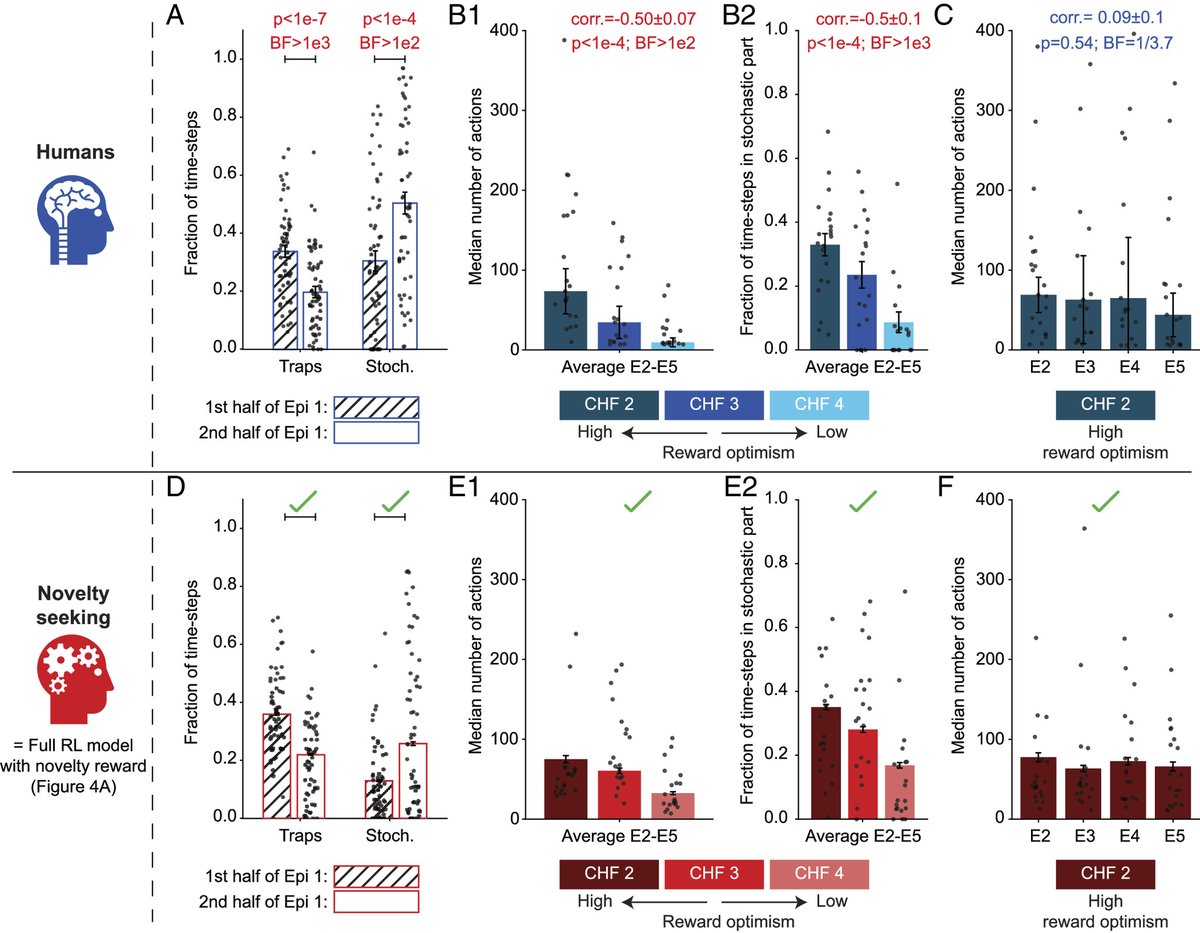

🚨Preprint alert🚨 In an amazing collaboration with Gruaz Lucas, Sophia Becker, & J Brea, we explored a major puzzle in neuroscience & psychology: *What are the merits of curiosity⁉️* osf.io/preprints/psya… 1/7

Happy to say we made the cover too! (Elif Akata, Helmholtz Institute for Human-Centered AI)

🚀 We are hiring! 🚀 🔍 Join us as a Postdoctoral Researcher (fully-funded) at the Helmholtz Institute for Human-Centered AI in Munich. Helmholtz Munich | @HelmholtzMunich Helmholtz AI ELLIS