Carlos Lassance

@cadurosar

MTS @ Cohere, constantly trying to make Information Retrieval work better, while making mistakes in the process.

ID: 969183405077401600

http://cadurosar.github.io 01-03-2018 12:11:45

256 Tweets

464 Followers

122 Following

Welcome Cohere For AI Command-R! The top trending among over 500k open-access models! 🚀 huggingface.co/CohereForAI/c4…

Just as splade-v3 comes out (huggingface.co/naver/splade-v3), splade++ achieves 1M monthly downloads again. Pretty cool seeing this happen! Always curious to know what people are doing with it, and congrats to the NAVER LABS Europe team: @thibault_formal, Stéphane Clinchant, HerveDejean

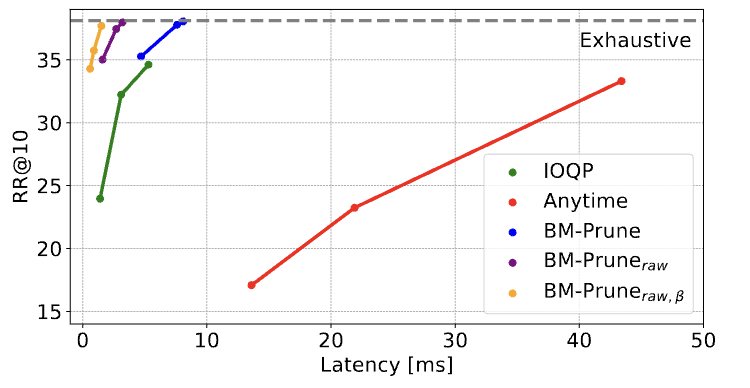

People are asking me what to expect from "Faster Learned Sparse Retrieval with Block-Max Pruning" with Nicola Tonellotto and Torsten Suel: 10x faster safe retrieval compared to MaxScore, and you can check the approximate retrieval trade-offs yourself on naver/splade-cocondenser-ensembledistil

Aya-Expanse, the strongest open weights multilingual LLM, was just released by Cohere For AI. It beats Llama 70B on multilingual tasks, while being half the size and twice the speed.

Excited to share that Provence is accepted to #ICLR2025! Provence is a method for training an efficient & high-performing context pruner for #RAG, either standalone or combined with a reranker: huggingface.co/blog/nadiinchi… w/ @thibault_formal, Vassilina Nikoulina, Stéphane Clinchant, NAVER LABS Europe