Walid BOUSSELHAM

@bousselhamwalid

PhD Student at Bonn University |

Computer Vision, Multi-modal learning and Zero-shot adaptation.

Prev. @NUSingapore & @ENSTAParis

Visiting MiT for the summer24

ID: 1302975089802178560

http://walidbousselham.com/ 07-09-2020 14:21:00

106 Tweets

104 Followers

212 Following

Monika Wysoczańska Super cool work! We found something similar to the idea of pooling for GEM … github.com/WalBouss/GEM … might be interesting to think about how to merge… overall it looks like an exciting topic! Thanks for sharing 🤩!

VLMs have a resolution problem, which prevents them from finding small details in large images. In this Hugging Face community post, I discuss the ways to solve it and describe the details of MC-LLaVA architecture: huggingface.co/blog/visherati…

Paper accepted to #CVPR2024! Grounding Everything: Emerging Localization Properties in Vision-Language Transformers Paper: arxiv.org/abs/2312.00878 Demo: huggingface.co/spaces/WalidBo… Code: github.com/WalBouss/GEM With Walid BOUSSELHAM, Felix Petersen, Hilde Kuehne

I had so much fun working on LeGrad with Walid BOUSSELHAM, Hendrik Strobelt, and Hilde Kuehne! Demo it here 💻 huggingface.co/spaces/WalidBo…

Join our community-led Geo-Regional Asia Group on Monday, May 27th as they welcome Walid BOUSSELHAM, PhD student at Bonn University, for a presentation on "An Explainability Method for Vision Transformers via Feature Formation Sensitivity." Learn more: cohere.com/events/c4ai-Wa…

Be sure to tune in Monday for our community-led event with speaker Walid BOUSSELHAM, who will be presenting "An Explainability Method for Vision Transformers via Feature Formation Sensitivity." 💻

Today, we’re demonstrating GEM💎: Grounding Everything (in particular grounding VLM transformers) at Demo #13 at #CVPR2024 Walid BOUSSELHAM Hilde Kuehne Vittorio Ferrari github.com/WalBouss/GEM

🚀We release the dataset, code, models for our "HowToCaption: Prompting LLMs to Transform Video Annotations at Scale," presented at #ECCV2024!🎥📚 🔗Github: github.com/ninatu/howtoca… 🔗arXiv: arxiv.org/abs/2310.04900 Anna Kukleva Xudong Hong will go to #NAACL2024 Christian Rupprecht Bernt Schiele Hilde Kuehne

![fly51fly (@fly51fly) on Twitter photo [CV] EmerDiff: Emerging Pixel-level Semantic Knowledge in Diffusion Models

arxiv.org/abs/2401.11739

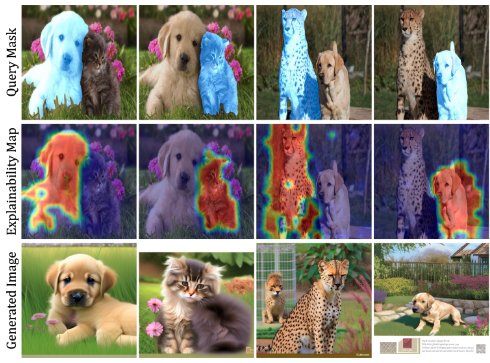

This paper presents a method for image segmentation using diffusion models. By extracting semantic knowledge from a pre-trained diffusion model, fine-grained segmentation maps [CV] EmerDiff: Emerging Pixel-level Semantic Knowledge in Diffusion Models

arxiv.org/abs/2401.11739

This paper presents a method for image segmentation using diffusion models. By extracting semantic knowledge from a pre-trained diffusion model, fine-grained segmentation maps](https://pbs.twimg.com/media/GEj6c7vbMAAJehb.jpg)