Yonatan Belinkov

@boknilev

Assistant professor of computer science @TechnionLive. #NLProc

ID: 554869994

http://www.cs.technion.ac.il/~belinkov 16-04-2012 04:54:07

2.2K Tweets

4.4K Followers

1.1K Following

⏰ Only 9 days away! Join us at the Conference on Language Modeling on October 10 for the first workshop on the application of LLM explainability to reasoning and planning.

Featuring:
📑 20 poster presentations
🎤 9 distinguished speakers

View our schedule at tinyurl.com/xllm-workshop.

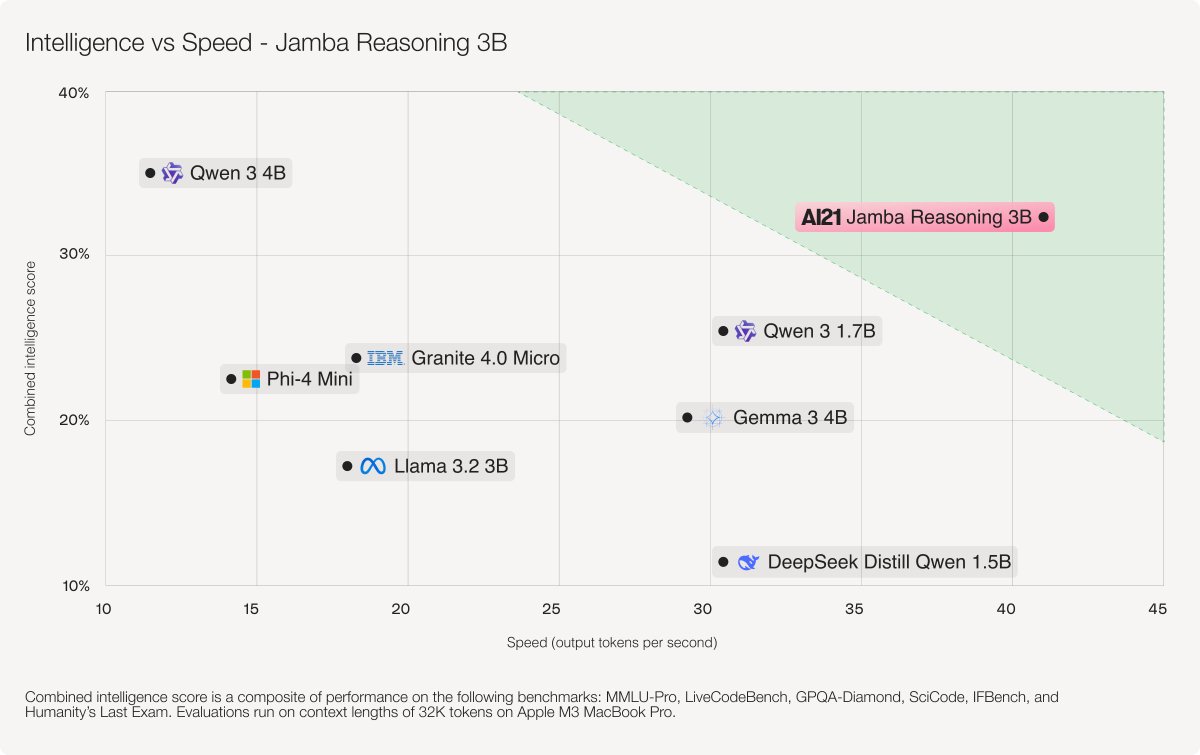

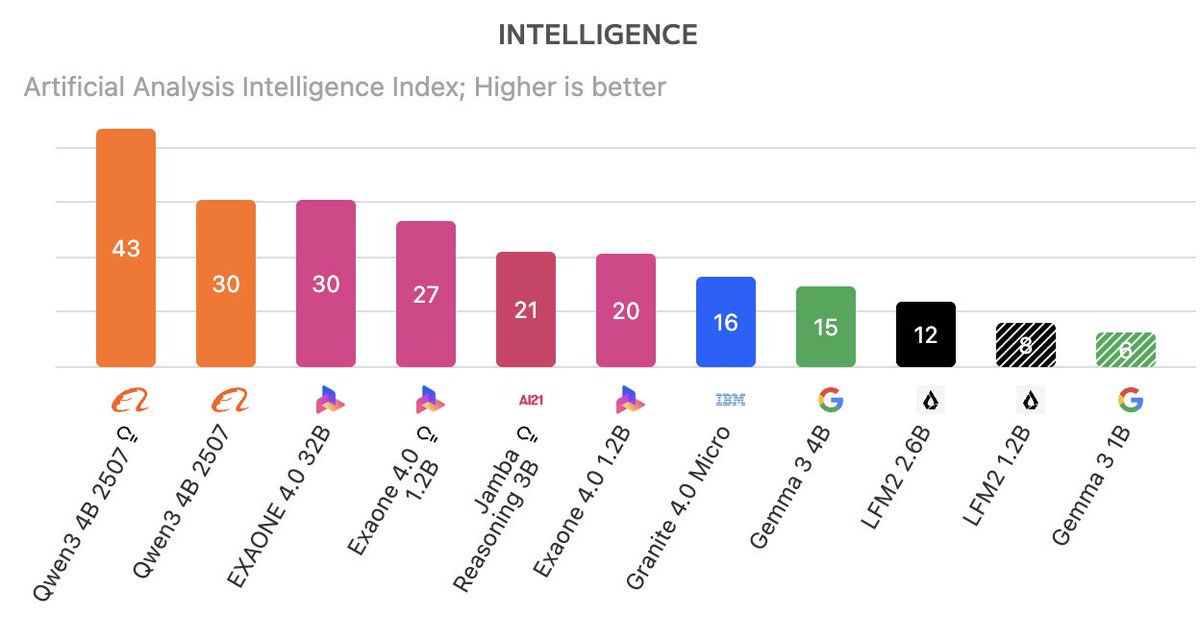

🧠 Jamba Reasoning 3B leads tiny reasoning models (Artificial Analysis).
🥇 #1 on #IFBench (52%) for instruction following
📈 21 on the Artificial Analysis Intelligence Index
👉 Charts by Artificial Analysis: artificialanalysis.ai/models/open-so…

Cornell University is recruiting for multiple postdoctoral positions in AI as part of two programs: Empire AI Fellows and Foundational AI Fellows. Positions are available in NYC and Ithaca. The deadline for full consideration is Nov 20, 2025! academicjobsonline.org/ajo/jobs/30971