Benjamin Minixhofer

@bminixhofer

PhD Student @CambridgeLTL

ID: 3492830254

http://bmin.ai 30-08-2015 16:29:31

414 Tweets

1.1K Followers

364 Following

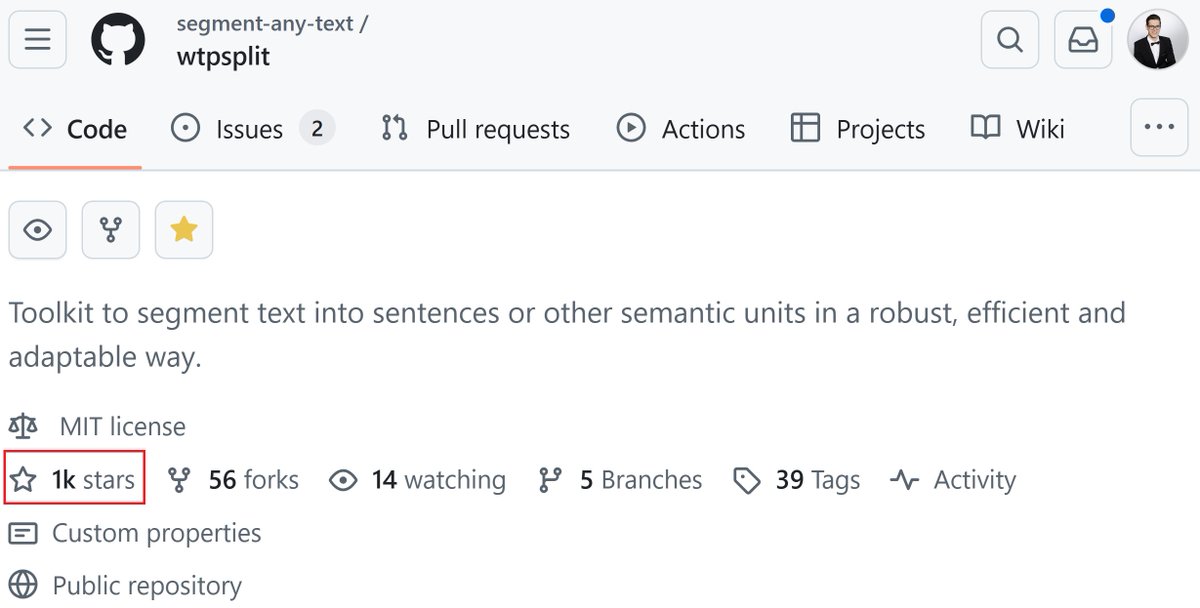

A couple of days ago, Markus Frohmann released a new version of wtpsplit that speeds up sentence segmentation by 4x-20x, thanks to a one-line change. The beauty/horrors of Python. github.com/segment-any-te…

🚨 NEW WORKSHOP ALERT 🚨 We're thrilled to announce the first-ever Tokenization Workshop (TokShop) at #ICML2025 ICML Conference! 🎉 Submissions are open for work on tokenization across all areas of machine learning. 📅 Submission deadline: May 30, 2025 🔗 tokenization-workshop.github.io

Delighted there will finally be a workshop devoted to tokenization, a critical topic for LLMs and beyond! 🎉 Join us for the inaugural edition of TokShop at #ICML2025 ICML Conference in Vancouver this summer! 🤗

To appear at #NAACL2025 (2 orals, 1 poster)! Coleman Haley: which classes of words are most grounded on (perceptual proxies of) meaning? Uri Berger: how do image descriptions vary across languages and cultures? Hanxu Hu: can LLMs follow sequential instructions? 🧵below

Excited to see our study on linguistic generalization in LLMs featured by University of Oxford News!