Berivan Isik

@berivanisik

Research scientist @GoogleAI. Efficient & trustworthy AI, LLMs, Gemini data & eval | prev: PhD @Stanford @StanfordAILab

ID: 2701532126

https://sites.google.com/view/berivanisik

Joined 02-08-2014 19:11:20

299 Tweets

10.1K Followers

1.1K Following

Our QuEST paper was selected for an oral presentation at the Sparsity in LLMs Workshop at ICLR 2025! QuEST is the first algorithm to achieve Pareto-optimal LLM training with 4-bit weights and activations, and it can even train accurate 1-bit LLMs. Paper: arxiv.org/abs/2502.05003 Code: github.com/IST-DASLab/QuE…

Happy to announce our ICML 2025 workshop: "The Next Wave of On-Device Learning for Foundation Models" Details👇 1/3

Super excited for our upcoming ICML Conference workshop. Stay tuned for updates and follow On-Device Learning for Foundation Models @ICML 25!

The Sparse LLM workshop will run on Sunday with two poster sessions, a mentoring session, four spotlight talks, four invited talks, and a panel session. We'll host an amazing lineup of researchers: Dan Alistarh, Vithu Thangarasa, Yuandong Tian, Amir Yazdan, Gintare Karolina Dziugaite, Olivia Hsu, Pavlo Molchanov, and Yang Yu.

We welcome submissions to our ICML Conference workshop on efficient foundation models, on-device learning, and distributed learning. Deadline: May 23rd. Steve Laskaridis, Samuel Horváth, Berivan Isik, Peter Kairouz, Christina Giannoula, bilge, Angelos Katharopoulos, Martin Takac, nic lane

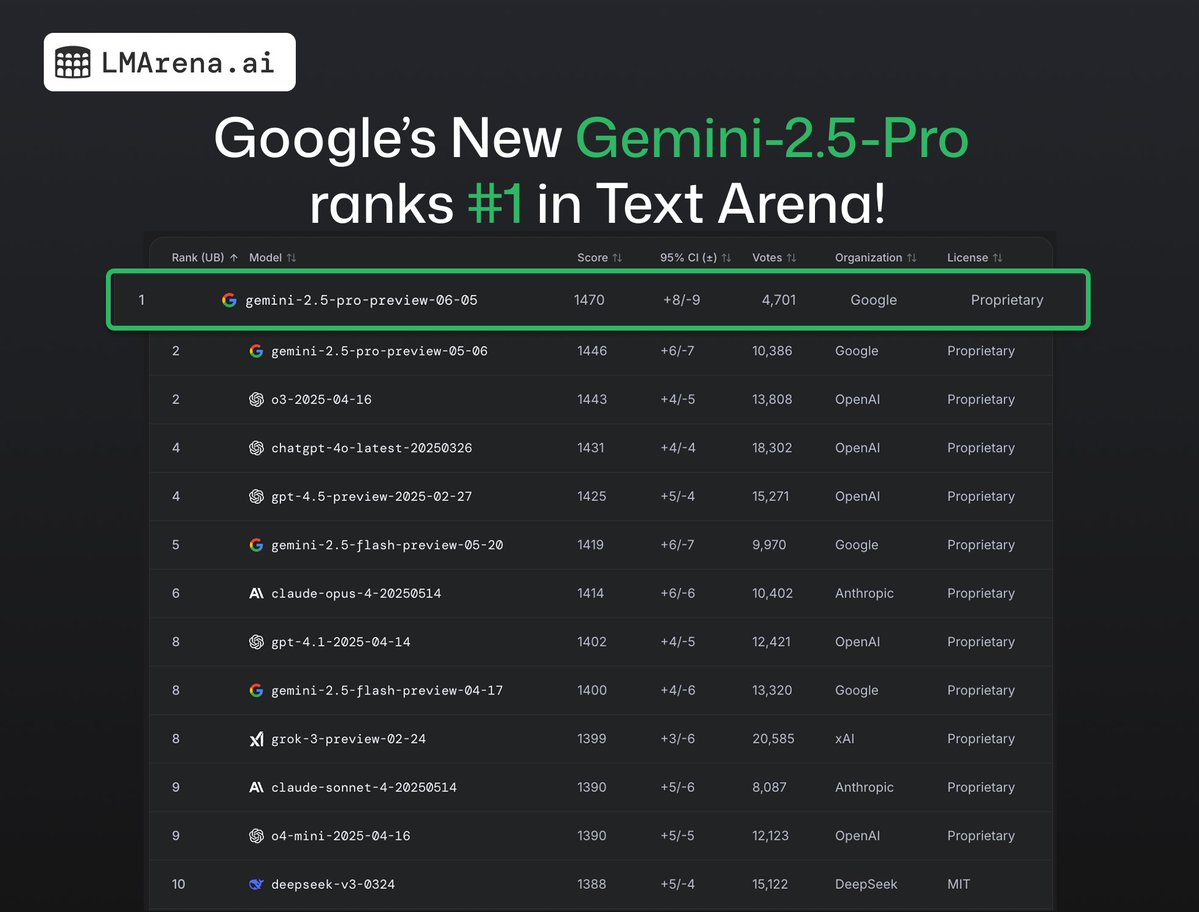

🚨 Breaking: The new Gemini-2.5-Pro (06-05) takes the #1 spot across all Arenas again! 🥇 #1 in Text, Vision, and WebDev 🥇 #1 in the Hard, Coding, Math, Creative, Multi-turn, Instruction Following, and Long Queries categories. Huge congrats, Google DeepMind!