Ben Wu @ICLR

@benwu_ml

PhD Student @SheffieldNLP Prev @Cambridge_Uni

Mechanistic Interpretability and LLM uncertainty

ID: 1613198311510446080

https://bpwu1.github.io/

Joined 11-01-2023 15:37:08

11 Tweets

95 Followers

121 Following

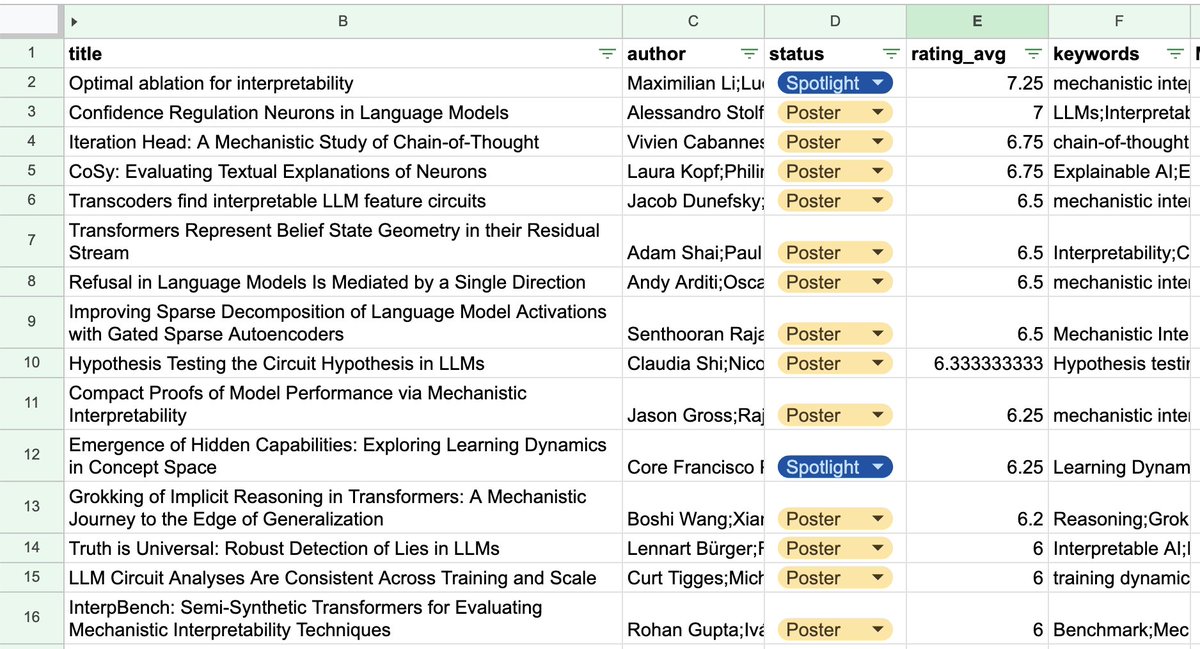

New paper w/ Ben Wu @ICLR and Neel Nanda! LLMs don’t just output the next token, they also output confidence. How is this computed? We find two key neuron families: entropy neurons exploit the final LayerNorm scale to change entropy, and token frequency neurons boost logits in proportion to token frequency 🧵
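To make the token frequency mechanism concrete, here is a toy sketch in Python with NumPy. It assumes (an assumption for illustration, not a detail stated in the post) that the boost added to each logit is proportional to the token's log unigram frequency. The helper `frequency_boost`, the five-token vocabulary and its frequencies are all hypothetical. Under that assumption each probability is multiplied by `freq ** alpha` before renormalizing, so frequent tokens gain probability mass and rare ones lose it.

```python
import numpy as np

def softmax(z):
    # Numerically stable softmax over a 1-D array of logits
    e = np.exp(z - z.max())
    return e / e.sum()

def frequency_boost(logits, unigram_freq, alpha):
    # Hypothetical helper: add alpha * log(frequency) to every logit.
    # Assumes the boost scales with LOG unigram frequency (sketch assumption),
    # which multiplies each softmax probability by freq ** alpha.
    return logits + alpha * np.log(unigram_freq)

# Toy vocabulary of 5 tokens with made-up unigram frequencies (they sum to 1)
unigram_freq = np.array([0.50, 0.25, 0.15, 0.07, 0.03])
logits = np.array([0.2, 1.5, -0.3, 2.0, 0.9])

for alpha in (0.0, 0.5, 1.0):
    p = softmax(frequency_boost(logits, unigram_freq, alpha))
    print(f"alpha={alpha}: p(most frequent)={p[0]:.3f}, p(rarest)={p[-1]:.3f}")
```

With all-zero logits and `alpha = 1.0` the output is exactly the unigram distribution, which is one way to read this direction as pulling predictions toward token frequency; a negative `alpha` would push them away from it.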

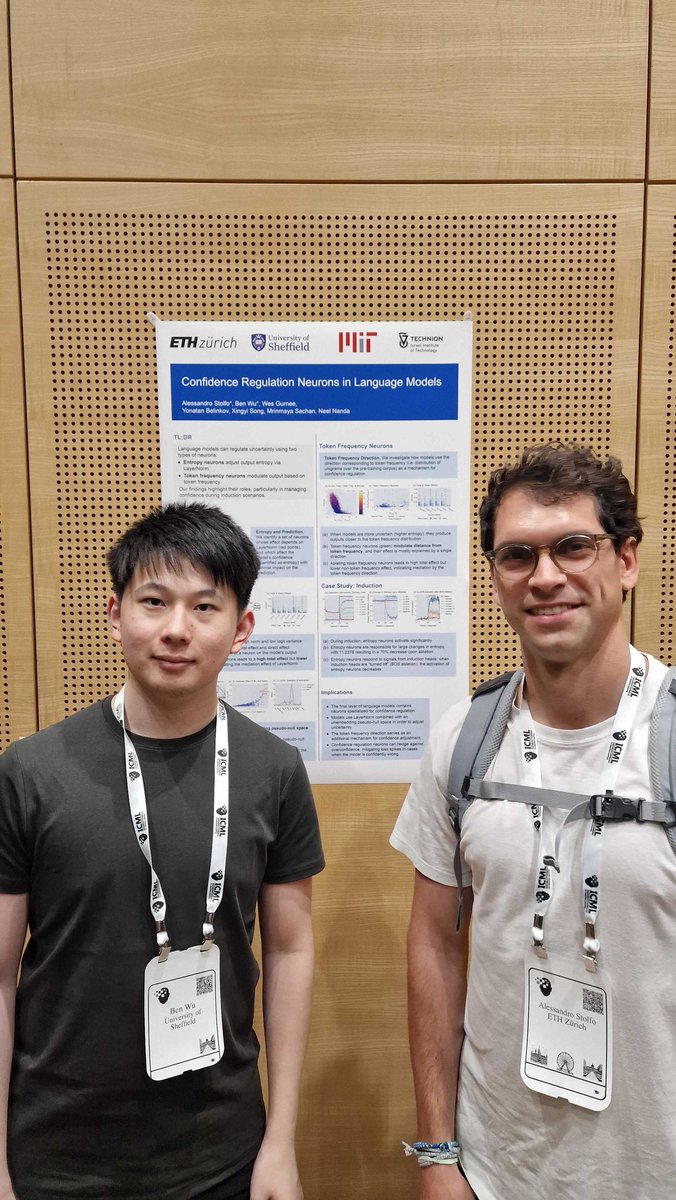

"Confidence Regulation Neurons in Language Models" at #ICML2024 Mech Interp Workshop! w/ amazing co-author Alessandro Stolfo

Our paper on individual neurons that regulate an LLM's confidence was accepted to NeurIPS! Great work by Alessandro Stolfo and Ben Wu @ICLR. Check it out if you want to learn about wild mechanisms that productively exploit LayerNorm's non-linearity and the null space of the unembedding!
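The idea of exploiting LayerNorm's non-linearity together with the null space of the unembedding can be illustrated with a small NumPy toy. Everything here is hypothetical: random weights, a final LayerNorm with its learned gain and bias omitted, and a made-up neuron output direction `v` constructed so that `v @ W_U == 0`. In this sketch the neuron adds nothing to the logits directly, but it inflates the residual stream's norm, so LayerNorm shrinks every logit by a common factor. That acts like raising the softmax temperature, so entropy goes up. This is a toy reading of the general mechanism, not the paper's analysis code.

```python
import numpy as np

def layer_norm(x, eps=1e-5):
    # Final LayerNorm with learned gain/bias omitted (assumed identity here)
    centered = x - x.mean()
    return centered / np.sqrt((centered ** 2).mean() + eps)

def entropy(logits):
    # Shannon entropy (in nats) of softmax(logits)
    p = np.exp(logits - logits.max())
    p /= p.sum()
    return float(-(p * np.log(p)).sum())

rng = np.random.default_rng(0)
d_model, d_vocab = 16, 6
W_U = rng.normal(size=(d_model, d_vocab))  # toy unembedding, random weights
resid = rng.normal(size=d_model)           # toy final residual stream

# Pick a unit direction v that (1) lies in the null space of the unembedding,
# (2) has zero mean so LayerNorm's centering leaves it intact, and
# (3) is orthogonal to the centered residual, so adding it only grows the norm.
constraints = np.column_stack([W_U, np.ones(d_model), resid - resid.mean()])
_, _, vt = np.linalg.svd(constraints.T)  # rank <= d_vocab + 2 < d_model
v = vt[-1]                               # a unit vector orthogonal to all constraints

def logits_with_neuron(activation):
    # Hypothetical "entropy neuron" writing activation * v into the residual stream
    return layer_norm(resid + activation * v) @ W_U

for a in (0.0, 2.0, 8.0):
    print(f"activation={a}: entropy={entropy(logits_with_neuron(a)):.3f}")
```

Because `v` is invisible to `W_U`, the logits keep the same direction and only their scale changes as the activation grows, which is why the effect shows up purely as a change in confidence.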