Nandan Thakur

@beirmug

PhD @uwaterloo🌲 Efficient IR, likes good evals 🔍 Ex Intern @DbrxMosaicAI @GoogleAI | RA @UKPLab | Author of beir.ai, miracl.ai, TREC-RAG! 🍻

ID: 751326416495517697

https://thakur-nandan.github.io 08-07-2016 08:05:51

1.1K Tweets

2.2K Followers

2.2K Following

Relabeling datasets for Information Retrieval improves NDCG@10 of both embedding models & cross-encoder rerankers. This was already the prevalent belief, but now it's been confirmed. Great job Nandan Thakur, Crystina Zhang, Xueguang Ma & Jimmy Lin

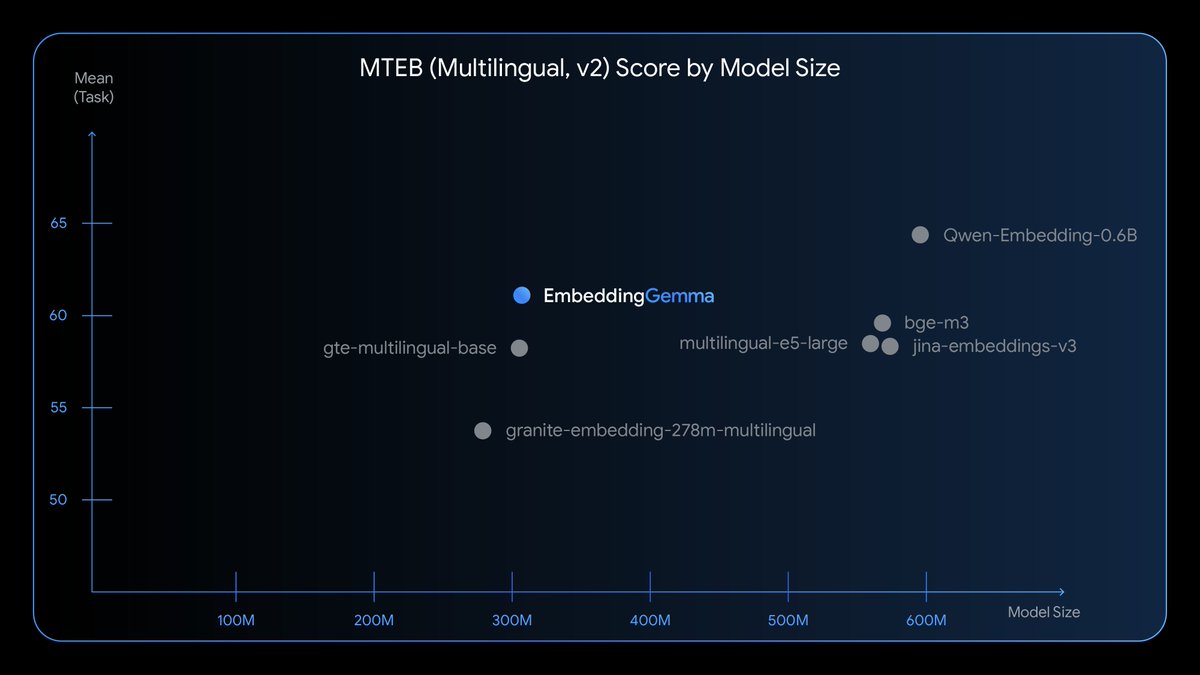

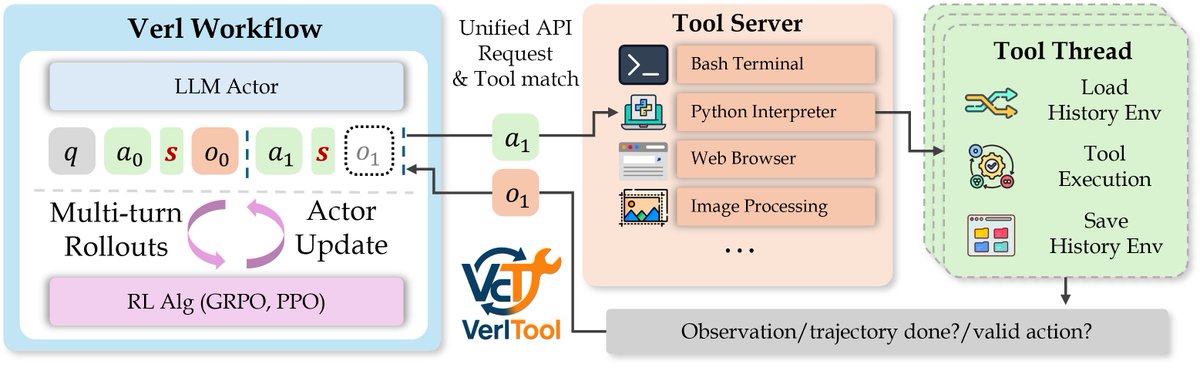
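NDCG@10, the metric cited above, rewards placing relevant documents near the top of the first ten results. Below is a minimal sketch of the common formulation (gain = graded relevance, discount = 1/log2(rank + 1)). Evaluation toolkits such as trec_eval may differ in details, and the qrels and run in the example are made-up toy data.

```python
import math

def dcg_at_k(gains, k=10):
    """Discounted cumulative gain over the top-k gains (index 0 = rank 1)."""
    return sum(g / math.log2(i + 2) for i, g in enumerate(gains[:k]))

def ndcg_at_k(ranked_doc_ids, qrels, k=10):
    """NDCG@k for a single query.

    ranked_doc_ids: doc ids in the order the system returned them.
    qrels: dict mapping doc id -> graded relevance (unjudged docs count as 0).
    """
    gains = [qrels.get(doc_id, 0) for doc_id in ranked_doc_ids]
    # Ideal ranking: all judged documents sorted by relevance, best first.
    ideal = sorted(qrels.values(), reverse=True)
    idcg = dcg_at_k(ideal, k)
    return dcg_at_k(gains, k) / idcg if idcg > 0 else 0.0

# Toy example (hypothetical data): one relevant doc is ranked third instead of second.
run = ["d1", "d3", "d2"]
qrels = {"d1": 1, "d2": 1}
print(round(ndcg_at_k(run, qrels), 4))  # 0.9197
```

Normalizing by the ideal DCG keeps scores in [0, 1], so values can be averaged across queries with different numbers of relevant documents.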

Looks like the kind of benchmark that's so good it feels like a sacrifice for the community to repost it instead of working on it in silence ;D But we need much more of this, so here goes: check out this release from Zijian Chen, Xueguang Ma, Shengyao Zhuang et al.!

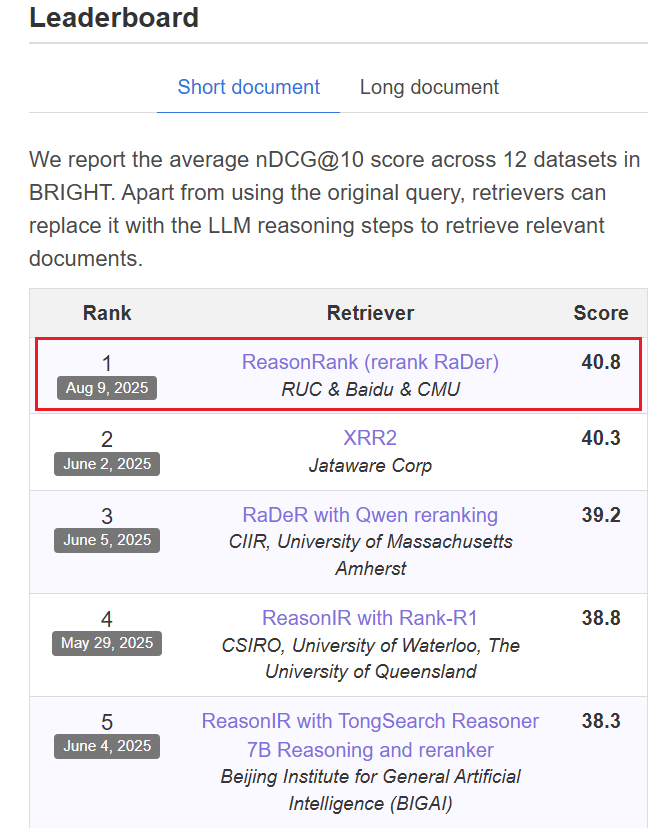

Excited to share ReasonRank: a listwise reranker that reasons before it ranks. On complex queries, ReasonRank-32B is #1 on the BRIGHT reasoning-intensive leaderboard (Aug 9, 2025; +0.6 vs. strong baselines). Working with Wenhan, Weiwei Sun, and others.

Granite Embedding R2 Models are here! 🔥 8k context 🏆 Top performance on BEIR, MTEB, COIR, MLDR, MT-RAG, Table IR, LongEmbed ⚡Fast and lightweight 🎯 Apache 2.0 license (trained on commercial friendly data) Try them now on Hugging Face 👉 hf.co/ibm-granite

Amazing to see FreshStack highlighted here in Chapter 2. Incredible work, Nandan Thakur and Hamel Husain!

Happy to see that our RLHN paper on cleaning IR training data (e.g. MSMARCO/HotPotQA) by relabeling hard negatives using LLMs has been accepted at #EMNLP2025 findings! 🎉🍾 Huge thanks to everyone involved: Xinyu Crystina Zhang, Xueguang Ma, and Jimmy Lin! 📜 arxiv.org/abs/2505.16967
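The RLHN tweet describes cleaning IR training data by using LLMs to relabel hard negatives. Below is a minimal sketch of that general idea. It assumes a hypothetical `judge` callable standing in for the LLM (here a keyword-matching stub, `toy_judge`) and assumes that hard negatives judged relevant are promoted to positives. The actual RLHN pipeline is described in the linked paper and may differ.

```python
from dataclasses import dataclass, field
from typing import Callable, List

@dataclass
class TrainingExample:
    query: str
    positives: List[str]
    hard_negatives: List[str] = field(default_factory=list)

def relabel_hard_negatives(
    example: TrainingExample,
    judge: Callable[[str, str], bool],  # hypothetical stand-in for an LLM relevance judge
) -> TrainingExample:
    """Return a new example where hard negatives the judge deems relevant become positives."""
    new_positives = list(example.positives)
    kept_negatives = []
    for passage in example.hard_negatives:
        if judge(example.query, passage):
            new_positives.append(passage)  # false negative: relabel as positive
        else:
            kept_negatives.append(passage)  # judged non-relevant: keep as a negative
    return TrainingExample(example.query, new_positives, kept_negatives)

def toy_judge(query: str, passage: str) -> bool:
    # Hypothetical heuristic for this demo only; a real setup would prompt an LLM.
    return all(word in passage.lower() for word in query.lower().split())

ex = TrainingExample(
    query="capital of france",
    positives=["Paris is the capital of France."],
    hard_negatives=[
        "The capital of France is Paris, on the Seine.",
        "Lyon is a large city in France.",
    ],
)
cleaned = relabel_hard_negatives(ex, toy_judge)
print(len(cleaned.positives), len(cleaned.hard_negatives))  # 2 1
```

Returning a new example rather than mutating the input keeps the original labels available for comparison.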

Had an interesting discussion with Xueguang Ma on BrowseComp-Plus about something I had noticed before: scaling text embedding models beyond 2-4B parameters brings only marginal gains on MTEB, but this no longer holds for deep research, where larger models seem to capture sophisticated query patterns much better.

Fan Nie (@fannie1208): Can frontier LLMs solve unsolved questions? [1/n]

Benchmarks are saturating. It’s time to move beyond.

Our latest work #UQ shifts evaluation to real-world unsolved questions: naturally difficult, realistic, and with no known solutions.

All questions, candidate answers,

![Fan Nie (@fannie1208) on Twitter photo](https://pbs.twimg.com/media/GzSx16Ia4AEnR7B.jpg)