Ali Behrouz

@behrouz_ali

Intern @Google, Ph.D. Student @Cornell_CS, interested in machine learning.

ID: 1611553104532762624

https://abehrouz.github.io/ 07-01-2023 02:39:47

111 Tweets

4.4K Followers

1.1K Following

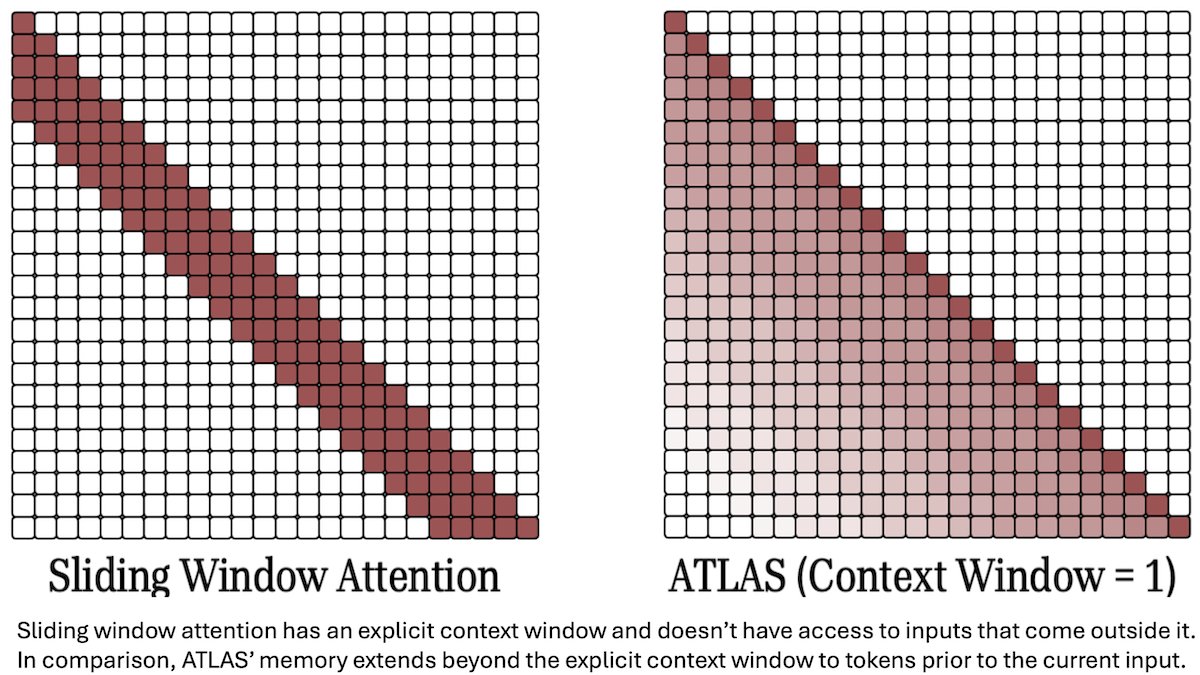

The author of Titans and Atlas from DeepMind is the upcoming guest on the Ground Zero pod! It was quite a chat with him on the recent progress with Titans and Atlas and their real-world adoption.